Camera-to-Cloud RAW is the Start of the Computational Revolution


Adobe recently announced new Camera to Cloud integrations, following its acquisition of Frame.io. The Fujifilm X-H2S will become the first stills camera to natively shoot “to the cloud”. This might seem like a niche feature, but look beyond the headlines and it could be a generational step change, not only because of the ability to save to the cloud (like Google Photos) but because of what this then enables.

One of the biggest — I’d argue the biggest — limitations of the camera has been storage. In the analog world, significant sums of money were invested in streamlining the workflow from photographer to newsroom, which involved getting the film to begin with before developing and printing it. In a world with a dearth of visual reportage, a photo scoop was literally headline-grabbing.

Digital photography caused a paradigm shift by offering instant image transfer, and newsrooms around the world jumped ship, but the storage problems didn’t go away. For example, the first DSLR, the 1991 Kodak DCS-100, used a Nikon F3 with a digital back connected to a battery pack, hard disk, and monitor. Fujifilm introduced the first fully integrated digital camera in 1988 with the DS-1P, which was critical as it included a memory card and so truly local storage.

And that’s the paradigm we’ve been stuck in: memory card storage. The implosion of the camera market is a direct result of the cannibalization of sales by the smartphone; this isn’t because consumers don’t want to take quality photos. They do. However, they value internet connectivity much more highly, and the definition of “quality” is fluid, to say the least.

The success of the smartphone comes from combining the PDA and camera into one device, producing photos that are “good enough.” This isn’t a playing field that camera manufacturers can compete on because most people don’t want to carry a phone and a camera; the consumer mass market is well and truly over. Instead, camera manufacturers have focused on technological innovation to make significantly improved hardware and so produce much better quality photos. Any nod to connectivity has been notional: we have WiFi and Bluetooth implemented in pretty much all models on the market, but these are simple in application and intended for one-off copies or auto-backup. The camera remains the central device, with the smartphone adjunct to it.

In the meantime, smartphone manufacturers have been far more innovative in what they actually do with the images they capture and so we had the birth of computational photography. This was principally developed to mitigate the extremely poor image quality; remember the 2012 iPhone 5 shipped with an 8-megapixel (6.15 mm x 5.81 mm) sensor, which is in stark contrast to the abilities of Nikon’s 36-megapixel full-frame D800.

Combining multiple shots can significantly reduce image artifacts, which can then be hidden behind sharpening and resizing for social media applications. This basic concept can be extended to HDR, panorama, and night mode to name a few applications. With Google extending the concept of the RAW file to computational (multi-shot) RAW, you have the basis for a step-change in processing. Except — of course — camera manufacturers are largely ignoring this space. Yes, you do have panorama modes, but they are rudimentary and JPEG based. To be fair, Olympus has been one of the few manufacturers to try to innovate; the OM-5 has a plethora of modes such as handheld high-resolution shot mode, Live Neutral Density (ND) mode, focus stacking, Live Composite, Live Bulb, Interval Shooting/Time Lapse Movie, Focus Bracketing, and HDR.
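To make the multi-shot idea concrete, here is a minimal sketch of why stacking helps. It assumes a flat gray patch, purely random Gaussian sensor noise, and frames that are already perfectly aligned; the function names and noise model are illustrative assumptions, not any manufacturer's pipeline. Averaging N such frames should cut random noise by roughly the square root of N.

```python
import random
import statistics


def capture_frame(true_value, noise_sigma, n_pixels, rng):
    """Simulate one noisy exposure of a flat patch (hypothetical sensor model)."""
    return [true_value + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def stack_frames(frames):
    """Average already-aligned frames pixel by pixel."""
    n = len(frames)
    return [sum(pixel_values) / n for pixel_values in zip(*frames)]


rng = random.Random(42)  # fixed seed so the run is repeatable
frames = [capture_frame(128.0, 10.0, 2000, rng) for _ in range(16)]

single_noise = statistics.pstdev(frames[0])
stacked_noise = statistics.pstdev(stack_frames(frames))

print(f"single frame noise:   {single_noise:.2f}")
print(f"16-frame stack noise: {stacked_noise:.2f}")
```

Real pipelines also have to align frames and reject moving subjects before merging, which is where most of the engineering effort goes.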

Solutions to Camera Computational Photography

So what can manufacturers actually do to stem the tidal wave of smartphone innovation? There is a need to move beyond the paradigm offered by existing camera firmware of a stand-alone device that is solely designed to capture images. One solution is to mirror the smartphone solution and do this in-camera by producing an Android ILC. Samsung tried this with the Galaxy NX and, more recently, Zeiss introduced the ZX1. Neither was a mainstream success. Camera manufacturers appear unwilling to open their hardware up for smartphone integration, yet they are also unwilling to fundamentally revise existing firmware to allow an app store of algorithms for computational processing.

Nikon has the potential to implement a hybrid version of this approach through its innovative MobileAir app. This recognizes that you won’t have a laptop with you and that a cable connection is preferable. In a workflow not too dissimilar to PhotoMechanic, there is a catalog of images to which you can add IPTC metadata, perform basic edits, and then upload to the internet (such as an FTP server). This can be for a single photo, batches, or fully automated.


None of this is extraordinary except that it is achieved from a camera connected directly to a smartphone; quite what is happening, and where, remains somewhat opaque, although the RAW files cannot be leaving the camera (given their size) which means either the camera is exporting a JPEG for editing on the smartphone or that all the editing is achieved in camera.

Given that Fujifilm has long offered in-camera RAW processing (and indeed used the camera to do this when attached to a PC), I’d like to think that it is the latter. This would mean the app is a simple image catalog that gets the camera to render the image before uploading it to a remote server. This has the potential to be transformational by pushing interaction to the smartphone, but keeping image processing on the camera.

Could it also offer the potential to integrate new image processing algorithms that could be driven from the smartphone, possibly using a plug-in model where programmers could access lower-level processing options to build new libraries of functions? Could this be split into both camera (RAW) and smartphone (JPEG) operations? There is genuine scope to develop a co-dependent model of smartphone-camera existence that manufacturers could exploit.

Camera to Cloud

And this brings us to Adobe’s announcement of the Camera to Cloud (C2C) integrations for the Fuji X-H2S. This is the first genuine attempt to solve the camera storage problem by getting the RAW files off the camera as soon as possible. So how does the service work?

Frame.io was developed to allow real-time video editing collaboration, and one aspect of this is real-time upload. If you have a Sony A7R IV, your uncompressed RAWs will be topping out at more than 100 megabytes, which would take a seriously long time to transfer over a 3G connection. However, it’s the rollout of 5G that has the potential to open up new opportunities, with speeds in the 15 to 30 megabytes per second range (and eventually much higher) meaning those images could be uploaded as you shoot them.
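A quick back-of-the-envelope calculation shows the scale of the difference. The 100 MB file size and the 15 to 30 MB/s 5G range come from the figures above; the 3G rate of 0.25 MB/s (about 2 megabits per second) is an assumption for illustration, and real-world throughput varies widely.

```python
def upload_seconds(file_megabytes, megabytes_per_second):
    """Time to upload one file at a sustained transfer rate."""
    return file_megabytes / megabytes_per_second


RAW_MB = 100  # one uncompressed RAW, per the figure above

# Sustained upload rates in megabytes per second.
LINKS = {
    "3G (assumed 0.25 MB/s)": 0.25,  # assumption, not from the article
    "5G low end (15 MB/s)": 15,
    "5G high end (30 MB/s)": 30,
}

for name, rate in LINKS.items():
    print(f"{name}: {upload_seconds(RAW_MB, rate):.1f} s per RAW")
```

Under these assumptions a single RAW takes several minutes on 3G but only a few seconds on 5G, which is the difference between batch transfer after the shoot and upload while shooting.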

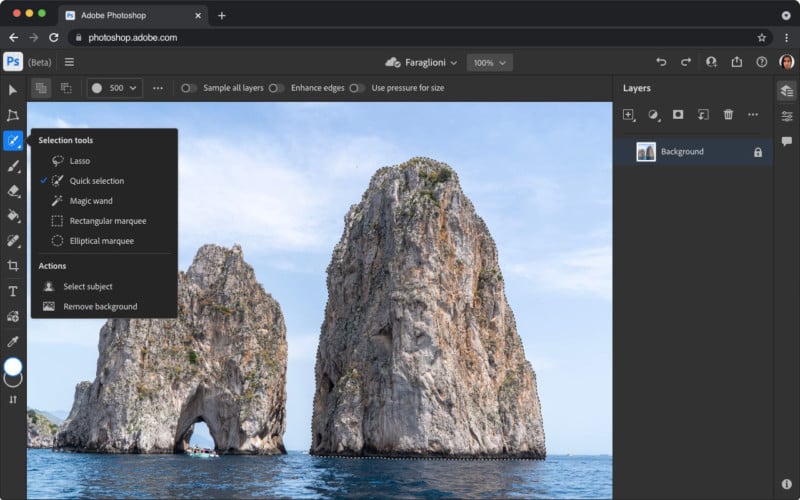

All of a sudden, the notion of using your camera, smartphone, or PC to process your images seems old hat. Storing your photos in the cloud, then using a smartphone or web app to process them makes much more sense. While Adobe has delivered its subscription apps via the web for more than a decade, it hasn’t been able to deliver a fully-featured dedicated web app in the way that Canva, Pixlr, or Fotor do, although Adobe Express is a nod in this direction and the company is bringing Photoshop to the browser very soon, and for free. When it does so, it will be transformative by providing photographers with familiar web-based tools.

Building on this base would be a series of automated workflows for standard computational techniques; for example, the camera could tag the next five images as a focus stack or HDR which would then automatically trigger the appropriate workflow and generate the output image. This could be downloaded straight back to the smartphone for immediate use or sent into another workflow for client upload, social media posting, or backup.
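One way such tag-triggered workflows might look on the cloud side is sketched below. Everything here is hypothetical: the `Upload` fields, the tag names, and the handler functions are invented for illustration and do not describe Frame.io's or Adobe's actual API. The sketch assumes the camera writes a group ID and a technique tag into each upload, and that frames in a group arrive consecutively.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass
class Upload:
    filename: str
    group_id: str   # hypothetical tag written by the camera
    technique: str  # hypothetical: "focus_stack", "hdr", or "single"


def merge_focus_stack(files):
    # Placeholder for a real focus-stacking job.
    return f"focus_stack({len(files)} frames)"


def merge_hdr(files):
    # Placeholder for a real HDR merge job.
    return f"hdr({len(files)} frames)"


WORKFLOWS = {"focus_stack": merge_focus_stack, "hdr": merge_hdr}


def dispatch(uploads):
    """Group consecutive uploads by tag and run the matching workflow."""
    results = []
    key = lambda u: (u.group_id, u.technique)
    for (_, technique), items in groupby(uploads, key=key):
        files = [u.filename for u in items]
        handler = WORKFLOWS.get(technique)
        if handler is None:
            results.extend(files)  # untagged shots pass through unchanged
        else:
            results.append(handler(files))
    return results


shots = [Upload(f"DSCF{i:04d}.RAF", "g1", "focus_stack") for i in range(5)]
shots.append(Upload("DSCF0005.RAF", "g2", "single"))
print(dispatch(shots))
```

The outputs of `dispatch` could then feed further steps such as client delivery, social posting, or backup.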

The benefit of the smartphone was integrating the camera; real-time RAW could see the integration of the web. Not only does this vision of the future move the computational element off-camera, but it also allows the paradigm to fit within existing firmware limitations. Pervasive 5G is the glue that binds together the highest quality imaging (the camera), with the best-in-class image processing to out-strip the smartphone at every level.

Quite how Camera to Cloud will develop remains to be seen, and the Fujifilm X-H2S is currently the only camera that supports upload in this way. However, expect this to change as the service expands and Adobe develops the opportunities. Getting other camera manufacturers on board is critical; this could be via Adobe’s Camera to Cloud or other similar services. Either way, getting to a critical mass is essential in order to fully realize the potential. Roll on the future!

Image credits: Adobe / Frame.io