The Best AI Image Generators in 2024


Whether you like them or not, artificial intelligence (AI) image generators have exploded in popularity this year, and the technology shows no signs of slowing down.

So if you’re unsure which AI image generator to use in 2024, this is a complete guide to the best options out there.

At a Glance

DALL-E 2

A product of OpenAI, the research lab co-founded by Elon Musk, DALL-E 2 (which we’ll refer to simply as DALL-E) is the tool most people name when asked about AI text-to-image generators.

When it launched in April 2022, DALL-E stunned social media with its ability to turn a brief description into a photorealistic image.

For the few people with early access to the invite-only tool, DALL-E felt almost like magic, whether it was generating pictures of “a raccoon astronaut with the cosmos reflecting on the glass of his helmet” or “teddy bears shopping for groceries in Ancient Egypt,” all from a simple text prompt.

"a raccoon astronaut with the cosmos reflecting on the glass of his helmet dreaming of the stars"@OpenAI DALL-E 2 pic.twitter.com/HkGDtVlOWX

— Andrew Mayne (@AndrewMayne) April 6, 2022

Ai text to image.

Here’s “Two teddy bears shopping for groceries in ancient Egypt” converted from Text to image.

Using OpenAI’s DALL-E 2.

Mad. pic.twitter.com/hUOWxrquyS

— murfin.eth (@JoeMurfin) April 11, 2022

Since then, DALL-E has gained a reputation as the leading AI text-to-image generator available. It is known for producing the best results and being one of the easiest systems to use.

DALL-E is by no means the only machine learning software that can generate images. So what is behind the AI generator’s unparalleled reputation? And why is the technology considered so groundbreaking and disruptive?

First, DALL-E’s images are visually appealing, which is key to its success. While other AI image generators often produce artworks with an apocalyptic or darker tone, DALL-E creates images that are strikingly realistic and far more aesthetically pleasing to creators who already have a keen artistic sense.

When DALL-E burst onto the scene, it represented a huge step forward in AI image generation. Unlike its predecessors, it gave users an extraordinary degree of control over the style, subject, and attributes of the images they were creating, even letting them specify the lens and aperture of their AI-generated “photos”. The possibilities for image creation seemed endless.

Early impressions of @OpenAI's DALL-E 2. 🧵

All images below were produced by AI, with me feeding it the quoted prompt. I was most curious about how helpful such a tool might be in creative work.

"A sloth playing a guitar, photograph 35mm lens" pic.twitter.com/EHOXlrAOl9

— Grant Sanderson (@3blue1brown) June 14, 2022

DALL-E also blew users away with how much better it understands text prompts than the software that preceded it. This is largely down to OpenAI’s CLIP model, which learns to associate text with images: DALL-E 2 uses CLIP’s representation of a prompt to guide image generation, while the original DALL-E was built on a version of OpenAI’s GPT-3 language model.

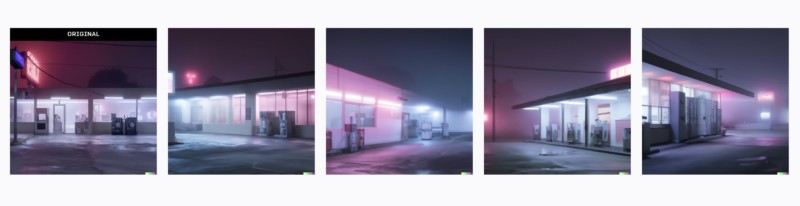

So how can you use DALL-E? As well as using it to turn sentences into images, you can also prompt DALL-E with an image. There are two ways to do this: a variation or an edit.

A variation simply prompts DALL-E with an image rather than written text. In response, DALL-E generates a series of new images that mirror the aesthetic and subject of the original, each with its own twist.

Edits are the third way to prompt DALL-E and are perhaps one of the software’s most revolutionary features. You can provide an image and ask DALL-E to add a “baby elephant bathing” into a photograph of water, sharpen an out-of-focus ladybug, remove an object in an image or “make it nighttime”. The AI technology even understands things like reflections and will update these accordingly when editing.

DALL-E only generates square outputs. However, its new editing feature, “Outpainting”, lets you extend an image beyond its original borders.

Outpainting lets users expand an image outwards to a wider field of view, creating larger pictures in any aspect ratio. When you enter a prompt, DALL-E takes the image’s existing visual elements into account to maintain the context of the original, using shadows, reflections, and textures to create a background designed to blend seamlessly with it.

Outpainting: August Kamp

These mind-blowing capabilities make DALL-E feel like it could be a powerful and important editing tool for photographers in the future.

OpenAI’s second-generation DALL-E 2 system has recently opened to the public, and anyone can now create an account.

Each DALL-E 2 account receives 50 free credits and a further 15 free credits each month. Additional credits cost $15 per 115, and each credit returns four images for a prompt or instruction.
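To translate the credit pricing into a per-image figure, the short Python sketch below uses only the numbers quoted above ($15 for 115 credits, four images per credit). The function name `cost_per_image` is our own, and the figures reflect pricing at the time of writing, so they may have changed since.

```python
def cost_per_image(price_usd: float, credits: int, images_per_credit: int) -> float:
    """Return the dollar cost of a single generated image."""
    cost_per_credit = price_usd / credits
    return cost_per_credit / images_per_credit


# Figures as quoted in this article: $15 buys 115 credits, 1 credit = 4 images.
dalle_per_image = cost_per_image(15.0, 115, 4)
print(f"DALL-E 2: ${dalle_per_image:.3f} per image")
```

That works out to roughly 3 cents per image. By the same arithmetic, the 50 free credits cover 200 images (50 × 4).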

OpenAI explicitly says users “get full rights to commercialize the images they create with DALL-E, including the right to reprint, sell, and merchandise,” although admittedly this is still a legal grey area. The company has designed DALL-E 2 to refuse to create images of celebrities or public figures. The system also will not generate any explicit, gory, or political content.

How to get started: To sign up for DALL-E 2, click here.

Midjourney

Along with DALL-E and Stable Diffusion, Midjourney ranks as one of the most popular and well-known AI text-to-image generators out there.

Considered one of the most evocative platforms for AI image generation, Midjourney made headlines when one of its users won a fine art competition using a picture he created with the software.

Somewhat unusually, Midjourney is operated through a Discord server and uses Discord bot commands to generate high-quality images in a distinctly artistic style. Users enter a text prompt to create clear, striking images that often have an apocalyptic or eerie quality.

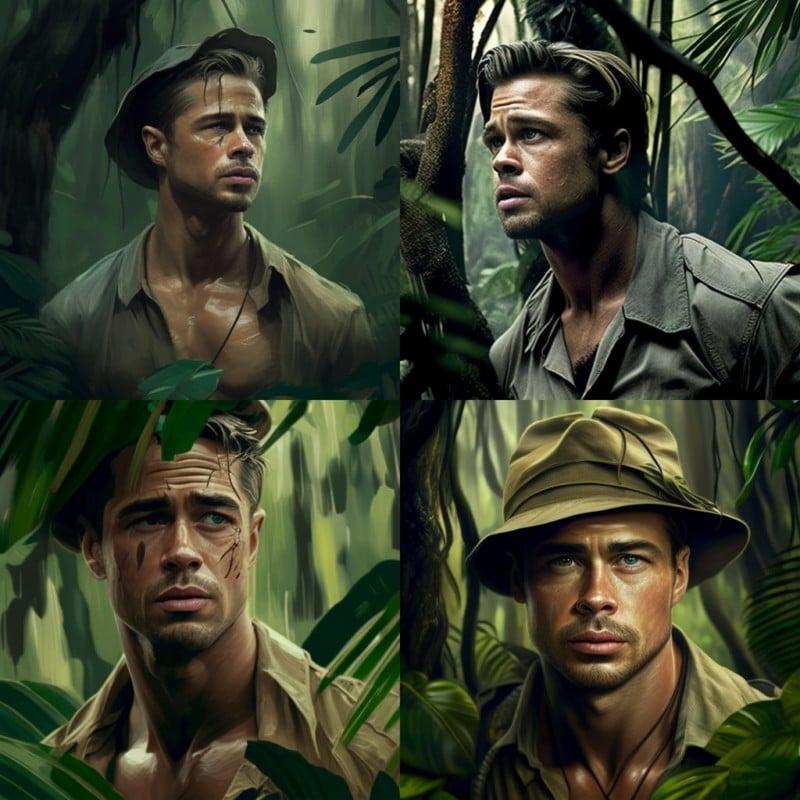

Unlike DALL-E, Midjourney will generate pictures of celebrities and public figures. Discord users often use the software to imaginatively visualize their favorite actors in certain film roles.

One possible drawback is that Midjourney’s output is heavily stylized, which can make it difficult to create photorealistic images.

Midjourney founder David Holz says that the system was never designed to create realistic-looking imagery and this is an important part of Midjourney’s philosophy as an AI generator.

“We have a default style and look, and it’s artistic and beautiful, and it’s hard to push [the model] away from that,” Holz tells The Verge. “Maybe if you spend 100 hours trying, you can find some right combination of words that makes it look really realistic, but you have to really work hard to make it look like a photo.”

“We are focused toward making everything beautiful and artistic looking,” adds Holz.

However, several Midjourney users have shown that it is possible to create ultra-photorealistic images with the software, given an advanced knowledge of the right text prompts.

Another downside is that you have to enter prompts through a Discord server, which can be tricky to understand at first. Discord’s interface can also be frustrating, and your own AI art can easily get lost among the many other user requests in a channel.

However, according to Holz, this was always deliberate as Midjourney is intended to be a “social experience.” It can certainly be fascinating to see other users’ artwork as you wait for your image to load on Midjourney.

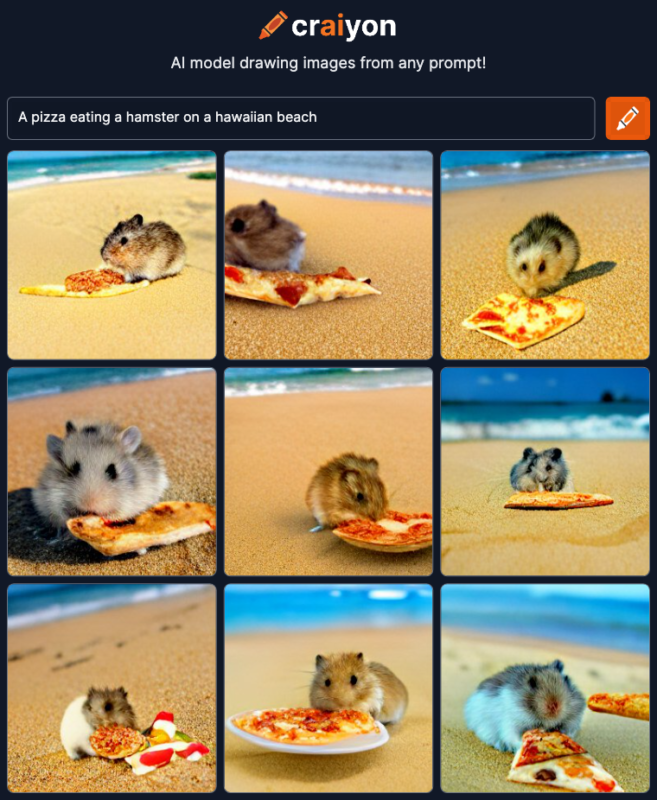

So how do you use Midjourney? The platform opened to everyone as a beta in July 2022. Once you have joined the Midjourney Discord server, you can use the AI generator through Discord’s web interface or the Discord app.

To generate artwork, go to one of the server’s channels, for example #newbies-126.

From there, type the bot command “/imagine” in the channel. The command automatically adds a “prompt:” field, where you describe what you want to see as an image.

Type the keywords for your image after “prompt:”, otherwise the command will not work. Then press Return and wait for your artwork to be created.

For example, you could type “A pizza-eating hamster on a Hawaiian beach”, and after about a minute your image should appear among other users’ requests.
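The steps above boil down to a single line of text typed into a channel. The Python helper below is hypothetical (it is not part of Midjourney or Discord and does not talk to either); it simply assembles that text and enforces the rule that the command fails without keywords after “prompt:”.

```python
def build_imagine_command(keywords: str) -> str:
    """Return the text of a Midjourney /imagine command.

    Hypothetical helper for illustration only: it builds a string and
    does not connect to Discord or Midjourney.
    """
    cleaned = keywords.strip()
    if not cleaned:
        # Mirrors the rule above: no keywords after "prompt:", no image.
        raise ValueError("/imagine needs keywords after 'prompt:'")
    return f"/imagine prompt: {cleaned}"


command = build_imagine_command("A pizza-eating hamster on a Hawaiian beach")
print(command)
```

In practice Discord fills in the “prompt:” label for you, so you only type the part after it.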

The Midjourney server’s three rules when creating artwork are “don’t be a jerk, don’t use the bot to make inappropriate content, and be respectful to everyone.”

The first 25 images on Midjourney are free. After that, the Basic plan costs $10 per month for 200 images, and a Standard membership costs $30 per month for unlimited use. Corporate use of the generated images requires a special enterprise membership of $600 per year; otherwise, the images belong to you.
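Because Basic covers only 200 images, anyone who needs more has to move to Standard. The Python sketch below, using only the prices quoted above (which may have changed), compares Standard’s effective per-image cost at a few monthly volumes against Basic’s rate; the variable and function names are our own.

```python
# Midjourney prices as quoted in this article (USD per month).
BASIC_PRICE = 10.0
BASIC_IMAGES = 200      # images included in the Basic plan
STANDARD_PRICE = 30.0   # flat rate, unlimited use

basic_per_image = BASIC_PRICE / BASIC_IMAGES


def standard_per_image(images_per_month: int) -> float:
    """Effective cost per image on the flat-rate Standard plan."""
    return STANDARD_PRICE / images_per_month


for n in (200, 600, 1200):
    print(f"{n} images: Standard ${standard_per_image(n):.3f} "
          f"vs Basic ${basic_per_image:.3f}")
```

Standard matches Basic’s $0.05 per image at 600 images a month and undercuts it beyond that.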

Once you get the hang of it, Midjourney is an excellent AI generator that consistently produces stunning and frequently thought-provoking images in its own unique style.

How to get started: To join the beta version of Midjourney, click here.

Stable Diffusion

While DALL-E 2 kept many users on a waitlist for months, one AI text-to-image generator gets top marks for accessibility: Stable Diffusion.

Developed by Stability AI in collaboration with EleutherAI and LAION, Stable Diffusion is an excellent AI image generator for anyone who wants to start creating their own digital art now.

What makes Stable Diffusion special is Stability AI’s openness about its software. The company has released Stable Diffusion’s source code under the CreativeML OpenRAIL-M license, in stark contrast to closed competitors such as DALL-E.

As Stable Diffusion is open source, users have already begun improving and building on the original code. There are dozens of repositories with different features and optimizations. A Reddit user even successfully created a Photoshop plug-in for Stable Diffusion. There is also a plug-in available for Krita.

It is this community and innovation around Stable Diffusion that makes the AI image generator so exciting for users, although admittedly it can be hard to navigate between the different repositories available online.

If you are looking for the original Stable Diffusion, you can either run the software on your own computer or use the beta web interface, DreamStudio. New DreamStudio users get 200 free credits; after that, £1 ($1.18) buys 100 generations, while £100 (~$118) buys 10,000 generations.
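The two DreamStudio prices above work out to the same rate. The Python sketch below, using only the quoted GBP figures (which may have changed), shows there is no bulk discount: both packs cost £0.01 per generation. The pack labels are our own.

```python
# DreamStudio prices as quoted in this article: (price in GBP, generations).
PACKS = {
    "£1 pack": (1.0, 100),
    "£100 pack": (100.0, 10_000),
}

# Cost of a single generation for each pack.
per_generation = {name: price / gens for name, (price, gens) in PACKS.items()}

for name, cost in per_generation.items():
    print(f"{name}: £{cost:.2f} per generation")
```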

The beta version of Stable Diffusion can produce photorealistic 512×512 pixel images. As with DALL-E, you can type in a text prompt and the system will generate an image. Additionally, it can produce photorealistic artworks using an uploaded image combined with a written description.

To train Stable Diffusion, Stability AI used a cluster of 4,000 Nvidia A100 GPUs and a subset of the LAION-5B dataset. Because that training data spans a huge range of web images, and the model lacks DALL-E 2’s restrictions on public figures, Stable Diffusion can generate creative images of celebrities, cartoon characters, and public figures that OpenAI does not allow.

The quality of Stable Diffusion’s images can be very impressive. In a now-viral Reddit post, one user claimed to have combined a text prompt with a sketch to generate a hyper-realistic image of a futuristic metropolis.

However, Stable Diffusion can be harder to master than DALL-E, and the beta version is not as advanced as its competitors. It can be tricky to balance the image and word the text prompt precisely enough to get the result you want, although the company does provide a guide on this.

But Stable Diffusion is still a remarkable piece of technology and the software’s accessibility is a turning point for AI image generation.

How to get started: To use Stable Diffusion on your web browser, click here. To download Stable Diffusion on your computer, click here for more details.

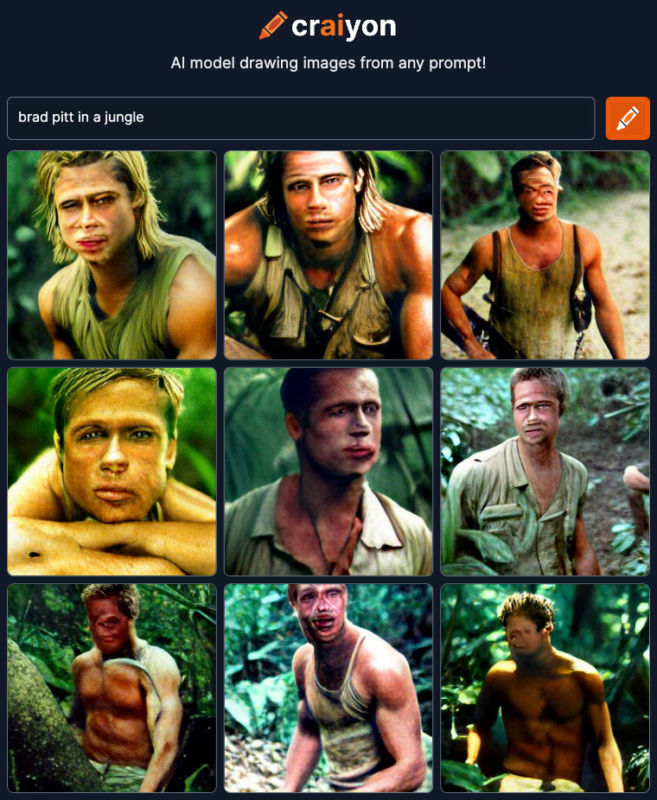

Craiyon (Formerly DALL-E mini)

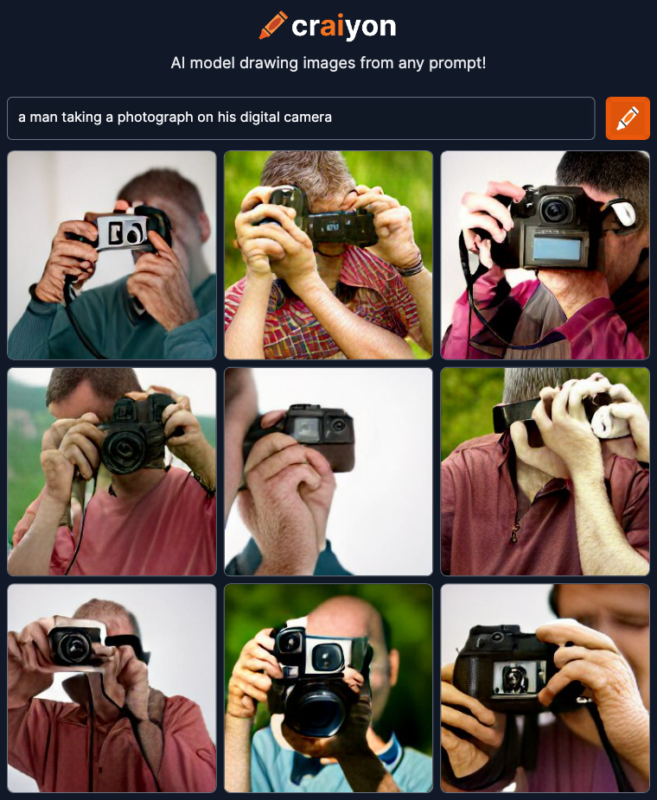

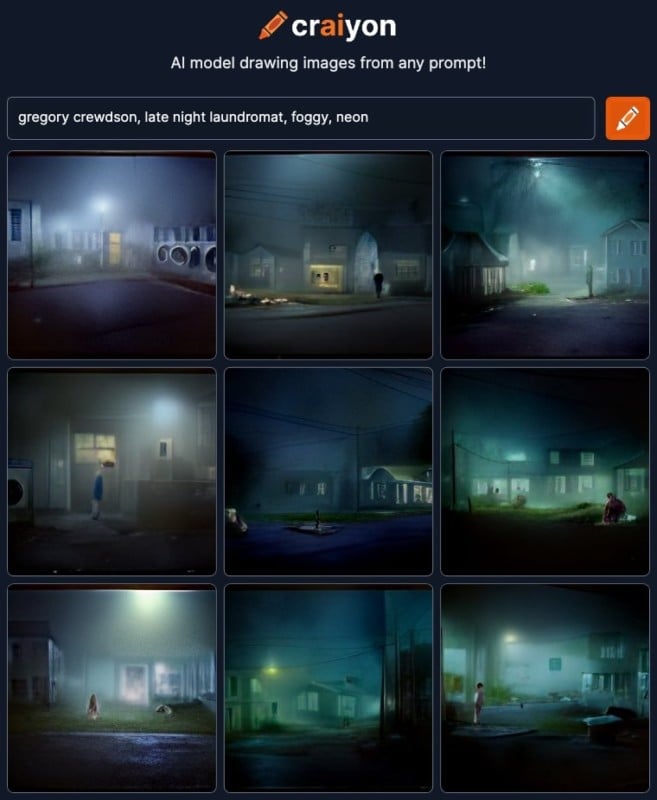

Formerly called DALL-E mini, Craiyon is another AI image generator that is available online.

Despite its former name, Craiyon has no connection to OpenAI beyond drawing on the large amount of publicly available information OpenAI has released about its model.

Unlike DALL-E, Craiyon is completely free to use and accessible to anyone through its website. Simply enter a text prompt, and Craiyon’s interactive web demo will generate images in around two minutes.

Another key difference between DALL-E and Craiyon is that the software is not censored at all, meaning that absolutely any prompt will be accepted by the AI generator. You can also request the picture to be created in a certain style.

But Craiyon, created by software engineer Boris Dayma, struggles to match DALL-E and other competitors on image quality. The faces of celebrities and cartoon characters are often unrecognizable in the generated artwork.

This does not mean Craiyon is unable to make faces; it simply requires a lot of effort on the user’s part. Some Craiyon users have reportedly found that writing long, detailed prompts that list the size and location of each part of the face helps create better faces.

It is also only possible to download the images you create on Craiyon as a screenshot rather than a high-resolution file.

While it may not be the most state-of-the-art system, Craiyon is an unfiltered and fun AI generator that anyone can easily access.

How to get started: To use Craiyon, click here.

TikTok

TikTok has launched a basic AI image generator that lets users make custom green screens for their videos.

The video platform’s new effect is called “AI Greenscreen” and allows TikTok users to type in a text prompt that the software will then generate as an image.

However, the basic text-to-image generator is a far cry from the likes of DALL-E 2 and Midjourney as it only appears to produce swirly, abstract images.

Training an AI image generator requires an enormous amount of computing power, so the rudimentary output of TikTok’s tool is a reminder of how difficult it is to build a bespoke AI image service.

TikTok’s tool highlights the explosion in popularity that AI image generators have had and could be the company’s first inroad into this burgeoning technology.

How to get started: To create an AI Greenscreen on TikTok, click here.

Picsart


Picsart is perhaps one of the most approachable text-to-image generators on mobile, since the app was already extremely popular when it added generative AI at the end of 2022. The system is based on Stable Diffusion’s open-source code, but the company says it has done significant work of its own to make it faster and produce higher-quality results.

What also sets Picsart apart is that its generator lives inside a photo editing app, so anything you create can immediately be edited further. Its suite of tools is full-featured and easy to use, giving users a chance to put more of a human touch on the process.

Those benefits haven’t gone unnoticed, as less than a month after launch, Picsart’s AI image generator was being used to make more than a million images a day. The company has been steadily adding features to the platform as well, including the ability to replace objects in existing images, create new backgrounds, and create AI avatars based on photos of people (up to two at a time). Its generator can even turn rough sketches into finished pieces of art.

One thing to keep in mind, though, is that while Picsart’s baseline AI image generator is free, the additional features are not. Pricing varies, though many features are available through Picsart Gold at $4.66 per month.

How to get started: You can start making AI images through the Picsart iOS app or its web app.

NightCafe AI

NightCafe Studio lets you generate images in a number of different styles and offers various preset effects, ranging from cosmic to oil painting and more.


The name refers to The Night Café, a painting by Vincent van Gogh. The platform uses the VQGAN+CLIP method to generate AI art.

The platform is easy for novices to get the hang of and it’s known for having more algorithms and options than other generators.

For more experienced users, “advanced mode” lets artists adjust the weight of individual words in a prompt by adding modifiers. In this mode, you can also control the artwork’s aspect ratio, quality, and runtime before NightCafe produces it. Previously created artworks can also be “evolved” to include fresh features.

New NightCafe users receive five free credits on signup, and every day at midnight the account receives five more. Additional credits, available for as little as $0.08 each, can be bought with PayPal, Apple Pay, Shopify, Visa, Mastercard, Google Pay, and American Express.

How to get started: To use NightCafe, visit its website.