New Neural Network Could Majorly Improve Smartphone Photography

Glass Imaging uses artificial intelligence to “extract the full image quality potential from hardware on current and future smartphone cameras by reversing lens aberrations and sensor imperfections.”

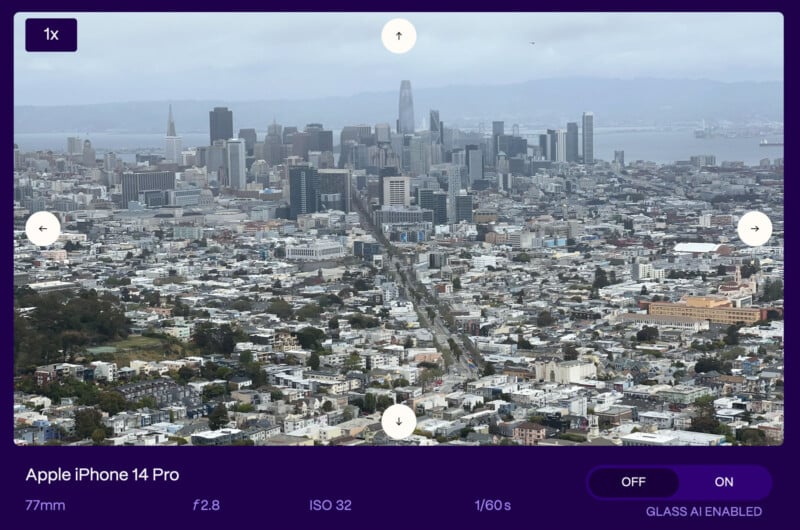

The company has put its impressive AI technology to work with the new iPhone 15 Pro Max smartphone, showing dramatic improvements from the flagship phone’s 5x zoom lens.

Glass Imaging Argues That Lackluster Software Holds Back the iPhone 15 Pro Max’s Telephoto Camera

The iPhone 15 Pro Max’s telephoto lens sports a unique tetraprism design and offers a 120mm equivalent focal length and f/2.8 aperture. The camera and lens combo is impressive from a technical perspective. However, the real-world results leave a bit to be desired.

“The full potential of this hardware is limited as the accompanying software from Apple doesn’t extract all the detail this lens can capture,” Glass Imaging claims.

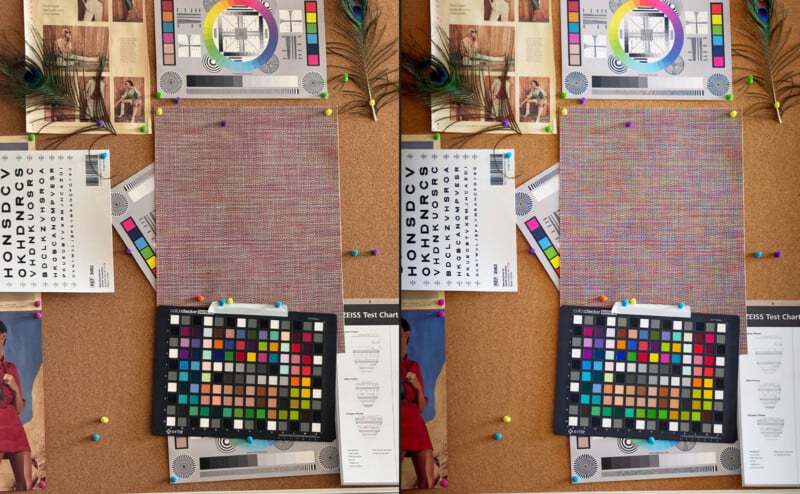

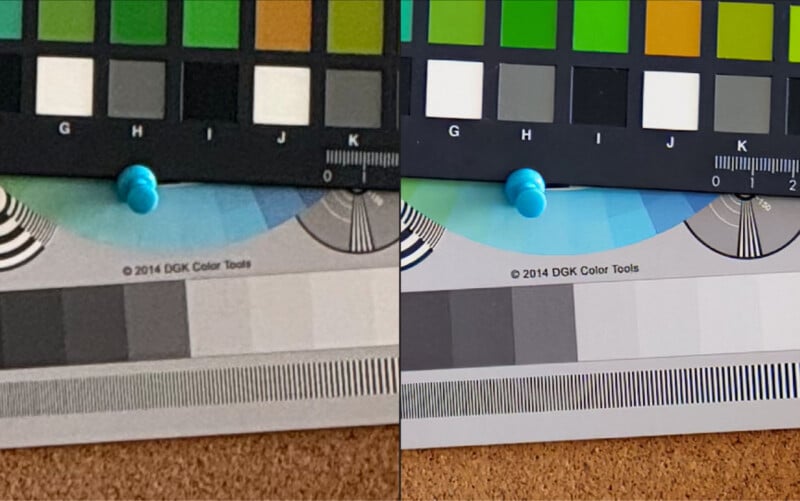

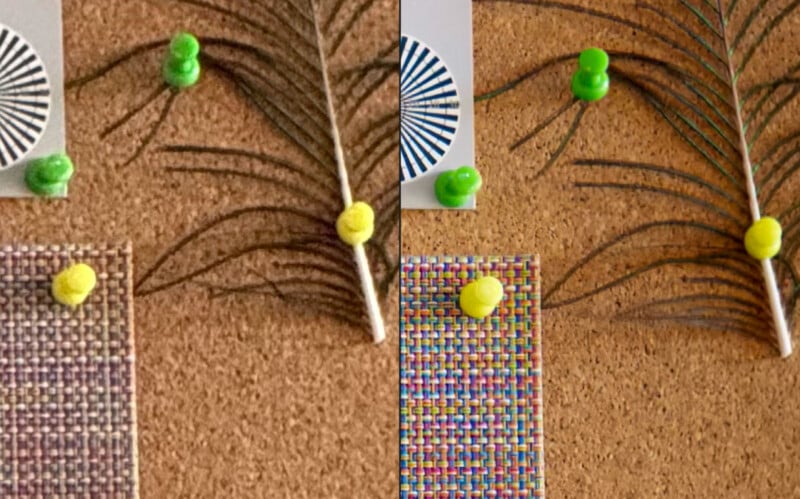

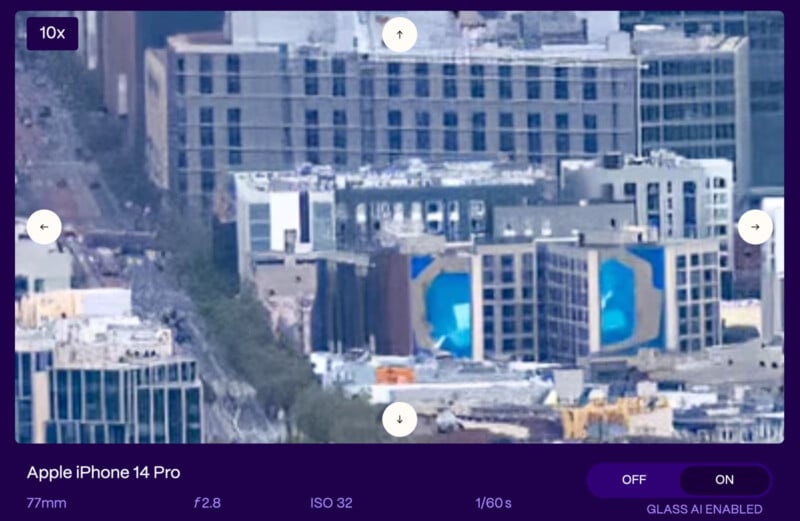

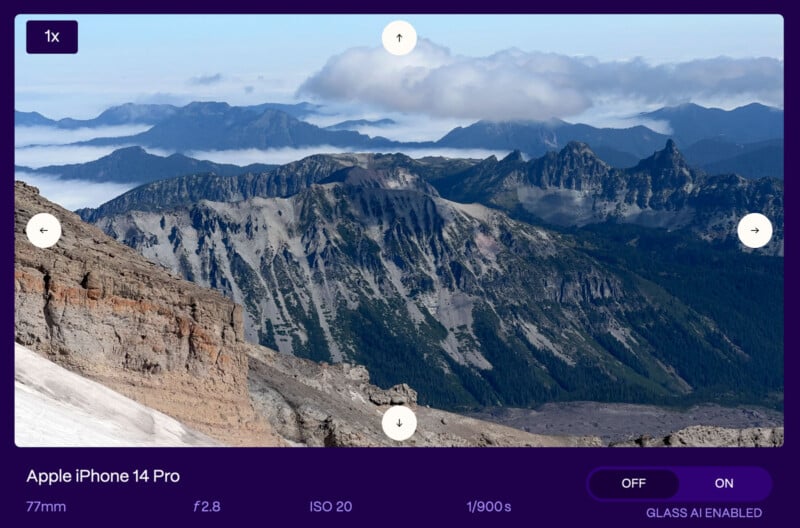

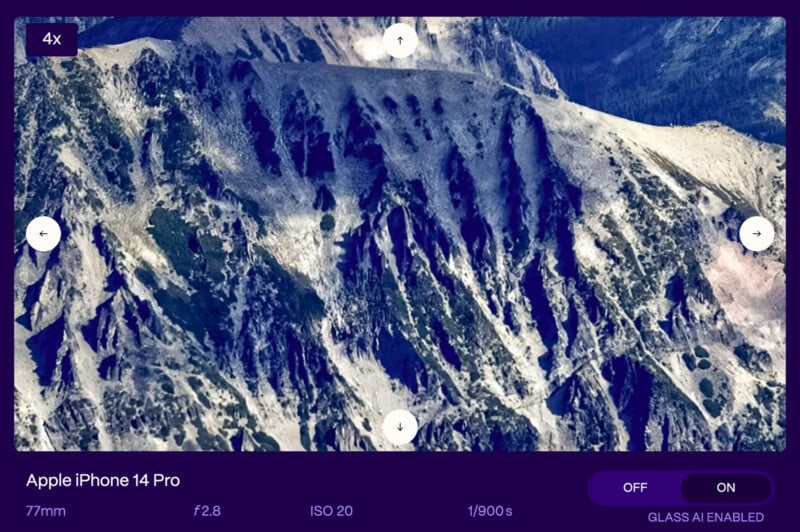

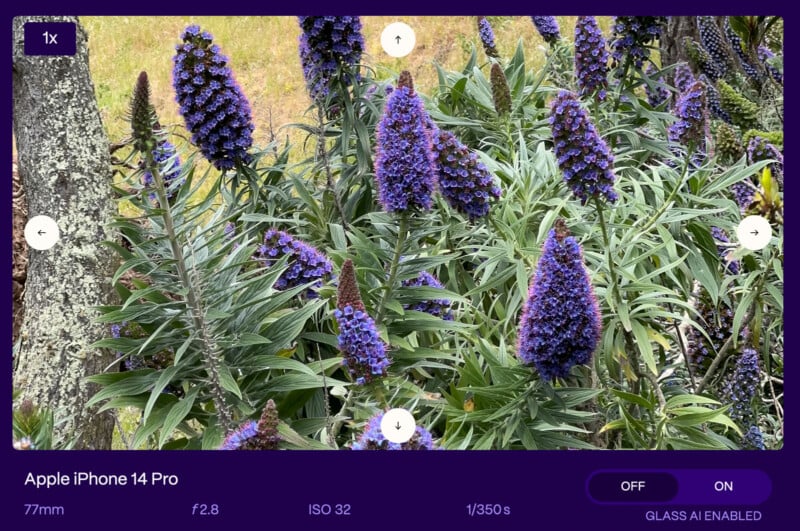

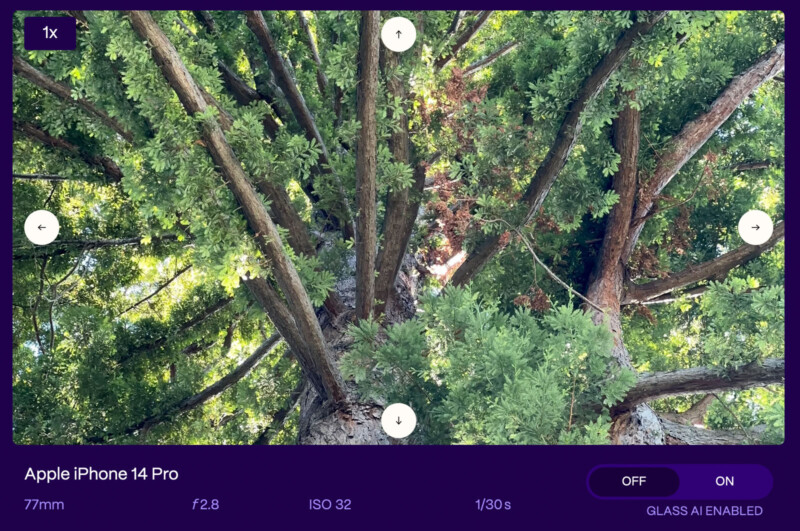

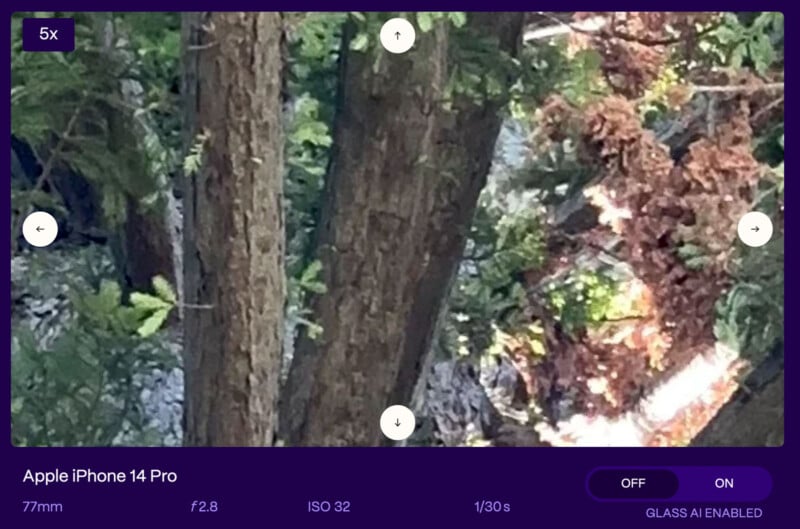

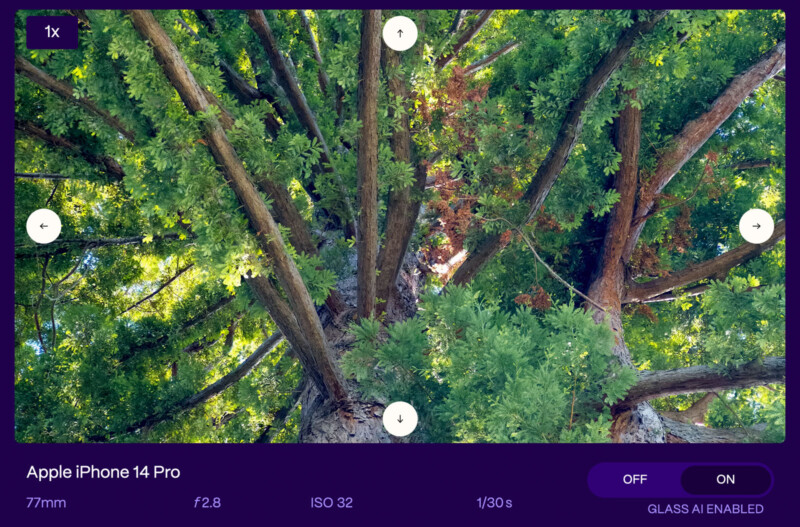

In a journal entry, Glass compares the iPhone 15 Pro Max’s default processing against its advanced GlassAI processing. The results demonstrate a significant overall image quality improvement, including better detail, clarity, and color accuracy.

Glass says that while Apple’s hardware is impressive, the iPhone’s native software “occasionally falls short of harnessing the full capabilities of the hardware,” potentially resulting in compromised image quality. Despite dedicating significant resources to its image signal processing and image processing algorithms, Glass argues that “there’s significant room for improvement.”

Neural Networks to the Rescue

So, what is Glass doing, and how does its AI processing improve image quality? In its own words, “Glass Imaging harnesses the power of artificial intelligence to get the most from the raw image burst data — the unprocessed sensor information captured during a shot. This AI-centric approach allows for intelligent decisions on image enhancement, going beyond the limitations of traditional handcrafted algorithms.”

At the heart of the company’s approach is neural network training. “At our labs, for each camera on that phone (wide, ultra-wide, tele, selfie), we train a custom Neural Network for low light, bright light, and super res (zoom),” Ziv Attar, Glass’s CEO, tells PetaPixel.

“After training, we port these networks to the device’s chipset and optimize for speed and power consumption. Our neural networks take in a burst of RAW images and outputs a full processed image. Our Neural networks are trained to characterize the optical aberrations of each lens at any location on the sensor and are capable of correcting these aberrations,” Attar continues.

The entire training process takes “a few days.”

While the company cannot fully detail its “unique way” of “teaching” its AI to reverse lens aberrations (since it is a proprietary technology that the company is actively marketing to smartphone makers), Attar promises that GlassAI’s results are “more true and noise free compared to traditional processing approaches,” such as those that Apple uses.

“The data (photons) are there but iPhone and other phones must apply very aggressive color denoise in order to remove color noise which is very disturbing. When they do that, they desaturate colors on fine details,” Attar explains.

“You don’t see this in big cameras as it’s mostly a problem of tiny pixels that are noisy and suffer from signal cross contamination between neighboring pixels that are of different color,” he continues. “Our networks are able to look at a large area and understand what pixels lie on what thread or object and handle the color noise removal in a much smarter way.”

The result is more accurate color information in the output image. Attar says that original iPhone images in Glass’s examples appear less colorful in part because default image processing mixes up small, colorful pixels with neighboring pixels when performing denoising.
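The desaturation Attar describes can be reproduced with a toy example. The sketch below is not Glass's or Apple's processing; it assumes a simple box blur applied only to the color-difference part of each pixel, which is one basic way to suppress color noise. The pixel values, the `chroma_denoise` helper, and the blur radius are all made up for illustration.

```python
def box_blur(values, radius):
    """Average each sample with its neighbours within `radius` (windows shrink at the edges)."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out


def chroma_denoise(row, radius):
    """Blur only the colour-difference part of each RGB pixel, leaving brightness alone."""
    luma = [sum(px) / 3 for px in row]
    channels = []
    for c in range(3):
        chroma = [px[c] - y for px, y in zip(row, luma)]
        blurred = box_blur(chroma, radius)
        channels.append([y + d for y, d in zip(luma, blurred)])
    return [tuple(ch[i] for ch in channels) for i in range(len(row))]


def saturation(px):
    """Crude saturation measure: spread between the strongest and weakest channel."""
    return max(px) - min(px)


# Hypothetical scene: one red thread, a single pixel wide, on a neutral gray background.
gray = (0.5, 0.5, 0.5)
red_thread = (0.9, 0.2, 0.2)
row = [gray] * 4 + [red_thread] + [gray] * 4

denoised = chroma_denoise(row, radius=2)
print(f"thread saturation before: {saturation(row[4]):.2f}")
print(f"thread saturation after:  {saturation(denoised[4]):.2f}")
print(f"neighbour saturation after: {saturation(denoised[3]):.2f}")
```

With these made-up values, the thread keeps its brightness but retains only a fifth of its original saturation, because its color is averaged with four gray neighbors, and those neighbors pick up a faint red cast in return. A denoiser that knows which pixels belong to the thread could avoid averaging across that boundary, which is the behavior Attar attributes to Glass's networks.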

GlassAI is “fundamentally improving reconstruction of existing data that exists on the sensor” and is not synthesizing new information or creating “fake” data.

Is There a Downside to Neural Networks?

If this AI processing approach delivers such impressive results — as it does — then it should be a pretty easy sell. Is there any downside?

Attar says “there is no downside,” but admits that training high-quality neural networks is extremely challenging. Running the networks also places relatively high demands on the processor, demands that mobile hardware has only been able to meet in “the last year or so,” in his words.

“There were neural processors on Android and Apple devices for a few years now but they were not capable of handling pixel level operations and were only used for semantics and global operations like automatic white balance, automatic exposure, etc.,” Attar explains.

Further, the neural network processing requires time to execute, even on swift, powerful mobile devices like the iPhone 15 Pro Max. However, the processing is pretty quick. A 12-megapixel image captured by the Pro Max’s telephoto camera takes about half a second to process, says Attar, who adds that the standard iPhone processing of a 12-megapixel file “takes longer than that.” So, practically speaking, processing time shouldn’t be a major inhibitor.

The user also doesn’t need to wait for the processing to complete: Attar says the photographer can continue shooting while it runs. It does take some time to see the final output file, though, and the initial image on the screen is a low-quality version.

He also says that despite the higher image quality, the image files are smaller because they have less noise, and noise makes files more challenging to compress. So, again, what is the cost here?

“The only cost is you need to develop tools to train these networks: large labs, robotic equipment, tons of software that controls the training procedure,” Attar says. While that all sounds expensive, it seems feasible for large companies to achieve.
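Attar's earlier point that noise makes files harder to compress is easy to check in principle. The sketch below is only an illustration under stated assumptions: it uses Python's lossless `zlib` compressor rather than the JPEG or HEIC encoders a phone would use, and a synthetic gradient with Gaussian noise rather than real photos. The `gradient_image` and `add_noise` helpers and the noise level are hypothetical.

```python
import random
import zlib

WIDTH, HEIGHT = 256, 256


def gradient_image():
    """A smooth horizontal ramp, one byte per pixel, standing in for a clean photo."""
    return bytes(x for _ in range(HEIGHT) for x in range(WIDTH))


def add_noise(pixels, sigma, seed=0):
    """Add Gaussian noise to every pixel and clamp the result to the 0..255 byte range."""
    rng = random.Random(seed)
    return bytes(min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in pixels)


clean = gradient_image()
noisy = add_noise(clean, sigma=8)

# Same pixel count in both cases; only the noise differs.
clean_size = len(zlib.compress(clean, 9))
noisy_size = len(zlib.compress(noisy, 9))
print(f"clean: {clean_size} bytes, noisy: {noisy_size} bytes")
```

The noisy version compresses far less well because random variation is, by definition, hard to predict, and compressors save space by exploiting predictable patterns. Real image codecs behave differently in detail, but the same intuition applies.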

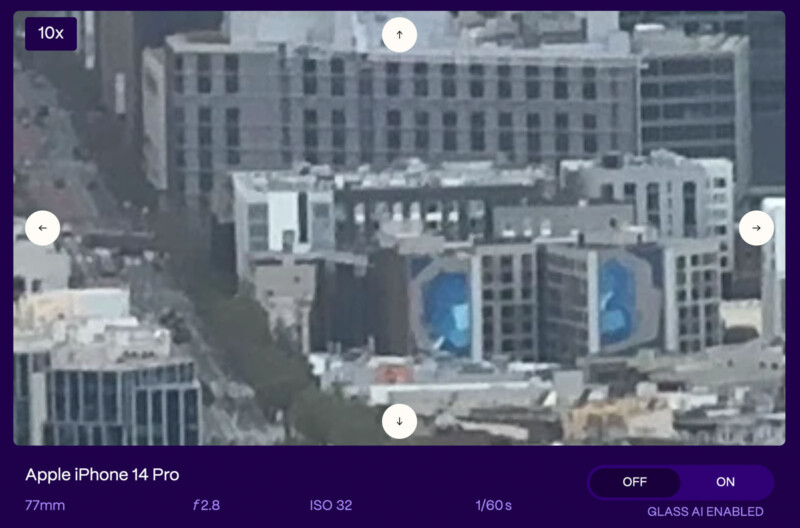

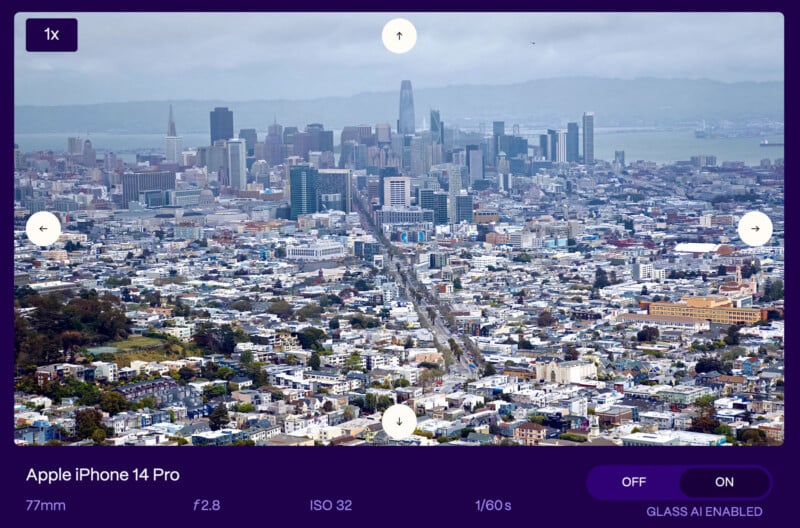

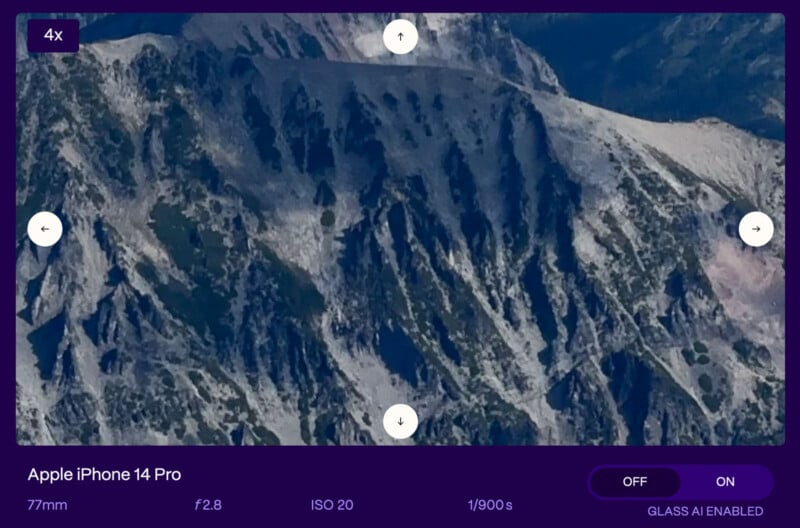

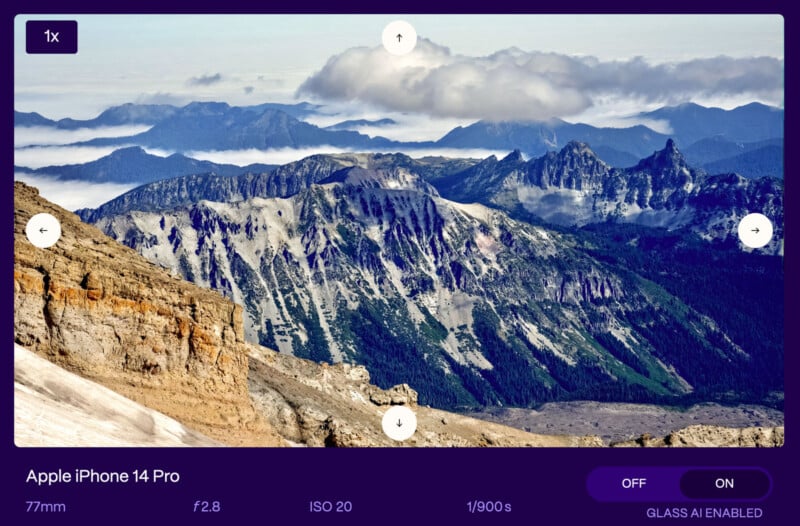

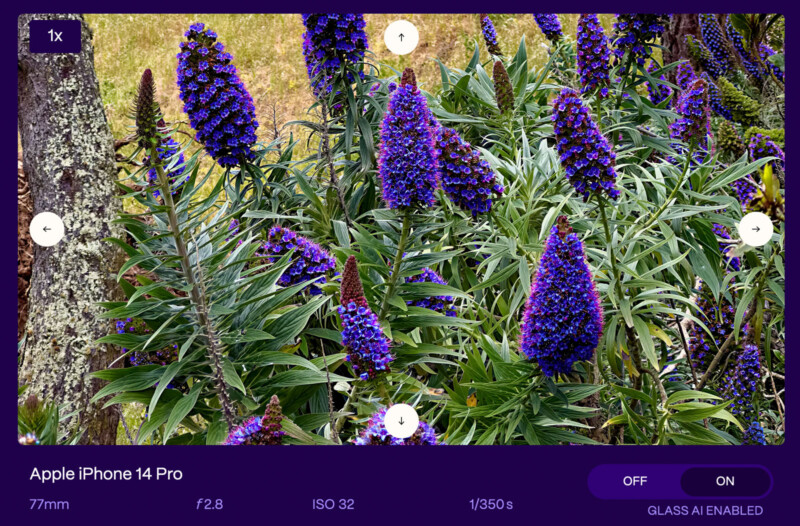

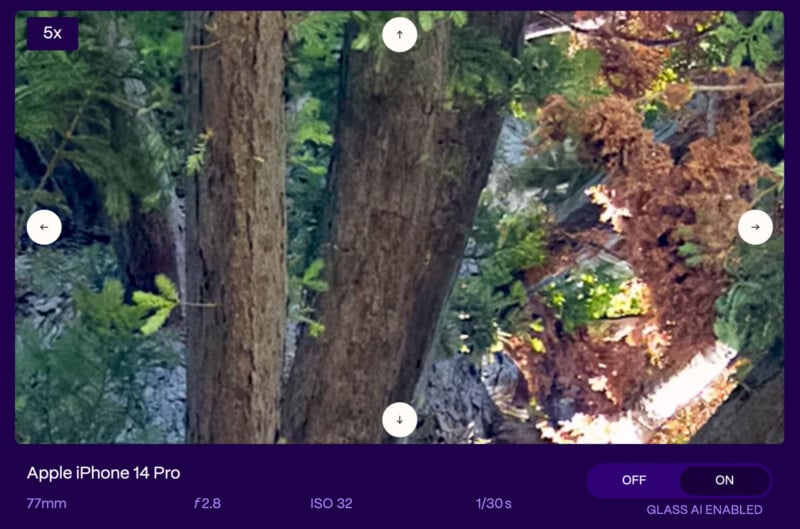

Additional Examples of GlassAI and Last Year’s iPhone 14 Pro

What’s In the Pipeline for Glass Imaging?

“We are working to sell our software to phone makers around the world. The product is an SW library that includes custom-trained Neural Networks for each camera on a phone (selfie, wide, ultra-wide, tele…). Our solution will be in some phone models in 2024,” says Attar.

The company will also consider an iPhone app to allow end users to achieve impressive results. However, it will not be available “very soon,” if it ever hits the market. The company’s primary focus is selling its core technology services to manufacturers so that its AI can be implemented natively.

Image and caption credits: Glass Imaging