US Man Faces 70 Years in Prison For Creating 13,000 AI Child Abuse Images

A U.S. man has been charged by the FBI for allegedly producing 13,000 sexually explicit and abusive AI images of children using the popular Stable Diffusion model.

Several major players in the artificial intelligence field pledged to protect children online, marking another chapter in the progression of AI safety.

An internet watchdog agency warned that the spread of artificial intelligence (AI) generated child sex abuse images online could get even worse if controls aren't put on the technology that generates deepfake photos.

A sleepy town in Spain has been rocked by an AI image scandal that saw nude pictures of children passed around.

A new report has revealed how child safety investigators are struggling to stop thousands of disturbing artificial intelligence (AI) generated "child sex" images that are being created and shared across the web.

A cache of child sexual abuse photos was allegedly shown to TikTok moderators during training, according to workers who say they were granted insecure access to illegal photos and videos.

Computer scientists at the University of Groningen have created a system to analyze the noise produced by individual cameras to help law enforcement fight child exploitation.

A new report found that Facebook was accidentally elevating harmful content for the past six months instead of suppressing it. A second report found that its internal policies may have resulted in the underreporting of photos of child abuse.

Apple has quietly removed all references to its highly controversial plan to scan iPhone photo libraries for child sexual abuse material (CSAM). The company previously postponed its plans in response to backlash but may abandon them entirely now.

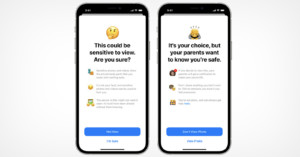

Apple's controversial plan to scan iPhone photo libraries in order to protect children -- a technology that was widely criticized by tech and privacy experts -- has been delayed for at least a few months.

Is Apple actually snooping on your photos? Jefferson Graham wrote an article last week warning of this, based on the company's child safety announcement. An attention-grabbing headline? Certainly. Accurate? It’s complicated.

Just one day after Apple confirmed that it plans to roll out new software that will detect child abuse imagery on iPhones, WhatsApp's head took to Twitter to call out the move as a "surveillance system" that could be abused.

The photos on your iPhone will no longer be private to just you in the fall. The photos are still yours, but Apple’s artificial intelligence is going to be looking through them constantly.

Following a report earlier today, Apple has published a full post that details how it plans to introduce child safety features across three areas: in new tools available to parents, through scanning iPhone and iCloud photos, and in updates to Siri and Search.

Apple is reportedly planning to scan photos that are stored both on iPhones and in its iCloud service for child abuse imagery, which could help law enforcement but also may result in increased government demands for access to user data.

Magnum Photos and prominent photojournalist David Alan Harvey are under scrutiny online today after some of Harvey's photographs labeled as 'Teenage' 'Thai Prostitutes' from 1989 surfaced in the Magnum archives, where users could purchase the images or share them online.

Editor's Note: None of the images in this post are graphic, but the content and captions might be upsetting to some.

The realities revealed by photography are not always of the pleasant variety, because for all of the sunsets and kittens and weddings in the world -- all wonderful and worth capturing -- there is suffering and horror and pain that is just as worthy of our photographic attention.

Photographer Mariella Furrer has spent over a decade of her photographic career focusing on the latter, documenting the stories of the survivors and families of child sexual abuse in South Africa.