Apple Confirms It Will Scan iPhone Photo Libraries to Protect Children


Following a report earlier today, Apple has published a full post detailing how it plans to introduce child safety features in three areas: new tools for parents in Messages, scanning of iPhone and iCloud photos, and updates to Siri and Search.

These features will roll out later this year in iOS 15, iPadOS 15, watchOS 8, and macOS Monterey.

“This program is ambitious, and protecting children is an important responsibility. These efforts will evolve and expand over time,” Apple writes.

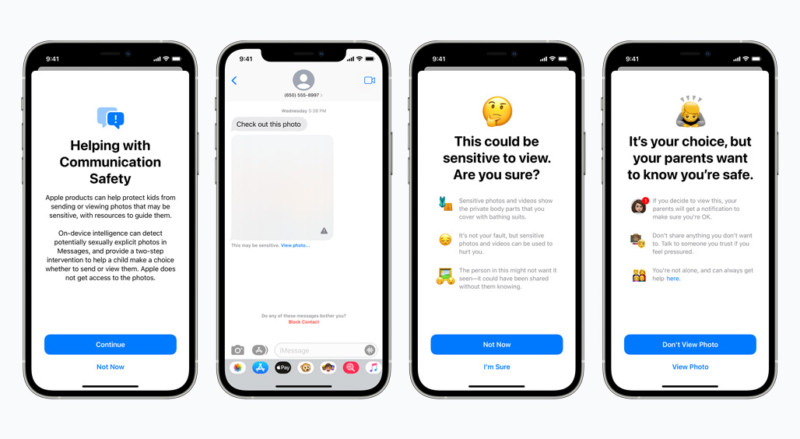

The Messages app will include new notifications that warn children and their parents when a child is about to receive a sexually explicit photo. When such a photo arrives, it will be blurred, and the child will be warned, shown helpful resources, and reassured that it is okay not to view it.

The child will also be told that if they choose to view the photo anyway, their parents will receive a message so they can make sure the child is safe. Apple says similar protections apply if a child attempts to send a sexually explicit photo: the child will be warned before sending it, and parents can receive a message if the child sends it anyway.

“Messages uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit. The feature is designed so that Apple does not get access to the messages,” Apple explains.

Apple also plans to add detection of CSAM, which stands for Child Sexual Abuse Material: content that depicts sexually explicit activities involving a child. The new system will let Apple detect known CSAM images stored in iCloud Photos and report them to the National Center for Missing and Exploited Children (NCMEC).

Apple says that its method of detecting CSAM is “designed with user privacy in mind.” Instead of scanning images in the cloud, the system performs on-device matching against a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple transforms this database into an unreadable set of hashes that is stored securely on users’ devices.
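The article does not say how the hashes themselves are computed. As a rough illustration of what a perceptual hash does, here is a minimal sketch of a difference hash (dHash), a common generic technique swapped in for Apple's own hashing function. It assumes the photo has already been decoded, converted to grayscale, and shrunk to an 8-row by 9-column grid; the names `dhash`, `matches_known`, and the plain `known_hashes` set are hypothetical. In Apple's described design the on-device database is blinded, so unlike this sketch the device cannot read it or see the match result.

```python
# Minimal difference-hash (dHash) sketch: a generic perceptual hash used here as
# a stand-in for Apple's own hashing function (an assumption, not Apple's method).
# Assumes the image is already grayscale and resized to 8 rows x 9 columns.
from typing import List, Set

Grid = List[List[int]]  # 8 rows x 9 columns of 0-255 intensities


def dhash(grid: Grid) -> int:
    """Return a 64-bit hash: one bit per horizontal neighbour comparison."""
    if len(grid) != 8 or any(len(row) != 9 for row in grid):
        raise ValueError("expected an 8x9 grayscale grid")
    bits = 0
    for row in grid:
        for left, right in zip(row, row[1:]):
            # Set the bit when brightness increases from left to right.
            bits = (bits << 1) | (1 if right > left else 0)
    return bits


def matches_known(image_hash: int, known_hashes: Set[int]) -> bool:
    """Exact lookup in a hypothetical, unblinded set of known hashes."""
    return image_hash in known_hashes
```

Because the hash records only whether brightness rises or falls between neighbouring pixels, a uniformly brightened copy of an image produces the same hash, while a mirrored copy generally does not.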

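Before a photo is stored in iCloud Photos, the device runs a matching process for that image against the known CSAM hashes. That process is “powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result.” The device then creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image, and uploads it to iCloud Photos together with the image.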
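Apple does not spell out its protocol in this post, but the general idea of private set intersection can be shown with the textbook Diffie-Hellman-style construction: each party raises its hashed items to a secret exponent, and because exponentiation commutes, only items held by both sides end up with equal double-blinded values. This is a toy sketch, not Apple's scheme; here the client learns the intersection, whereas Apple says its version determines a match without revealing the result to the device. The modulus 2^127 - 1 (a Mersenne prime) and the helper names are illustrative assumptions; real systems use vetted elliptic-curve groups.

```python
# Toy Diffie-Hellman-style private set intersection (PSI). Textbook protocol,
# NOT Apple's construction. Modulus and helper names are illustrative only.
import hashlib
import math
import secrets
from typing import Iterable, List, Set

P = 2**127 - 1  # Mersenne prime; fine for a demo, not a production parameter


def hash_to_group(item: bytes) -> int:
    """Map an item to a nonzero element of Z_P^* via SHA-256."""
    digest = int.from_bytes(hashlib.sha256(item).digest(), "big")
    return digest % (P - 1) + 1


def random_exponent() -> int:
    """Secret exponent coprime to P-1, so x -> x^e mod P is a bijection."""
    while True:
        e = secrets.randbelow(P - 2) + 1
        if math.gcd(e, P - 1) == 1:
            return e


def blind(items: Iterable[bytes], e: int) -> List[int]:
    """Hash each item into the group and raise it to the secret exponent."""
    return [pow(hash_to_group(x), e, P) for x in items]


def reblind(values: Iterable[int], e: int) -> List[int]:
    """Apply a second party's exponent to already-blinded values."""
    return [pow(v, e, P) for v in values]


def psi(client_items: List[bytes], server_items: List[bytes]) -> Set[bytes]:
    # In a real run each party keeps its own exponent private; both are
    # generated here only to simulate the exchange in one process.
    a, b = random_exponent(), random_exponent()
    # Client sends H(c)^a; server returns (H(c)^a)^b in the same order.
    client_double = reblind(blind(client_items, a), b)
    # Server sends H(s)^b; client raises each value to a, giving H(s)^(ab).
    server_double = set(reblind(blind(server_items, b), a))
    # Equal H(.)^(ab) values imply equal hashed items, since both maps are bijections.
    return {c for c, v in zip(client_items, client_double) if v in server_double}
```

For example, `psi([b"x", b"y", b"z"], [b"y", b"w", b"z"])` yields `{b"y", b"z"}`, even though neither side ever sent the other its raw items.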

Apple says it uses another technology, called threshold secret sharing, to ensure that it cannot interpret the contents of the safety vouchers unless the iCloud Photos account crosses a threshold of known CSAM content.
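Threshold secret sharing is a well-established technique, and its classic form is Shamir's scheme: a secret becomes the constant term of a random polynomial of degree t - 1, any t points on that polynomial recover it, and fewer points reveal essentially nothing. The sketch below illustrates that general scheme only; the article does not describe Apple's parameters or encoding, so the prime field, the helper names `split` and `combine`, and the idea of attaching one share to each matching voucher are assumptions. It needs Python 3.8+ for `pow(x, -1, p)`.

```python
# Minimal Shamir secret-sharing sketch over GF(2**127 - 1). Illustrates
# threshold secret sharing in general; not Apple's actual parameters.
import secrets
from typing import List, Tuple

P = 2**127 - 1  # prime field modulus (illustrative choice)
Share = Tuple[int, int]


def split(secret: int, threshold: int, count: int) -> List[Share]:
    """Create `count` shares; any `threshold` of them recover `secret`."""
    if not 0 <= secret < P:
        raise ValueError("secret must be in [0, P)")
    if not 1 <= threshold <= count:
        raise ValueError("need 1 <= threshold <= count")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(P) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # evaluate f(x) with Horner's rule
            y = (y * x + c) % P
        shares.append((x, y))
    return shares


def combine(shares: List[Share]) -> int:
    """Recover f(0) by Lagrange interpolation over the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % P
                den = den * (xi - xj) % P
        secret = (secret + yi * num * pow(den, -1, P)) % P
    return secret
```

With `shares = split(key, threshold=3, count=10)`, any three shares give back `key`, while two shares produce an unrelated number. That mirrors the idea that vouchers stay unreadable until enough of them exist.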

“The threshold is set to provide an extremely high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account,” Apple says.
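The article gives only Apple's headline figure, not the inputs behind it, but the reason a threshold helps can be shown with a binomial tail calculation. Every parameter here is hypothetical: an account uploading 10,000 photos a year, a one-in-a-million chance that any single innocent photo falsely matches, and independent false matches across photos. Under those assumptions, flagging on a single match would wrongly flag roughly 1% of such accounts per year, while requiring 10 matches pushes the estimate down to the order of 10^-27.

```python
# Worked arithmetic: how a match threshold shrinks the chance of a false flag.
# All inputs are hypothetical; assumes false matches are independent per photo.
import math


def prob_at_least(t: int, n: int, p: float) -> float:
    """P(X >= t) for X ~ Binomial(n, p), with 0 < p < 1.

    Each term is computed in log space (via lgamma) so that huge binomial
    coefficients never overflow a float.
    """
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for k in range(t, n + 1):
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * log_p + (n - k) * log_q)
        total += math.exp(log_term)  # negligible terms underflow harmlessly to 0.0
    return total


photos_per_year = 10_000      # hypothetical
false_match_rate = 1e-6       # hypothetical per-photo rate
for threshold in (1, 5, 10):
    print(threshold, prob_at_least(threshold, photos_per_year, false_match_rate))
```

Each extra required match multiplies the tail by roughly n·p / k, which is why even a modest threshold drives the estimate down by many orders of magnitude.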

Only once that threshold is exceeded can Apple interpret the contents of the associated vouchers, and the company will then manually review each report to confirm whether there is a match. If a match is confirmed, Apple will disable the user’s account and send a report to NCMEC. Users who believe their account was flagged by mistake can appeal to have it reinstated.

Apple is also expanding guidance in Siri and Search, providing additional resources to help children and parents stay safe and get help in unsafe situations.

Spent the day trying to figure out if the Apple news is more benign than I thought it was, and nope. It’s definitely worse.

— Matthew Green (@matthew_d_green) August 5, 2021

When reports first emerged that Apple was planning to scan users’ iPhones and iCloud accounts, Johns Hopkins University professor and cryptographer Matthew Green raised concerns about the implementation.

“This is a really bad idea,” he wrote. “These tools will allow Apple to scan your iPhone photos for photos that match a specific perceptual hash, and report them to Apple servers if too many appear. Initially I understand this will be used to perform client side scanning for cloud-stored photos. Eventually it could be a key ingredient in adding surveillance to encrypted messaging systems.”

The rest of his concerns can be read in PetaPixel’s original coverage.