AI Empowers Fake Photos and Disinformation in Ways Photoshop Never Could


Since digital image editing tools like Adobe Photoshop hit the scene in the late 1980s, there have been heightened concerns about whether photos can be trusted. Pointing to this long-standing worry has become an increasingly common refrain among artificial intelligence’s most ardent defenders, but it isn’t a sound defense against criticisms of AI.

Fake Photos Are Much Older Than Photoshop and Even Older Than AI

“Fake photos” have been a general issue in society since photography itself was invented in the 19th century. Charlatans, scammers, and liars have used doctored photographs to peddle myths and falsehoods for a very long time, much longer than Photoshop or even computers have existed.

The resulting worries about truth and the skepticism surrounding photos increase with each innovation that makes creating deceptive images easier. Broadly, when something new and disruptive hits the scene, it’s often met with a mix of excitement, skepticism, and concern. This cycle isn’t unique to photography and is certainly not exclusive to Photoshop or artificial intelligence.

However, the hand-waving that appears with increasing regularity whenever someone raises concerns about how easily people can create convincing, photorealistic fake photos with generative AI is, at best, silly. At worst, it’s a dangerous conflation that seeks to discredit genuine concerns about the safety of AI and the hazards it poses to society.


The Hazardous Conflation of AI-Generated Disinformation and Digital Photo Editing

One basic argument goes like this: If people were worried 30-plus years ago that Photoshop would make it impossible to trust photos, and society hasn’t collapsed, then the same concerns lobbed at generative AI deserve less consideration. It’s a sort of “been there, done that” attitude toward fears about AI.

Another is: Disinformation through photography isn’t new with generative AI. It has been possible to create fake photos since Photoshop was invented.

And, admittedly, both arguments have some appeal. The core concern, whether an image is genuine, is as old as photography itself. There’s no question people have been able to create misleading photos for a very long time. Further, Photoshop clearly made faking photos easier than it had been, and it has only made the practice more accessible as the software has evolved, version after version, year after year. Anyone with a bit of time on their hands, a good understanding of light, color, and tone, and a sinister motive can do damage by manipulating photos.

However, drawing a meaningful equivalence between Photoshop and generative AI on the basis of either argument is, in my view, absurd. There are substantive differences between creating disinformation in Photoshop and creating it with generative AI, and those differences make the potential scale of damage so different that the two are hardly on the same planet, let alone in the same ballpark.

There’s a Vital Difference in Scale and Ease of Disinformation

There’s no barrier to entry for creating anything you want with generative AI. With Photoshopping, as it’s generally understood, there are at least three: skill, time, and money. If one is so inclined, there are ways around the last one, but the first two still stand.

Creating a photorealistic “fake” photo in Photoshop, without using the Firefly AI tools now featured in the software, requires existing photographic assets, sophisticated cutting and masking, and the skills to put everything together in a way that doesn’t raise suspicion. A bad Photoshop job, which won’t convince anyone of anything, still takes a lot more time to do than typing a text prompt into an AI image generator. A good Photoshop job takes even longer.

The time required is an inherent barrier to using Photoshop for disinformation campaigns, malicious or not, and it has a dramatic impact on scale. Give 100 people AI image generators and imagine how many images they can create in a minute with no skill at all. What can 100 talented digital artists create from scratch in a minute? Nothing, or next to nothing.

While generative AI still isn’t perfect, it is well past the point where it can create, in seconds, a photorealistic image out of thin air that will fool a lot of people, especially at the small sizes at which most content is viewed on social media.


But no, the ability to create a fake image isn’t new. AI didn’t invent the concept of deceptive photos, but it has transformed it in a way that Photoshop never did, and it has arrived at a time when spreading content, nearly unchecked, is faster and easier than ever.

That said, it’s still important to acknowledge Photoshop’s role in spreading and then strengthening a general lack of trust in photos. The seeds of doubt were planted long before artificial intelligence hit the scene. Photoshop popularized the idea that what you “see” might not be “real” or “true.”

Still, for most of Photoshop’s life, fake photos were generally an aberration.

They aren’t anymore, and that has nothing to do with Photoshop and everything to do with AI image generators, the way they are created, the inability or unwillingness of anyone to regulate them, the ease with which they can be used to foment deception, and how images are shared and consumed at large.

Proliferation of AI Technology Comes at a Time When People Don’t Trust Media

I’d be remiss not to address not only how images are created and shared but also who shares them, because that matters a great deal to any discussion of the dangers of AI and fake photos.

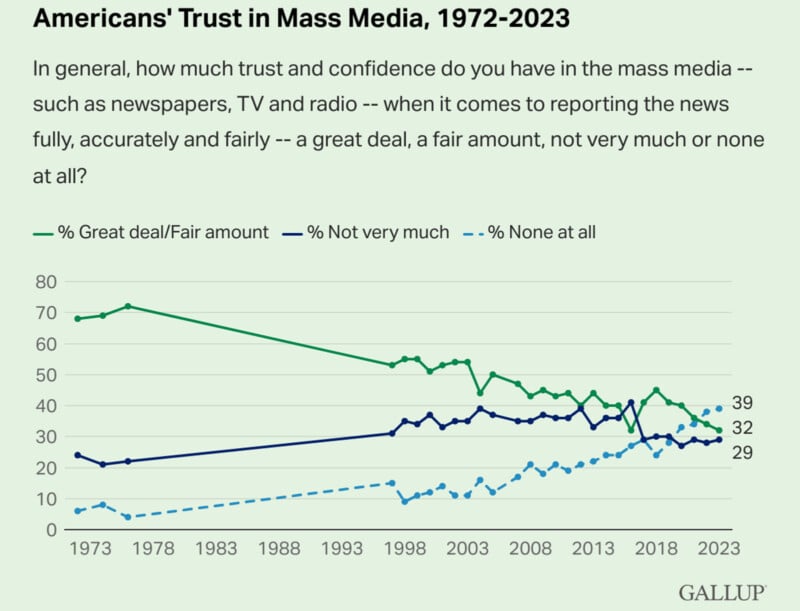

Americans’ trust in mass media has been declining steadily for the past 50 years, a slide that began decades before Photoshop became the household name it is today. In 1976, 72% of Americans trusted the media, compared with just 4% who didn’t trust it at all.

In 2023, the share who didn’t trust the media at all had risen to 39%, while those who trusted it either “a great deal” or “a fair amount” had fallen to a dismal 32%. Another 29% said they didn’t trust the media very much.

This matters because established media organizations are bound by standards of integrity. They can’t publish a fake photo as easily as a nameless user on X (formerly Twitter) can, and they certainly can’t do so without consequences.

The relatively few photojournalists who have run afoul of these standards over the years have, by and large, been quickly blacklisted and ejected from the world of news. People’s careers have been destroyed by photo edits that are benign by today’s standards.

A nameless person who can generate a fake image as fast as they can type out the nonsense they want to create and share might, at most, see their misleading post flagged with a “misinformation” label or, in extreme cases, have their account banned. On the rare occasions that happens, it is of little consequence.

If people didn’t have such deep-seated distrust in media, it wouldn’t matter much what Larry Liarson or Debbie Deception posts on social media. But that’s not the world we live in and not the world AI was born into.


Just Because the Fears Sound the Same Doesn’t Mean the Dangers Are Identical

In some ways, the democratization of news and information has been a boon for society at large. Some valuable stories have only gotten out into the world because it’s easier for people to share information than ever before. But the sword cuts both ways.

Just because fear of change is essentially universal to the human condition doesn’t mean that anxieties about AI are unfounded. But I get it; it can feel like fear-mongering. It can be tiresome and repetitive.

“Oh, we’ve heard this story before,” right?

At its most basic level, the story may be the same, but the way Photoshop and generative AI tell it is different in significant ways and at vastly different scales.

Photos used to be considered accurate representations of reality unless there was some reason to doubt them, even though fake images have existed for 150-plus years. Having “photographic evidence” meant something, mainly because most photos were authentic, and creating false, misleading images took time, effort, and ability.

Photoshop absolutely weakened people’s belief in photographic truth; it opened the door to the idea that what you see, even if it looks real, might be a lie. That door had been cracked open long before Photoshop arrived, by the way.

Now AI has arrived, kicked that door open, knocked down the surrounding walls, burst all the pipes, smashed everything, and blown the building up. The door isn’t just open. It’s gone. There is no door, and the long-weakened barrier between truth and fiction in photography has been destroyed. Photos will never have the authority they once had.

The onus is now on someone to prove that a photo is real. “It looks real” holds little weight anymore; the default view for most people is that they can’t trust what they see. Unfortunately, establishing that a photo is real takes a hell of a lot more effort than creating a fake one with AI and sharing it on social media. Worse yet, that post somehow carries more credibility than a newspaper. That wasn’t the case when Photoshop was released.

Image credits: Header photo created using assets licensed via Depositphotos.