AiSee is a Discreet Wearable That Tells Blind People What They’re Holding

Developed by researchers at the National University of Singapore (NUS), AiSee is a discreet wearable that pairs a 13-megapixel camera with bone conduction earphones. It identifies objects the wearer is holding and is meant to be especially useful for blind grocery shoppers.

The Augmented Human Lab is a group of researchers that explores different ways to lower the barriers between technology and humans for the purpose of improving lives. Its members work on developing novel interfaces and interactions that are seamless for a user and can provide, for example, enhanced perception.

That’s where AiSee comes in: it is designed to make life easier for blind people, who are often challenged by activities that sighted people would consider mundane. The current testing round is being led by researchers from the National University of Singapore.

“Accessing visual information in a mobile context is a major challenge for the blind as they face numerous difficulties with existing state-of-the-art technologies including problems with accuracy, mobility, efficiency, cost, and more importantly social exclusion,” the Augmented Human Lab explains.

“The Project AiSee aims to create an assistive device that sustainably change how the visually impaired community can independently access information on the go. It is a discreet and reliable bone conduction headphone with an integrated small camera, that uses Artificial Intelligence, to extract and describe surrounding information when needed. The user simply needs to point and listen.”

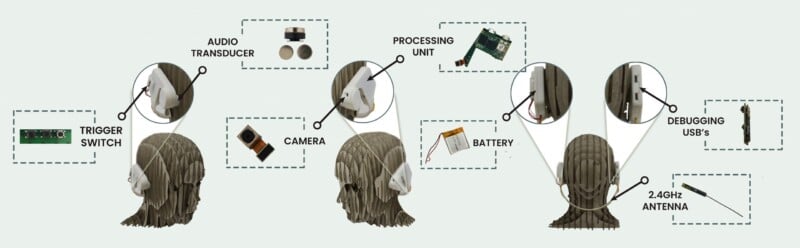

As reported by New Atlas, AiSee consists of two bone conduction earphones connected by a band that goes around the back of the wearer’s neck. Bone conduction earphones differ from traditional earbuds or headphones in that they send sound vibrations through the bones of the wearer’s skull rather than through the eardrum, so the wearer can hear without plugging the ear canal. One of the earphones carries a 13-megapixel front-facing camera that captures what would be in the wearer’s field of view, while the other has a touchpad for control. The battery and wireless connection systems are located on the band at the rear of the device.

“With AiSee, our aim is to empower users with more natural interaction. By following a human-centered design process, we found reasons to question the typical approach of using glasses augmented with a camera. People with visual impairment may be reluctant to wear glasses to avoid stigmatization. Therefore, we are proposing an alternative hardware that incorporates a discreet bone conduction headphone,” lead researcher of Project AiSee Associate Professor Suranga Nanayakkara, who is from the Department of Information Systems and Analytics at NUS Computing, says.

When a user picks up an item, the camera takes a photo of it and sends the image to the cloud for real-time analysis. There, an AI-based algorithm identifies the object and transcribes any text written on it. The result is then sent back to the device and read out to the wearer through the earphones.
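The flow described above (capture, cloud analysis, spoken result) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not AiSee's actual software: the `Analysis` type, `describe_item`, and `fake_cloud_analyze` are hypothetical names, and the cloud service is replaced by a local stand-in that returns a fixed answer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Analysis:
    """Hypothetical result returned by the cloud service."""
    label: str  # the identified object
    text: str   # text transcribed from the object; empty if none was found


def describe_item(
    photo: bytes,
    analyze: Callable[[bytes], Analysis],
    speak: Callable[[str], None],
) -> str:
    """Send a photo for analysis and pass the spoken description to `speak`."""
    result = analyze(photo)
    message = result.label
    if result.text:
        message += f". Text on the item: {result.text}"
    speak(message)
    return message


def fake_cloud_analyze(photo: bytes) -> Analysis:
    # Stand-in for the real cloud call (an assumption): always returns one answer.
    return Analysis(label="cereal box", text="Whole Grain Oats")


# Collect what would be played through the earphones in a list.
spoken: list[str] = []
describe_item(b"\x00", fake_cloud_analyze, spoken.append)
```

Passing the analysis and speech steps in as functions keeps the sketch independent of any particular cloud provider or audio hardware, neither of which the researchers have detailed.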

AiSee is the latest of several attempts by various organizations to make daily life easier for people with visual impairments. Earlier this week, for example, Google revealed a feature for the Pixel 8 smartphone that helps blind or visually impaired users take better selfies.

The research team from the National University of Singapore is currently in discussions with SG Enable in Singapore to conduct user testing that will help refine and improve AiSee’s features and performance.

Image credits: National University of Singapore