Meta Sees Sharp Rise in AI-Generated Fake Profile Photos

Meta, the parent company of Facebook, is seeing a “rapid rise” in fake profile photos generated by artificial intelligence (AI).

As AI technology such as generative adversarial networks (GANs) becomes more widely available, anyone can create a lifelike fake face in a matter of seconds.


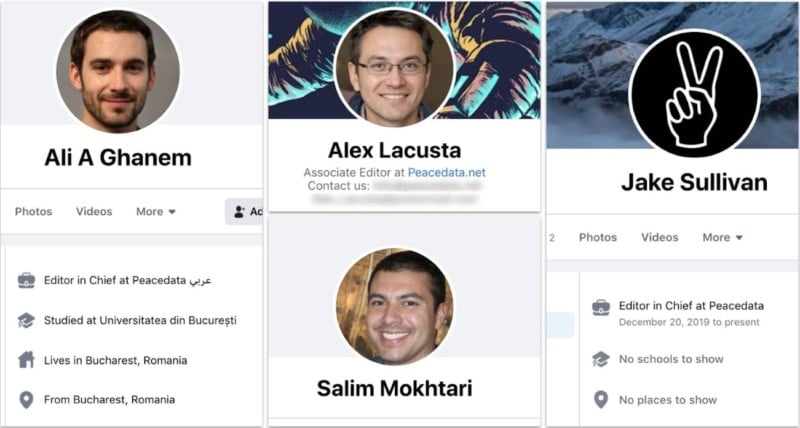

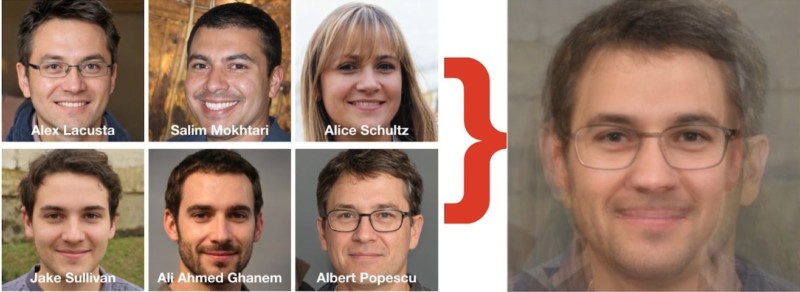

According to a Meta report published on Thursday, more than two-thirds of the threat-actor networks it found and took down this year featured accounts whose profile pictures were likely generated by AI software.

A threat actor, also known as a malicious actor, is any person or organization that causes harm or compromises security in the digital sphere.

“More than two-thirds of all the [threat actor] networks we disrupted this year featured accounts that likely had GAN-generated profile pictures, suggesting that threat actors may see it as a way to make their fake accounts look more authentic and original,” Meta writes in the report.

Easier Than Stealing a Real Photo

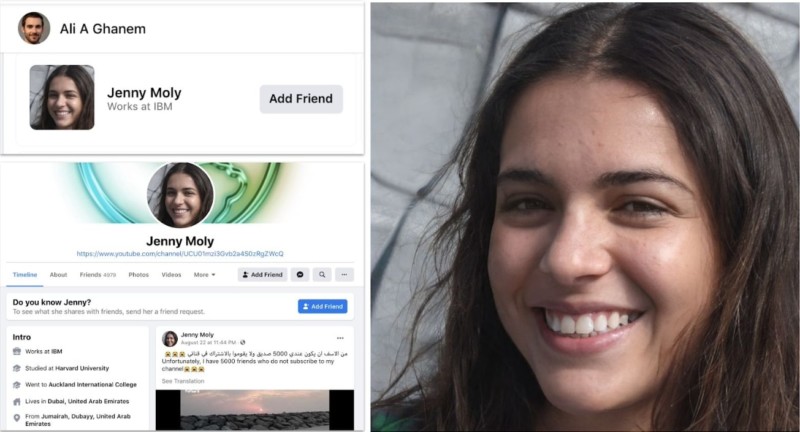

According to a recently published study, AI-generated faces have become so realistic that people guess correctly only about 50 percent of the time whether a face is real or fake, which is no better than a coin flip.

“It looks like these threat actors are thinking, this is a better and better way to hide,” Ben Nimmo, who leads global threat intelligence at Meta, tells NPR.

“That’s because it’s easy to just go online and download a fake face, instead of stealing a photo or an entire account.”

“They’ve probably thought…it’s a person who doesn’t exist, and therefore there’s nobody who’s going to complain about it and people won’t be able to find it the same way,” Nimmo says.

Telltale Signs

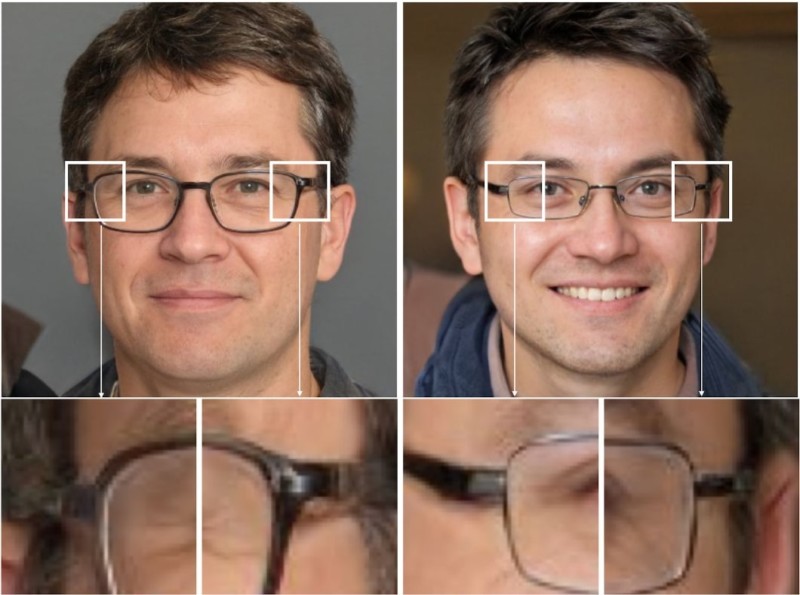

However, according to Nimmo, AI-generated profile pictures often carry telltale signs that people can learn to recognize. These include oddities in the ears and hair, the alignment of the eyes, and unusual clothing or backgrounds.

“The human eyeball is an amazing thing,” Nimmo tells NPR. “Once you look at 200 or 300 of these profile pictures that are generated by artificial intelligence, your eyeballs start to spot them.”

This has made it easier for trained investigators at Meta and other companies to spot these AI-generated profile photos.

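Investigators also “look at a combination of behavioral signals” across accounts, not just at the images themselves. This is an advance over relying on reverse-image searches, which can catch profiles that use stolen or stock photos. A GAN-generated face does not appear anywhere else online, however, so a reverse-image search turns up no match.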

CBS News reports that more than 100 countries have been the target of what Meta refers to as “coordinated inauthentic behavior” (CIB) since 2017.

Meta says the term refers to “coordinated efforts to manipulate public debate for a strategic goal where fake accounts are central to the operation.”

According to Meta’s report, “the United States was the most targeted country by global [CIB] operations we’ve disrupted over the years, followed by Ukraine and the United Kingdom.”

Russia was the most “prolific” source of coordinated inauthentic behavior, with 34 networks originating from the country. Iran (29 networks) and Mexico (13 networks) also ranked among the top sources.