Instagram Now Hides ‘Inappropriate’ Photos from Explore and Hashtags


If you’re a photographer who posts edgy or risqué photos to your Instagram account, you may soon see your reach and view counts drop sharply. The photo-sharing service just announced that it is demoting inappropriate content even when that content doesn’t violate its Community Guidelines.

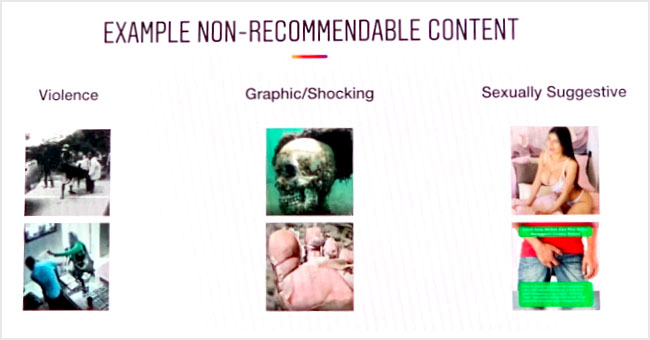

Under the new policy, a photo that is “sexually suggestive” but doesn’t actually depict nudity or a sex act can be demoted and hidden from Explore and Hashtags, so the broader Instagram community won’t stumble upon it.

Likewise, photos and videos that are violent, graphic, shocking, lewd, hurtful, spammy, misleading, or in bad taste may now get no public exposure beyond your own followers.

Instagram has developed machine learning algorithms to determine whether photos and videos are Recommendable (i.e., eligible to appear on Explore and hashtag pages), and it is training content moderators to label borderline content. Non-Recommendable content is now filtered out of those surfaces, while Violating content will continue to be removed from Instagram entirely.

If you regularly post “Non-Recommendable” photos, they’ll still be visible to your followers in the feed and in your Stories, but you’ll lose the broad reach you may once have enjoyed. On Facebook, this kind of “borderline content” may also be ranked much lower in people’s News Feed, a treatment that could be extended to Instagram’s feed algorithm in the future.
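The three tiers described above can be summarized as a simple mapping from label to the places a post can still appear. The minimal Python sketch below encodes only what the article states. The `Label` enum, the surface names, and `visible_surfaces` are hypothetical illustrations. They are not Instagram's actual code or API.

```python
from enum import Enum


class Label(Enum):
    """Hypothetical labels mirroring the three tiers named in the article."""
    RECOMMENDABLE = "recommendable"
    NON_RECOMMENDABLE = "non_recommendable"
    VIOLATING = "violating"


# Surfaces mentioned in the article; names are illustrative assumptions.
ALL_SURFACES = frozenset({"feed", "stories", "explore", "hashtags"})
FOLLOWER_SURFACES = frozenset({"feed", "stories"})


def visible_surfaces(label: Label) -> set:
    """Return where a post with this label could appear, per the article's description."""
    if label is Label.VIOLATING:
        # Violating content is removed from Instagram entirely.
        return set()
    if label is Label.NON_RECOMMENDABLE:
        # Still shown to followers, but filtered out of Explore and hashtag pages.
        return set(FOLLOWER_SURFACES)
    # Recommendable content is eligible for every surface.
    return set(ALL_SURFACES)


if __name__ == "__main__":
    for label in Label:
        print(label.value, sorted(visible_surfaces(label)))
```

The real system relies on machine learning models and trained moderators to assign the label. This sketch only models what happens after a label has been assigned.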