Deepfakes Can Be Detected by Borrowing a Method From Astronomy

AI images and deepfakes can be detected by borrowing a technique from astronomy.


OpenAI has launched a deepfake detector that it says can identify images generated by its DALL-E model 98.8 percent of the time, but for now it flags only five to 10 percent of images from competing generators.

India's Election Commission has urged all political parties to avoid using AI-generated deepfakes in the 2024 election.

Deepfakes of celebrities such as Taylor Swift, Ice Spice, Drake, and even the late Queen Elizabeth are teaching math to kids in viral TikTok videos.

The sexually explicit AI images of Taylor Swift that recently went viral have been traced back to the anonymous message board 4chan.

After fake explicit photos of pop megastar Taylor Swift were reportedly viewed by millions of people, politicians now have a new reason to take up the fight against deepfakes.

Artificial intelligence (AI) apps that generate nudes of women from images of them fully clothed are on the rise, according to an alarming report.

Minnesota has advanced a bill to criminalize the sharing of sexual and political deepfakes, as lawmakers across the U.S. rush to respond to the rise of artificial intelligence (AI).

The U.S. government is planning to use deepfake videos in online propaganda and deception campaigns.

Last August, artificial intelligence (AI) company Flawless said it was able to reduce the number of profanities spoken in a movie using deepfake technology. Now it has shown what that process looks like and how it can even seamlessly alter spoken language.

In an eerily realistic video, a deepfake of Meta CEO Mark Zuckerberg thanked Congressional Democrats for failing to take action against the biggest tech companies.

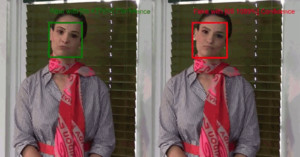

A side-by-side video of Scarlett Johansson and Elizabeth Olsen challenges viewers to determine which actress is real and which is a deepfake.

Until now, AI portraits of people who don't exist have been limited to headshots. But a company has now created 100,000 fake humans that have bodies too.

Companies are using photos of fake staff that are generated by artificial intelligence (AI) programs. The pictures are published under the "About Us" section of a company's website to give a false impression of a larger workforce.

Filmmakers used deepfake technology to visually dub the new action-thriller Fall after they were asked to remove the profanities from the film but lacked the budget to reshoot scenes.

Deepfakes are a problem. We need to talk about the growing amount of fake content on the internet and how some companies are trying to shift some of the blame for what they created onto our shoulders. For more, read this week's Clipped Highlights!

The FBI issued a warning that a rising number of scammers are using deepfake technology to impersonate job candidates during interviews for remote positions.

A series of alarmingly convincing videos of what appears to be Margot Robbie have surfaced online. The deepfakes look so much like the real actress that many users have been fooled into believing it is actually her.

Cyber scammers have published fake videos of Elon Musk on YouTube to defraud unsuspecting victims, and the video platform has been criticized for failing to tackle the problem.

D-ID, the company whose tech powers the MyHeritage app, has demonstrated a new use for its technology. Called "Speaking Portrait," it animates any photo with uncanny realism and can make it say whatever the user wants.

A team of researchers has launched a new initiative, with open-source code available, to better detect deepfakes that have been edited to remove watermarks, with the goal of curbing the spread of misinformation.

Earlier this year, Hour One debuted its technology for creating fully digital, photorealistic, AI-powered clones for the purposes of content creation, but this week the company is showing another practical use case: in-office digital receptionists.

Update on 3/05/2021: The creator of the deeptomcruise account today revealed that it was a stunt. Masterminded by visual effects expert Chris Ume, the goal was to draw attention to deepfakes and petition for their regulation.

Rosebud.Ai, a company that wants to "disrupt media creation," has created a service called TokkingHeads that will take any still image and turn it into a moving, talking avatar using artificial intelligence.

Hour One describes itself as a "video transformation company" that wants to replace cameras with code, and its latest creation is the ability for anyone to create a fully digital clone of themselves that can appear to speak "on camera" without a camera or audio inputs at all.

Multiple companies, including Microsoft and Facebook, as well as researchers from the University of Southern California, have developed technologies to combat deepfakes and curb the spread of false media and misinformation. A group of scientists has still managed to fool them, however.

Face tuning apps have thrived for years in the mobile phone ecosystem, allowing users to make subtle (and sometimes not so subtle) changes to their appearance for a selfie-obsessed generation.

Earlier today, Microsoft announced two new tools that will help identify manipulated photos and videos. The first is a metadata-based system, and the second is a "Video Authenticator" that will analyze photos and videos and provide a "confidence score" that tells you whether or not the media has been altered by AI.

Some speculate that fake news could cost the economy $39 billion a year overall. That is quite a market for a savvy tech startup to grab, even at 1%! But while fake news, and deepfakes in particular, have been accused of wreaking havoc on minds and the economy, surprisingly few companies offer tools to combat them.

Following hot on the heels of Instagram's new (and at times controversial) "False Information" warning, Twitter has just announced its own policy around labeling and warning users about photos and videos that have been "deceptively altered" and manipulated.