I Fed AI Videos into a Deepfake Detector to See How Well it Can Identify Frauds

A security firm has released a deepfake detection tool designed to help determine whether a piece of content is AI-generated.

With so much fake media flooding the internet, there is a real need for a detection tool that can accurately determine if something is synthetic or not. So far, such a tool does not exist.

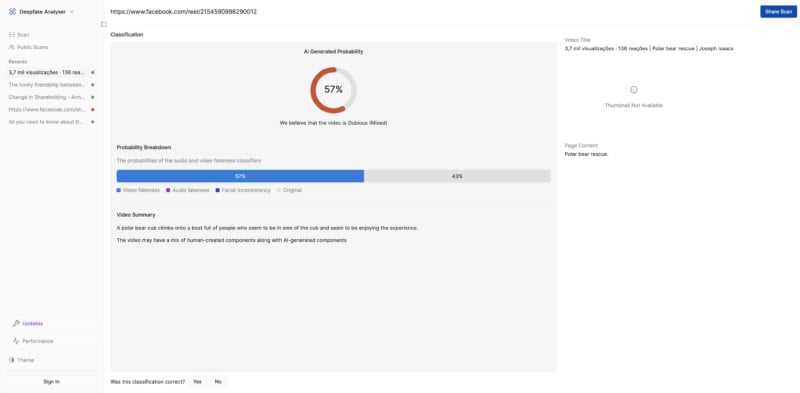

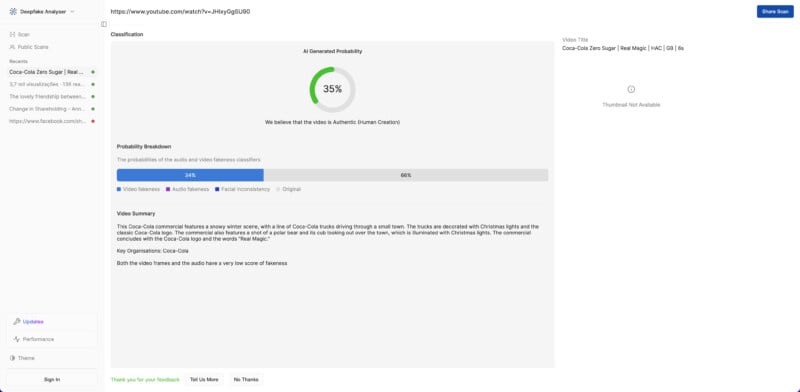

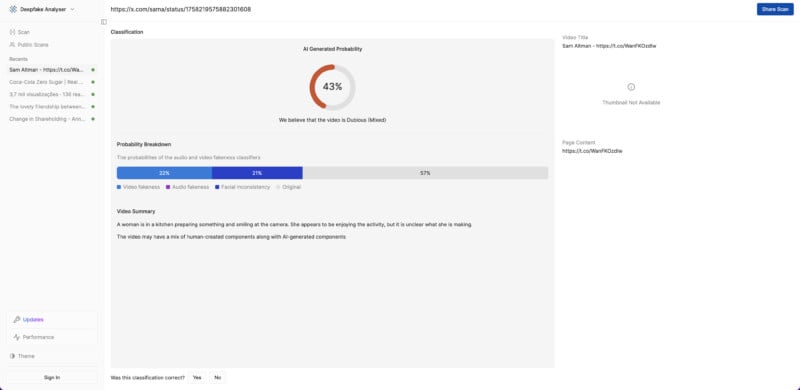

Step forward CloudSEK, an AI-based cybersecurity company that has launched its Deepfake Analyser tool. CloudSEK’s detection tool assesses the authenticity of video frames, searching for inconsistencies that could indicate that AI tools were used.

CloudSEK appears to have put some effort into the tool, so I put it to the test to see if it could do a good job. The detector returns a percentage score: 70 percent or above means the content is likely AI-generated, 40 percent up to 70 percent is dubious, and anything below 40 percent is likely human-made.
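For anyone who wants to apply the same bands to their own results, here is a minimal sketch of that scoring logic in Python. The function name `classify_score` and the verdict strings are my own invention for illustration; this is not CloudSEK's code or API, and it assumes the score arrives as a number between 0 and 100.

```python
def classify_score(score: float) -> str:
    """Map a detector score (0-100) to the three verdict bands.

    Hypothetical helper: the name and return strings are assumptions,
    only the 40 and 70 percent cut-offs come from the article.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 70:
        return "AI-generated"       # 70 percent or above
    if score >= 40:
        return "dubious"            # 40 percent up to (not including) 70
    return "likely human-made"      # anything below 40 percent


# Illustrative scores only, not results from my test
for example in (85, 55, 12):
    print(example, classify_score(example))
```

Note that a score of exactly 70 lands in the AI-generated band, since the cut-off is "70 percent or above".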

First off, I tried a recent AI viral phenomenon: a polar bear cub “being rescued” by fishermen in the Arctic.


Next up, I fed another AI sensation from this week: Coca-Cola’s recreation of its iconic Christmas ad. The soft drinks giant controversially commissioned an AI reimagining of its festive ad featuring big trucks and Santa Claus. But could CloudSEK’s tool tell if it was AI?

Finally, I fed an AI video of a cook made by OpenAI’s Sora into the deepfake detector to see how well it did.

Video source: Sam Altman (@sama), February 15, 2024, https://t.co/rmk9zI0oqO pic.twitter.com/WanFKOzdIw

Conclusion

Overall, CloudSEK’s deepfake detector performed admirably, albeit in a brief test. The Coca-Cola ad was always going to be a tough one, given that it was made for one of the biggest companies on the planet. But casting doubt on the other two is a job well done.

Perhaps this could be a useful tool for the less media-savvy among us.