IBM and NASA Create Open-Source AI Model for Analyzing Satellite Data

IBM and NASA have teamed up to develop an open-source geospatial foundation model that will enable researchers and scientists to use artificial intelligence (AI) to track the effects of climate change, monitor deforestation, predict crop yields, and analyze greenhouse gas emissions.

Although NASA is best known for space exploration, it also runs many Earth science missions. NASA expects its Earth science missions to produce about 250,000 terabytes of data in 2024.

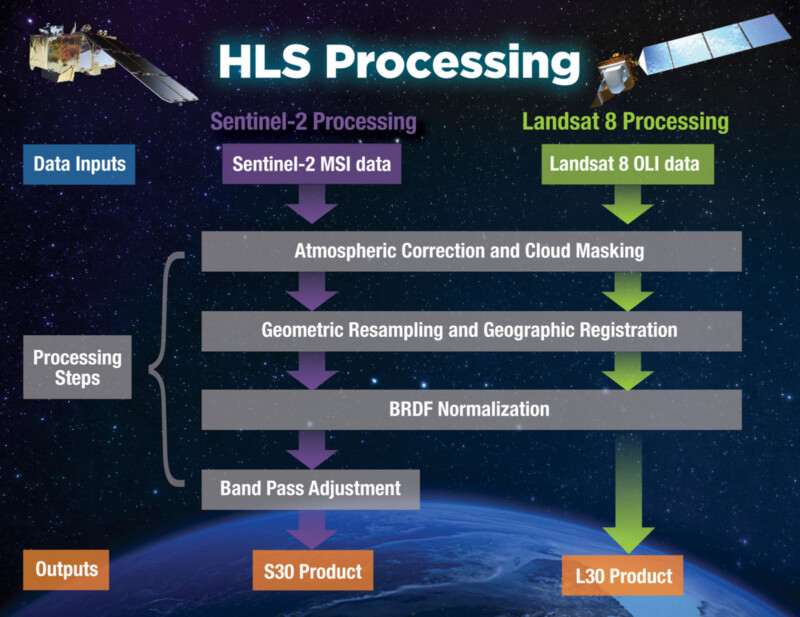

NASA’s Harmonized Landsat and Sentinel-2 (HLS) project combines optical imagery of Earth’s land and coastal regions from the Landsat satellites and the European Space Agency’s (ESA) Sentinel-2 satellites into a single, consistent dataset. The HLS dataset delivers a full view of Earth every two to three days at a resolution of 30 meters per pixel. While that resolution is too coarse to identify individual trees, it can reveal large-scale changes.

As Engadget reports, NASA’s Interagency Implementation and Advanced Concepts Team (IMPACT) was closely involved in the work. IMPACT is a core part of NASA’s Earth Science Data Systems (ESDS) Program and is central to partnerships like this one between NASA and IBM. IMPACT is also at the forefront of using technologies such as AI to make data more valuable and impactful.

“AI foundation models for Earth observations present enormous potential to address intricate scientific problems and expedite the broader deployment of AI across diverse applications,” says Dr. Rahul Ramachandran, IMPACT manager and a senior research scientist at Marshall Space Flight Center in Huntsville, Alabama. “We call on the Earth science and applications communities to evaluate this initial HLS foundation model for a variety of uses and share feedback on its merits and drawbacks.”

IBM used its cloud platforms, including watsonx, to train AI models on NASA’s HLS data. Watsonx is an AI and data platform “built for business,” but IBM emphasizes the importance of sharing scientific data with everyone.

“Climate change poses numerous risks. The need to understand quickly and clearly how Earth’s landscape is changing is one reason IBM set out six months ago in a collaboration with NASA to build an AI model that could speed up the analysis of satellite images and boost scientific discovery. Another motivator was the desire to make nearly 250,000 terabytes of NASA mission data accessible to more people,” explains IBM.

In the spirit of openness, the foundation model and NASA’s satellite data are available on the Hugging Face platform, where the model is the largest geospatial AI foundation model.

“AI remains a science-driven field, and science can only progress through information sharing and collaboration,” says Jeff Boudier, head of product and growth at Hugging Face. “This is why open-source AI and the open release of models and datasets are so fundamental to the continued progress of AI, and making sure the technology will benefit as many people as possible.”

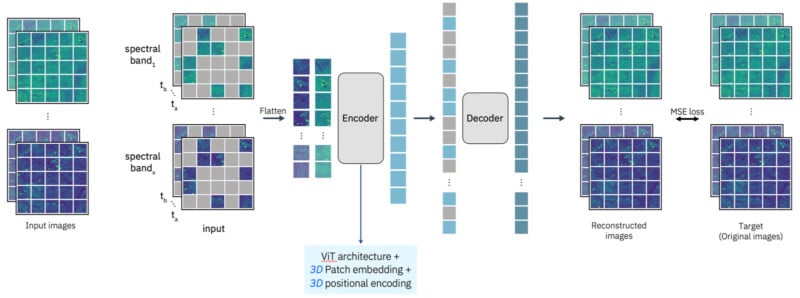

On Hugging Face, the HLS geospatial foundation model family is called “Prithvi.” NASA describes Prithvi as a “first-of-its-kind temporal Vision transformer pre-trained by the NASA and IBM team on contiguous U.S. HLS data.”

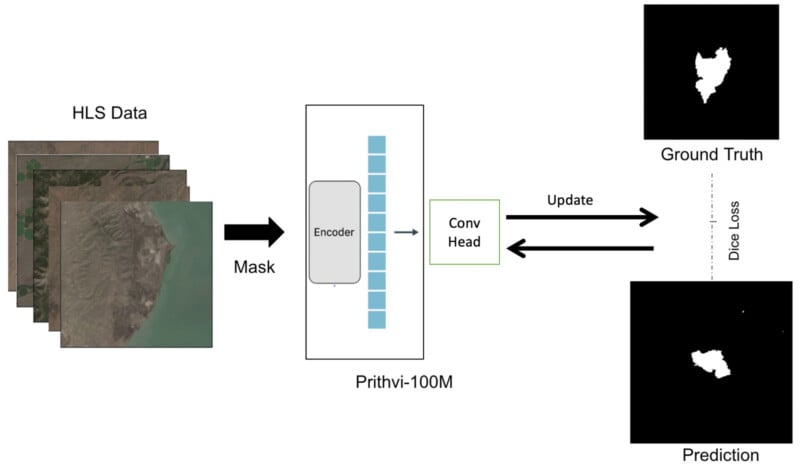

IBM has fine-tuned the model so users can map the extent of floods and wildfires in the United States, which may help scientists predict future risk areas. With additional fine-tuning, the model could be adapted to a wide range of other tasks.

“Underpinning all foundation models is the transformer, an AI architecture that can turn heaps of raw data — text, audio, or in this case, satellite images — into a compressed representation that captures the data’s basic structure. From this scaffold of knowledge, a foundation model can be tailored to a wide variety of tasks with some extra labeled data and tuning,” IBM explains.
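To make the idea of feeding satellite images to a transformer a little more concrete, here is a minimal Python sketch of a common first step in vision transformers: cutting a multi-date, multi-band image stack into fixed-size patches ("tokens"). The function name `patchify`, the toy shapes, and the patch size are illustrative assumptions, not Prithvi's actual code; a real model would follow this step with a learned projection and transformer layers, which the sketch omits.

```python
from typing import List

# Pixel cube indexed as cube[t][band][row][col]:
# t = acquisition date, band = spectral band (e.g. red, near-infrared).
Cube = List[List[List[List[float]]]]


def patchify(cube: Cube, patch: int) -> List[List[float]]:
    """Split an image time series into flattened space-time tokens.

    Each token holds every band's pixels for one patch x patch tile on one
    date, so T dates of H x W pixels yield T * (H / patch) * (W / patch)
    tokens, each of length bands * patch * patch.
    """
    n_time = len(cube)
    n_bands = len(cube[0])
    height = len(cube[0][0])
    width = len(cube[0][0][0])
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")

    tokens = []
    for t in range(n_time):
        for top in range(0, height, patch):
            for left in range(0, width, patch):
                # Gather all bands of this tile into one flat vector.
                token = [
                    cube[t][b][top + r][left + c]
                    for b in range(n_bands)
                    for r in range(patch)
                    for c in range(patch)
                ]
                tokens.append(token)
    return tokens


if __name__ == "__main__":
    # Toy example: 3 dates, 6 bands, 4 x 4 pixels, 2 x 2 patches.
    T, B, H, W, P = 3, 6, 4, 4, 2
    cube = [[[[float(t * 1000 + b * 100 + r * 10 + c) for c in range(W)]
              for r in range(H)] for b in range(B)] for t in range(T)]
    tokens = patchify(cube, P)
    print(len(tokens), len(tokens[0]))  # 12 tokens of 24 values each
```

At a more realistic (but still assumed) scale of 3 dates of 224 x 224 pixels with 16 x 16 patches, the same function would produce 3 x 14 x 14 = 588 tokens, each covering about 480 m x 480 m of ground at HLS's 30-meter resolution.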

Traditionally, analyzing satellite data has required people to annotate features in the data by hand, which takes significant time and effort. Foundation models reduce this burden by first learning the underlying structure of the data from unlabeled imagery. With fine-tuning on a smaller set of labeled examples, they can then pick up on very specific features.
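One widely used way to learn structure from imagery without human labels is masked autoencoding: hide most of an image's patch tokens and train a model to reconstruct them from the rest. The article does not describe the training recipe, so treat this as an illustrative assumption. The helper names `random_mask` and `reconstruction_loss` and the 75% mask ratio are hypothetical choices for this sketch, and the encoder and decoder networks themselves are omitted.

```python
import random
from typing import List, Tuple


def random_mask(num_tokens: int, mask_ratio: float,
                seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly split token indices into visible and masked sets.

    In masked-autoencoder-style pretraining, the encoder sees only the
    visible tokens and a decoder learns to reconstruct the masked ones,
    so the training targets come from the imagery itself.
    """
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    rng = random.Random(seed)  # seeded for reproducibility
    order = list(range(num_tokens))
    rng.shuffle(order)
    num_masked = int(num_tokens * mask_ratio)
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked


def reconstruction_loss(predicted: List[List[float]],
                        target: List[List[float]]) -> float:
    """Mean squared error between predicted and true token values.

    Callers pass only the masked tokens, so the model is scored on the
    parts of the image it could not see.
    """
    total, count = 0.0, 0
    for p_tok, t_tok in zip(predicted, target):
        for p, t in zip(p_tok, t_tok):
            total += (p - t) ** 2
            count += 1
    if count == 0:
        raise ValueError("no values to compare")
    return total / count


if __name__ == "__main__":
    visible, masked = random_mask(num_tokens=100, mask_ratio=0.75)
    print(len(visible), len(masked))  # 25 visible, 75 masked
```

The design point is that no annotator is involved: the "answer key" for each masked token is simply the original pixels, which is what lets pretraining scale to large unlabeled archives before a small labeled set is used for fine-tuning.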

“We believe that foundation models have the potential to change the way observational data are analyzed and help us to better understand our planet,” says NASA Chief Science Data Officer Kevin Murphy. “And by open-sourcing such models and making them available to the world, we hope to multiply their impact.”

NASA and IBM are also developing other applications to extract critical insights from Earth observations, including a large language model built on Earth science literature. In line with NASA’s open science guidelines and principles, all resulting models and products will be open and available to everyone in the scientific community.