CAI Promises To Deliver More Transparency in AI-Generated Images

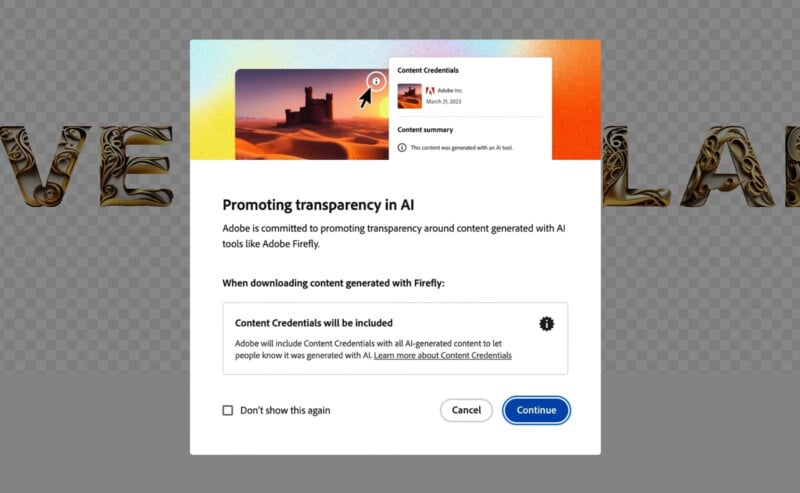

Adobe’s Firefly, a generative text-to-image artificial intelligence (AI) model designed to create images and text effects, launched in beta today. Alongside it, the Content Authenticity Initiative (CAI) has introduced new features intended to make the use of image editing and AI more transparent.

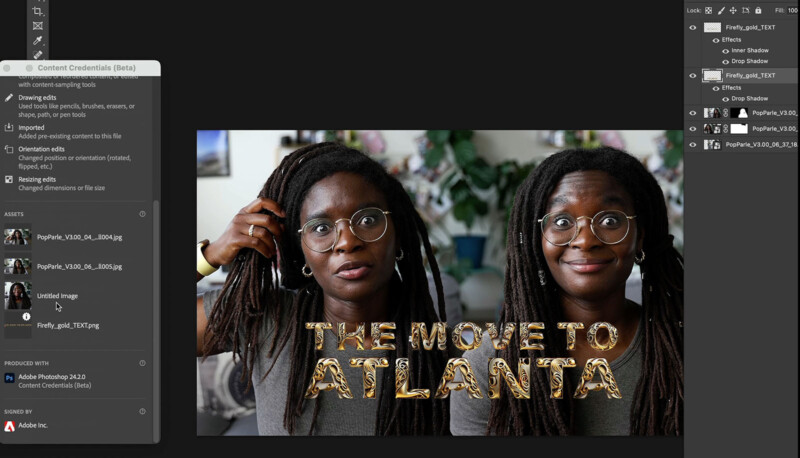

In a new video, digital storyteller Hallease Narvaez showcases Firefly while highlighting Adobe’s Content Credentials feature and its utility for creators.

Using Firefly to create AI-generated text for a video thumbnail, Narvaez shows how the CAI feature in Photoshop lists the files she used, the edits she made, and the fact that she used Firefly to generate the text.

The CAI goes further still when users save their Content Credentials to the cloud. Users aren’t required to attach credentials to the file itself, where a third party could remove them. Instead, they can rely on Verify, a tool created by the CAI. The tool, which is currently in beta, lets users inspect an image found on the internet and locate records of how it was created.

In Narvaez’s case, she can view all the files used to create her thumbnail, see that AI was used, and view her image’s progression during her editing process. Even if someone screen-captured her thumbnail, it would still be possible to inspect the screenshot and find the original creation information and editing history.
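The lookup described above can be pictured with a toy sketch. It assumes, purely for illustration, that a cloud registry indexes credentials by a visual fingerprint of the image rather than by metadata embedded in the file, which is one way a screenshot could still lead back to the original record. The names `CredentialRecord`, `CredentialRegistry`, and `average_hash` are hypothetical and are not part of Verify or any Adobe or CAI API; how Verify actually matches images is not described here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class CredentialRecord:
    """Hypothetical stand-in for a cloud-stored credential."""
    creator: str
    used_generative_ai: bool
    edits: List[str] = field(default_factory=list)


def average_hash(pixels: List[List[int]]) -> int:
    """Toy fingerprint: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


class CredentialRegistry:
    """Hypothetical registry keyed by fingerprint instead of file metadata."""

    def __init__(self) -> None:
        self._records: Dict[int, CredentialRecord] = {}

    def publish(self, pixels: List[List[int]], record: CredentialRecord) -> None:
        self._records[average_hash(pixels)] = record

    def find(self, pixels: List[List[int]], max_distance: int = 2) -> Optional[CredentialRecord]:
        """Return the closest record within max_distance bits, or None."""
        probe = average_hash(pixels)
        best = None
        for fingerprint, record in self._records.items():
            distance = hamming_distance(fingerprint, probe)
            if distance <= max_distance and (best is None or distance < best[0]):
                best = (distance, record)
        return best[1] if best else None


# A 4x4 grayscale "thumbnail" and a slightly brighter copy standing in for a screenshot.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 225],
    [11, 198, 28, 215],
]
screenshot = [[min(255, value + 3) for value in row] for row in original]
unrelated = [[255 - value for value in row] for row in original]

registry = CredentialRegistry()
record = CredentialRecord("Hallease Narvaez", True, ["Generated text with Firefly"])
registry.publish(original, record)

match = registry.find(screenshot)
print(match.creator if match else "no credentials found")
print(registry.find(unrelated))
```

The screenshot carries no metadata at all in this sketch, yet it still resolves to the published record because the match is made on the pixels themselves. A real system would need a far more robust fingerprint than this one.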

This highlights a critical component of the CAI for creators: preserving credentials and maintaining authorship. Images are routinely grabbed from different places on the web, modified, and reuploaded elsewhere. The CAI includes tools intended to make it possible to track down the source of an image and learn more about its creation.

Generative AI is incredibly powerful and often helpful. However, its use is an important piece of context when viewing content, as people should know not only who created what they see but also how it was edited and manipulated before they saw it.

Not only does the CAI promise to protect creators, but it also promises transparency regarding the use of image editing tools and AI. This is becoming more important as AI becomes more effective at creating images that can fool and mislead people. While AI is useful in the right hands, it can be put to nefarious use in the wrong ones. The Content Authenticity Initiative wants to enable people to see when and how AI is used to create content.