Three Ways to Spot a Fake, AI-Generated ‘Photo’

Fake, AI-generated photos can range from mostly harmless to disruptive to outright dangerous. It is not an overreaction to say that fake photos have real-world consequences that can even be deadly. Digital forensics expert Professor Hany Farid recently explained how to spot fake AI photos in what he describes as the fight for truth.

At a recent TED Talk, Farid, who is regularly called upon as an expert to help people and organizations determine if a digital photograph is genuine or not, explained some key techniques he and his team use to ascertain the truth of an image.

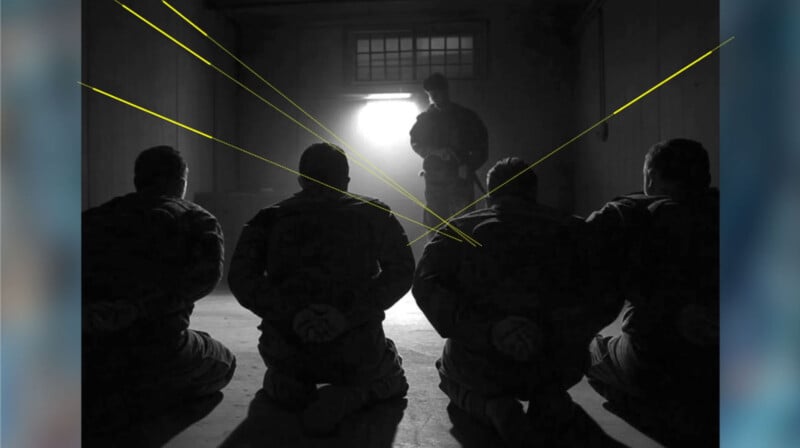

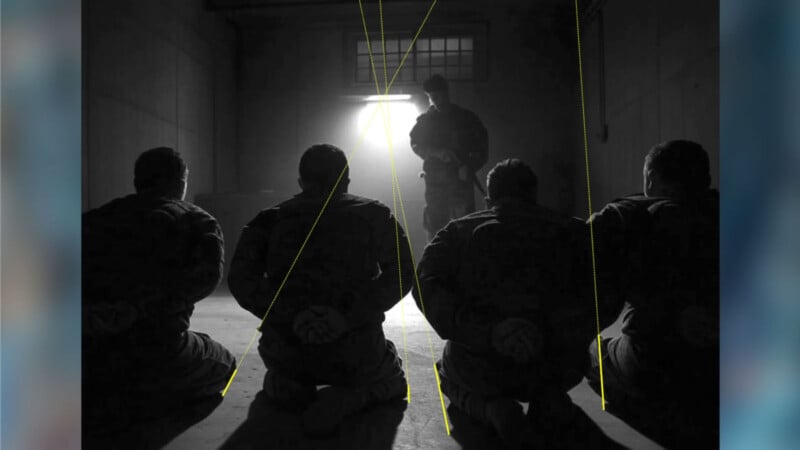

“You are a senior military officer and you’ve just received a chilling message on social media,” Farid explains, as part of an example to demonstrate real-world situations where people need to quickly and accurately determine if a photo is real or not. “Four of your soldiers have been taken, and if demands are not met in the next 10 minutes, they will be executed.”

“All you have to go on is this grainy photo,” Farid continues. There is not enough time to determine if four soldiers are missing. Time is of the essence; the threat is grave. So, what can you do?

“What’s your first move?” Farid asks. “If I may be so bold, your first move is to contact somebody like me and my team.”

Farid, a professor at UC Berkeley, is trained as an applied mathematician and computer scientist, which makes him especially well-suited to the science of finding faked photos. He has spent the past 30 years studying and developing methods to authenticate images, from Photoshopped photos to today's AI-generated content.

“It used to be, a case would come across my desk once a month,” Farid says. “Then it was once a week. Now, it’s almost every day.”

This massive increase in demand for his expert services is primarily due to the rise of generative AI. However, social media also plays a key role, as it incentivizes the sharing of many images with little regard for the truth.

“We are in a global war for truth,” Farid says. “With profound consequences for individuals, for institutions, for societies, and for democracies.”

While editing photos is not new — 19th-century photographers did their fair share of image manipulation — the ease and speed with which people can create photorealistic images in the age of generative AI are remarkable, and dangerous.

Thanks to the way AI image generators work, Farid can determine whether an image is real or not in three key ways.

The first relies upon residual digital noise. Real digital cameras have specific noise patterns unique to their image sensor and the way that images are captured. AI-generated images, on the other hand, come from AI models that have “learned” how to create images through a lengthy training process. At a basic level, AI models are fed authentic images that have been made noisy and, after billions of ever-improving attempts, the model learns how to turn a noisy image back into a normal-looking one that is semantically consistent with a user’s prompt. In other words, these models generate images by denoising, with the user’s prompt steering the result.

“And it’s incredible, but decidedly not how an actual photograph is taken,” Farid remarks.
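To make the training idea above a little more concrete, here is a minimal Python sketch of the "make a real image noisy" step. It is an illustration only, not Farid's tooling or any specific image generator's code. The function name `add_noise` and the blending parameter `alpha_bar` are assumptions chosen for this example; the square-root weighting follows the common textbook formulation of diffusion models.

```python
import math
import random


def add_noise(pixels, alpha_bar, rng):
    """Blend clean pixel values with Gaussian noise.

    alpha_bar = 1.0 leaves the image untouched; alpha_bar = 0.0 gives pure noise.
    Returns the noisy pixels and the noise that was mixed in.
    """
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [signal_scale * p + noise_scale * n for p, n in zip(pixels, noise)]
    return noisy, noise


# A tiny "image" of four pixel values, noised at a moderate level.
rng = random.Random(0)
clean = [0.2, 0.5, 0.7, 0.9]
noisy, noise = add_noise(clean, alpha_bar=0.5, rng=rng)
```

During training, a model would be shown `noisy` (plus the text prompt) and asked to recover the noise or the clean image. Repeating that across a huge number of images is what teaches it to turn noise into a plausible picture.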

One of the first things Farid and his team consider is whether the residual noise in an image matches the behavior of an actual camera or if it has a pattern consistent with AI-generated photos.
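The idea of a "noise residual" can be sketched in a few lines of Python: smooth the image, then subtract the smoothed version so that mostly fine-grained noise remains. This is a deliberately simplified stand-in, not the method Farid's team uses. The 3x3 box blur and the helper names (`box_blur`, `noise_residual`, `residual_energy`) are assumptions for illustration, and real forensic analysis relies on far more careful filtering and statistics.

```python
def box_blur(image):
    """3x3 mean filter; edge pixels average over the neighbours that exist."""
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            neighbours = [
                image[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
            ]
            row.append(sum(neighbours) / len(neighbours))
        blurred.append(row)
    return blurred


def noise_residual(image):
    """Image minus its smoothed version: what is left is mostly fine detail and noise."""
    blurred = box_blur(image)
    return [
        [pixel - smooth for pixel, smooth in zip(row, blurred_row)]
        for row, blurred_row in zip(image, blurred)
    ]


def residual_energy(image):
    """Mean squared value of the residual, a crude one-number summary."""
    values = [v for row in noise_residual(image) for v in row]
    return sum(v * v for v in values) / len(values)


# Grayscale images as lists of rows: a perfectly flat patch and a speckled one.
flat = [[10.0] * 4 for _ in range(4)]
speckled = [[10.0, 12.0, 10.0, 12.0] for _ in range(4)]
```

An analyst would then compare the structure of that residual against what a real camera sensor is expected to produce. A single energy number like the one above is far too crude for that, but it shows where the signal comes from.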

However, no forensic technique is perfect, so Farid and his team also employ other methods, including analyzing vanishing points and shadows.

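AI doesn’t understand vanishing points, the points in a photo where lines that are parallel in the real world, such as the edges of a road or a building, appear to converge. It generates images through a statistical denoising process and lacks a human-like understanding of geometry and physics. As a result, AI-generated photos often lack coherent vanishing points.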
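A vanishing-point check boils down to simple geometry: lines that are parallel in the real scene should meet at a single point in the photo. The Python sketch below is a simplified illustration, not Farid's software. It assumes someone has already marked line segments by hand, and the function names are invented for this example. It intersects the first two lines and measures how far the remaining ones miss that point.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def line_through(p, q):
    """Line (a, b, c) with a*x + b*y + c = 0 passing through points p and q."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def intersection(line1, line2):
    """Point where two lines meet, or None if they are parallel in the image."""
    x, y, w = cross(line1, line2)
    if abs(w) < 1e-12:
        return None
    return (x / w, y / w)


def distance_to_line(point, line):
    """Perpendicular distance from a point to a line, in pixels."""
    a, b, c = line
    return abs(a * point[0] + b * point[1] + c) / math.hypot(a, b)


def vanishing_point_error(segments):
    """Largest miss distance of lines 3..n from the meeting point of lines 1 and 2."""
    lines = [line_through(p, q) for p, q in segments]
    meeting_point = intersection(lines[0], lines[1])
    if meeting_point is None:
        return None
    return max(distance_to_line(meeting_point, line) for line in lines[2:])


# Three hand-marked segments that all head toward the pixel (100, 50).
consistent = [((0, 0), (50, 25)), ((0, 100), (50, 75)), ((0, 50), (10, 50))]
# Same scene, but the third line misses the meeting point by 30 pixels.
inconsistent = [((0, 0), (50, 25)), ((0, 100), (50, 75)), ((0, 80), (10, 80))]
```

A small error is what a real photo of parallel edges should give, allowing for some slack from imprecise marking and lens distortion. A large error suggests the geometry does not hold together, although deciding what counts as "large" is a judgment call this sketch does not settle.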

Farid says shadows have “a lot in common with vanishing points,” too, so he often considers these when doing forensic photo analysis.

“Because AI fundamentally doesn’t model the physics and geometry of the world, it tends to violate these physics,” Farid says.

His example image of the four alleged hostages exhibits the tell-tale noise pattern of an AI-generated photo, lacks a coherent vanishing point, and includes shadows that are not consistent with real-world physics.

Farid says that while it’s not possible to turn everyone into digital forensics experts overnight, he and his team are developing tools to help journalists and courts more reliably identify fake photos. As Farid mentions, the Content Authenticity Initiative is working diligently to ensure that more photos are authenticated at the time of capture, helping people quickly identify authentic photos using Content Credentials.

There is no quick, 100% accurate method to determine if an image is real or fake that works in every possible situation, but through a combination of approaches, it is possible to identify a fake, AI-generated photo.

Image credits: TED Talk, Hany Farid