Ideogram 2.0 Dangerously Lets You Generate Photorealistic AI Images of Anything

There is a widely held belief that AI images pose a danger to society. The technology can create fake images that pass as genuine, giving bad actors an opportunity to spread misinformation.

Step forward Ideogram, a startup that’s raised $16.5 million, and its latest model, Ideogram 2.0, which promises to “create images that can convincingly pass as real photos.”

However, unlike most AI image generators, which have safeguards in place, Ideogram 2.0 seemingly has no restrictions on creating photorealistic images of real people in compromising positions.

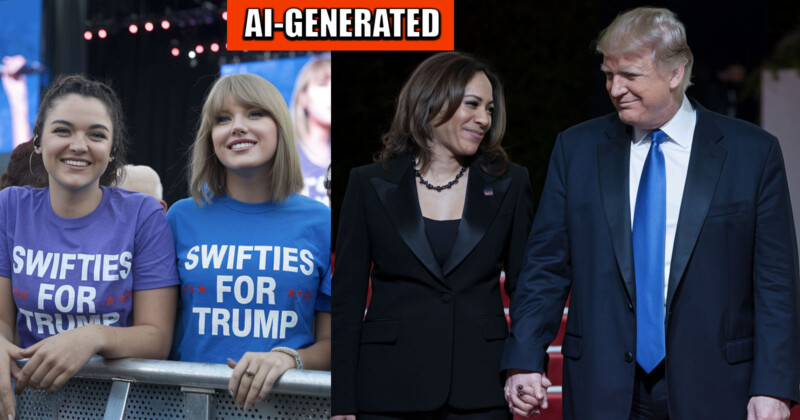

Within a few seconds of signing up, PetaPixel was able to create photorealistic images of Kamala Harris and Donald Trump holding hands as well as Taylor Swift fans wearing “Swifties for Trump” t-shirts.

When given the exact same prompts, Midjourney refuses to generate these images. Even Elon Musk’s Grok image generator has been toned down after its initially chaotic launch.

> Introducing Ideogram 2.0 — our most advanced text-to-image model, now available to all users for free.
>
> Today’s milestone launch also includes the release of the Ideogram iOS app, the beta version of the Ideogram API, and Ideogram Search.
>
> Here’s what’s new… 🧵 pic.twitter.com/nvD0ogRh2J
>
> Ideogram (@ideogram_ai), August 21, 2024

Ideogram, a Canadian startup, makes no bones about its capabilities in its marketing literature.

“The Realistic style of Ideogram 2.0 enables you to create images that can convincingly pass as real photos,” reads the announcement for Ideogram 2.0. “Textures are significantly enhanced, and human skin and hair appear lifelike.”

As well as creating fake, photorealistic images, Ideogram also trumpets its ability to generate legible text. It pretty much nailed the “Swifties for Trump” t-shirts, a hot topic of debate this week after Donald Trump shared very similar AI-generated images in a social media post that appeared to suggest Taylor Swift and her fans were endorsing him.

With the presidential election just around the corner, releasing a tool this powerful, with no safeguards and so obviously open to manipulation by bad actors, is idiotic, reckless, and downright dangerous.

As of publication, Ideogram had not responded to PetaPixel’s request for comment.

Irresponsible AI

There’s no doubt that Ideogram 2.0 is an impressive tool. Most people who use it will presumably do so in good faith and create benign AI art.

However, it is glaringly obvious that an unrestrained AI image generator could be used for malicious ends.

And it’s not just Ideogram. Yesterday, PetaPixel editor-in-chief Jaron Schneider wrote about the AI tools on Google’s Pixel 9 Pro.

“Google doesn’t appear to be adding any level of transparency that AI was used to create these images,” he writes about the phone’s new Magic Editor, which allows people to manipulate real photos by using AI to generate new objects and elements.

There is a clear and urgent need for the nascent AI image industry to move responsibly and transparently. At the very least, these tools should have some guardrails.

Image credits: All images generated with Ideogram 2.0