Adobe’s Magic Fixup is an AI Cut-and-Paste Photo Editor Trained on Videos

![]()

Although Adobe routinely releases new artificial intelligence features in its software, the company’s research division is always working hard on technological breakthroughs, long before related features make it into consumer-facing software. Adobe Research’s latest creation is Magic Fixup, which automates complex image editing tasks.

While photo editing tools, including AI ones, are typically trained using still frames, or photos, Adobe’s engineers believe photo editing tools could perform better when trained not on photos, but video content.

“Our key insight is that videos are a powerful source of supervision for this task: objects and camera motions provide many observations of how the world changes with viewpoint, lighting, and physical interactions,” the researchers explain on a GitHub page for Magic Fixup.

As Venture Beat writes in its coverage, this “novel method” enables the new AI technology to understand better how “objects and scenes change under varying conditions of light, perspective, and motion.”

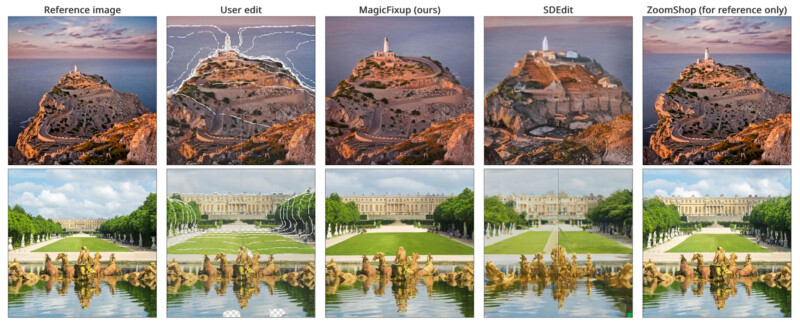

The team calls image editing a “labor-intensive process.” It claims that while human editors can easily rearrange parts of an image to compose a new one, edits can look unrealistic, especially when the object has been moved to an area where the prevailing lighting conditions on that object no longer make sense. Suppose a person takes a picture of a home interior being side-lit by a window. If they move a piece of furniture from the brighter side to a darker area of the room, the object’s lighting will not make sense in its new location.

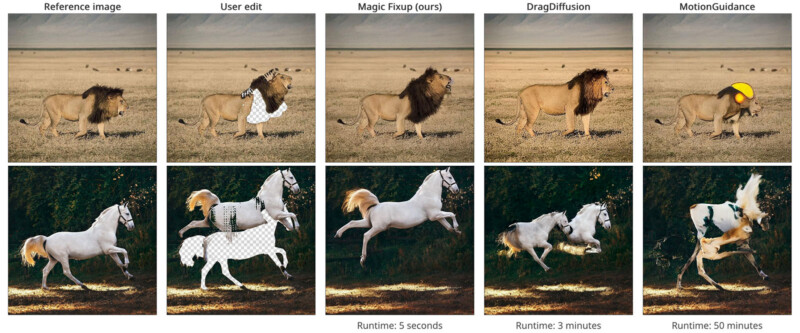

It is one thing to move objects around in an image, but another to make them look like they fit in the new spot. That is where Magic Fixup hopes to shine.

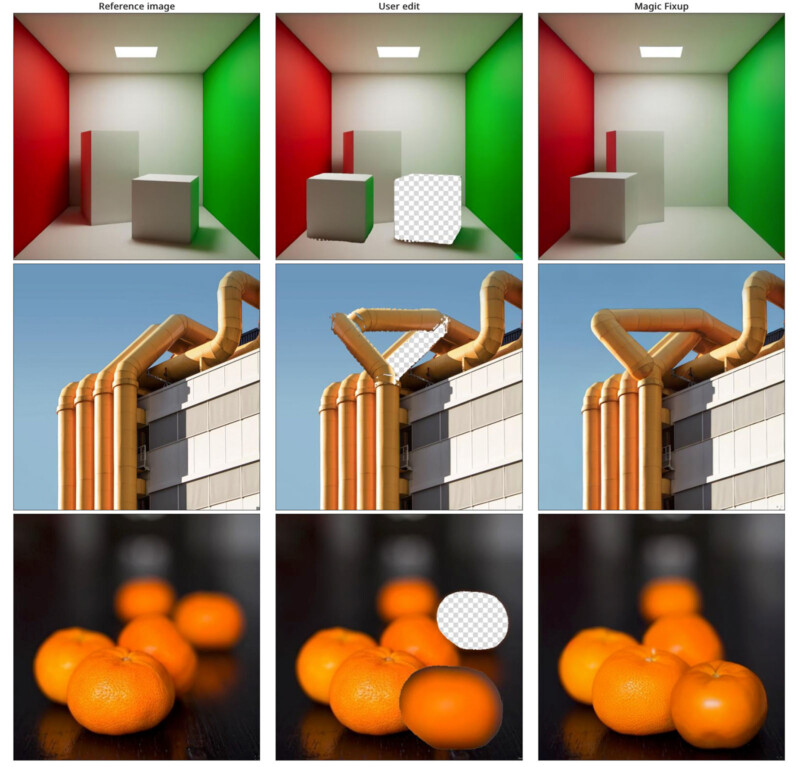

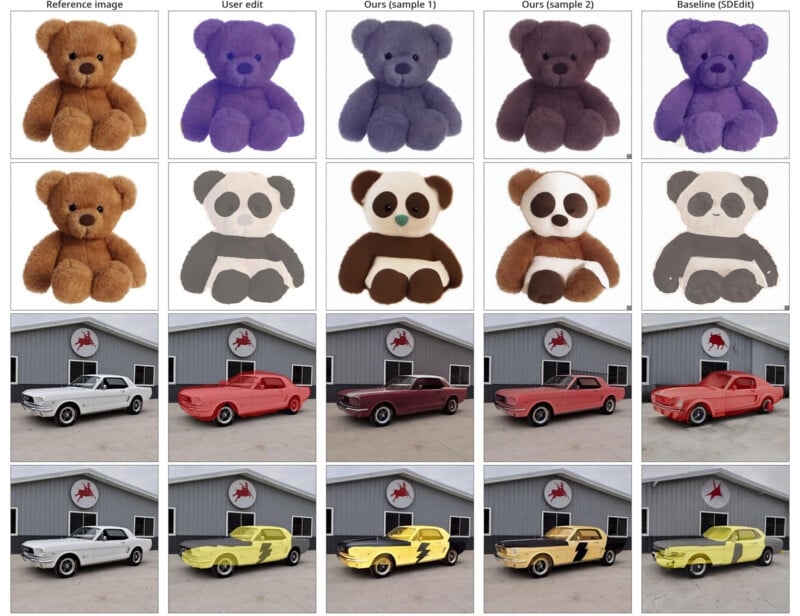

The team has built a user interface for its tool that can live within a developer version of Photoshop. The user can essentially cut out the object they want to change and paste it where they want it to go. Magic Fixup interprets the user’s cut-and-paste operation to recreate the image.

The researchers explain that videos offer helpful information concerning how objects exist in the real world, including in response to changing conditions. How it precisely works is very sophisticated and beyond the scope of this article. However, the basics are that the Magic Fixup pipeline relies on two different diffusion models that operate simultaneously.

One pipeline handles the reference image, pulling out the required detail for recreation, while the other synthesizes the user’s coarse edit and the details from the reference image.

The results, while in early development, are very impressive. Experimental data shows that most users prefer Magic Fixup’s results to those of contemporary competing models. There are limitations, as the team admits its model has trouble with hands, faces, and small objects, but the results show that, at least in some situations, Magic Fixup can perform as well as a human editor in a fraction of the time.

The complete research is detailed in a research paper, “Magic Fixup: Streamlining Photo Editing by Watching Dynamic Videos.” The research has been conducted by Hadi AlZayer, Zhihao Xia, Xuaner Zhang, Eli Shechtman, Jia-Bin Huang, and Michael Gharbi. The researchers work for Adobe and the University of Maryland.

Image credits: Adobe Research, University of Maryland. AlZayer, Xia, Zhang, Shechtman, Huang, Gharbi