Voice-Activated Backpack Aids the Visually Impaired with AI Cameras

Artificial intelligence developer Jagadish K. Mahendran and his team at the University of Georgia have designed an AI-powered, voice-activated camera system that can help visually impaired people navigate common challenges such as traffic signs, hanging obstacles, and crosswalks.

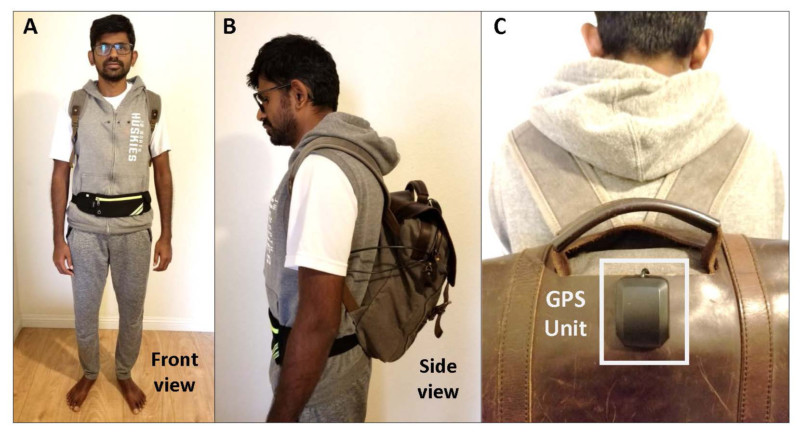

The system is housed in a small backpack that holds the main computing unit (in this case, a laptop). The laptop is connected to a camera and to a fanny pack containing a battery that provides approximately eight hours of continuous use. The camera, a Luxonis OAK-D spatial AI camera, can be mounted either on a vest or on the fanny pack. In this particular setup, the camera is attached to the inside of a vest, and three tiny holes in the vest serve as its viewports.

According to a press release, the OAK-D unit is a versatile and powerful AI device that runs on an Intel Movidius VPU and the Intel Distribution of OpenVINO toolkit. It can run advanced neural networks while providing accelerated computer vision functions, a real-time depth map from its stereo camera pair, and color information from a single 4K camera.

As reported by Engadget, the system communicates with the wearer through a Bluetooth earpiece. The wearer can use it to issue queries and commands to the AI, which then responds with spoken information.

“As the user moves through their environment, the system audibly conveys information about common obstacles including signs, tree branches, and pedestrians,” Mahendran writes in a case study. “It also warns of upcoming crosswalks, curbs, staircases, and entryways.”

The system is meant to replace a seeing-eye dog. It would allow visually impaired people to walk more safely on city sidewalks, avoid obstacles more reliably, stay better informed about traffic and street signs, and be aware of and, perhaps more importantly, stop at crosswalks.

“For example, if the user is walking down the sidewalk and is approaching a trash bin, the system can issue a verbal warning of ‘left,’ ‘right,’ or ‘center,’ indicating the relative position of the receptacle,” Mahendran continues. “When the user is approaching a corner, the system will describe what’s ahead by saying ‘Stop sign’ or ‘Enabling crosswalk,’ or both. Similarly, if the user is approaching an area where bushes or branches overhang the sidewalk, the system will issue a notice such as ‘Top, front,’ warning of something in the way.”
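As a rough illustration of how a detected object could be turned into the "left," "right," or "center" cue Mahendran describes, the sketch below splits the camera frame into equal vertical thirds and treats any object whose bounding box ends high in the frame as an overhang. The `Detection` class, the thirds split, and the 0.4 overhang threshold are all hypothetical choices made for this example; they are not taken from the team's implementation.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detector output in normalized image coordinates."""
    label: str        # object class, e.g. "trash bin" or "branch"
    x_center: float   # horizontal box center: 0.0 = left edge, 1.0 = right edge
    y_bottom: float   # bottom edge of the box: 0.0 = top of frame, 1.0 = bottom


def horizontal_position(x_center: float) -> str:
    """Map a normalized horizontal position to a cue using equal thirds (an assumption)."""
    if not 0.0 <= x_center <= 1.0:
        raise ValueError("x_center must be in [0, 1]")
    if x_center < 1 / 3:
        return "left"
    if x_center > 2 / 3:
        return "right"
    return "center"


def verbal_warning(det: Detection, overhang_threshold: float = 0.4) -> str:
    """Return a short spoken cue for one detection.

    Hypothetical rule: a box whose bottom edge sits in the upper part of the
    frame (above overhang_threshold) is treated as something hanging in the way.
    """
    if det.y_bottom < overhang_threshold:
        return "Top, front"
    return horizontal_position(det.x_center)


if __name__ == "__main__":
    print(verbal_warning(Detection("trash bin", x_center=0.2, y_bottom=0.9)))  # left
    print(verbal_warning(Detection("branch", x_center=0.5, y_bottom=0.3)))     # Top, front
```

A real system would presumably also use the OAK-D depth map to decide when an object is close enough to announce; this sketch only covers the mapping from image position to a spoken word.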

Based on what the system has already shown it can do, the research team argues there is little doubt that it will be the first of many more advanced versions to follow. As the technology continues to evolve, it should have a profound and liberating effect on many visually impaired people.

AI has proven highly valuable in assisting people with impairments in the past, including helping a blind man become a photographer. In the spirit of continuous development, this project will be open-sourced, and the full work will be published as a research paper in the near future.