Google Trains an AI to Geotag a Photo Just by Looking at the Pixels


Geotagging is usually done using a built-in GPS system, but in the future it might be possible to figure out where a photo was shot just by showing the picture to an artificial intelligence program.

Google computer vision scientist Tobias Weyand and a couple of his colleagues have trained a deep-learning system, called PlaNet, to figure out where photos were captured using only the contents of the image (i.e. the pixels, not the metadata).

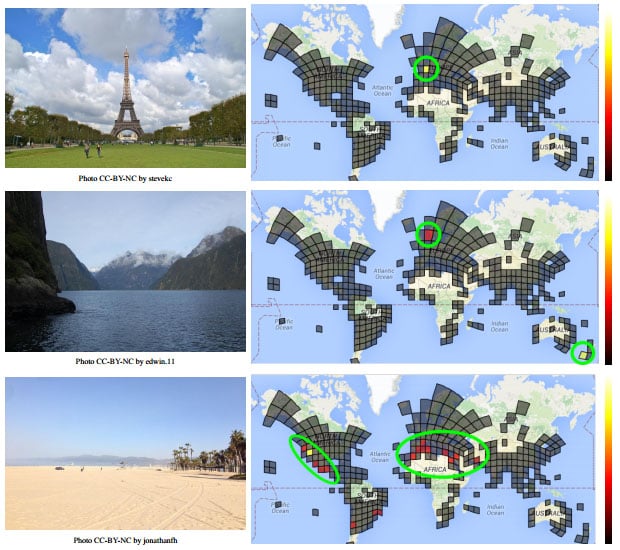

Here’s how the system works: first, the team divided the world into a grid of 26,000 squares. The size of each square depends on how many photos are captured there. Places where photos usually aren’t taken (the poles and the oceans) are ignored, less popular places get larger squares, and photo hot spots get tiny ones.
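
To make the idea of a photo-density-driven grid concrete, here is a minimal sketch, assuming a plain latitude/longitude quadtree: keep splitting any square that holds too many photos, and drop any square that holds too few. Google's system is described as using S2 geometry cells rather than a lat/lon quadtree, and the function name `build_adaptive_grid` and its `max_photos`/`min_photos` thresholds are hypothetical, chosen only for illustration.

```python
import random

def build_adaptive_grid(points, bounds=(-90.0, 90.0, -180.0, 180.0),
                        max_photos=10_000, min_photos=50,
                        depth=0, max_depth=20):
    """Hypothetical sketch: split a (lat_min, lat_max, lon_min, lon_max) box
    into quadrants until each cell holds at most max_photos geotags, and drop
    cells with fewer than min_photos. Returns a list of cell bounds."""
    lat_min, lat_max, lon_min, lon_max = bounds
    if len(points) < min_photos:
        return []  # too sparse (e.g. open ocean, poles): leave out of the grid
    if len(points) <= max_photos or depth == max_depth:
        return [bounds]  # few enough photos: keep this cell as one square
    lat_mid = (lat_min + lat_max) / 2
    lon_mid = (lon_min + lon_max) / 2
    # Quadrant index = 2 * (point is in north half) + (point is in east half)
    quadrants = [
        (lat_min, lat_mid, lon_min, lon_mid),  # 0: south-west
        (lat_min, lat_mid, lon_mid, lon_max),  # 1: south-east
        (lat_mid, lat_max, lon_min, lon_mid),  # 2: north-west
        (lat_mid, lat_max, lon_mid, lon_max),  # 3: north-east
    ]
    buckets = [[] for _ in quadrants]
    for lat, lon in points:
        buckets[2 * (lat >= lat_mid) + (lon >= lon_mid)].append((lat, lon))
    cells = []
    for quad, pts in zip(quadrants, buckets):
        cells.extend(build_adaptive_grid(pts, quad, max_photos, min_photos,
                                         depth + 1, max_depth))
    return cells

# Toy data: a dense cluster of photos around Paris plus a sparse global scatter.
random.seed(0)
photos = [(48.85 + random.uniform(-0.1, 0.1), 2.35 + random.uniform(-0.1, 0.1))
          for _ in range(300)]
photos += [(random.uniform(-60, 70), random.uniform(-180, 180)) for _ in range(60)]
cells = build_adaptive_grid(photos, max_photos=100, min_photos=5)
```

With this toy data, the squares around the Paris "hot spot" end up many times smaller than the squares covering the sparsely photographed rest of the map, which mirrors the behavior the article describes.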


The scientists then gathered together 126 million geotagged photos from the Internet and created a massive database with those images placed on their special grid.

Using 91 million photos from that database, the scientists trained a neural network (i.e. a fancy artificial intelligence system) to figure out which square any image should go in based on what’s in the image. They checked the accuracy of the system using the other ~35 million photos.
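
Framing the problem this way turns "where was this taken?" into a classification task: each training photo's label is simply the index of the square its geotag falls in, and at prediction time the network's scores are turned into probabilities so the most likely square can be picked. The sketch below, assuming hypothetical helpers `cell_label` and `predict_location`, illustrates only that framing; the neural network itself is omitted (the `logits` list stands in for its raw per-square scores), and reporting the center of the winning square as the guess is a simplifying assumption.

```python
import math

def cell_label(lat, lon, cells):
    """Training target: index of the grid cell containing a photo's geotag."""
    for i, (lat_min, lat_max, lon_min, lon_max) in enumerate(cells):
        if lat_min <= lat < lat_max and lon_min <= lon < lon_max:
            return i
    return None  # geotag falls in an area the grid ignores

def softmax(logits):
    """Turn raw per-cell scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_location(logits, cells):
    """Pick the most probable cell and report its center as the guess."""
    probs = softmax(logits)
    best = max(range(len(cells)), key=lambda i: probs[i])
    lat_min, lat_max, lon_min, lon_max = cells[best]
    center = ((lat_min + lat_max) / 2, (lon_min + lon_max) / 2)
    return center, probs[best]

# Two hypothetical cells and made-up network scores for one image.
cells = [(0.0, 10.0, 0.0, 10.0), (10.0, 20.0, 0.0, 10.0)]
label = cell_label(15.0, 5.0, cells)
guess, confidence = predict_location([0.1, 2.0], cells)
```

Here a photo geotagged at latitude 15, longitude 5 gets label 1, and because the made-up score for cell 1 is higher, the predicted location is that cell's center.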

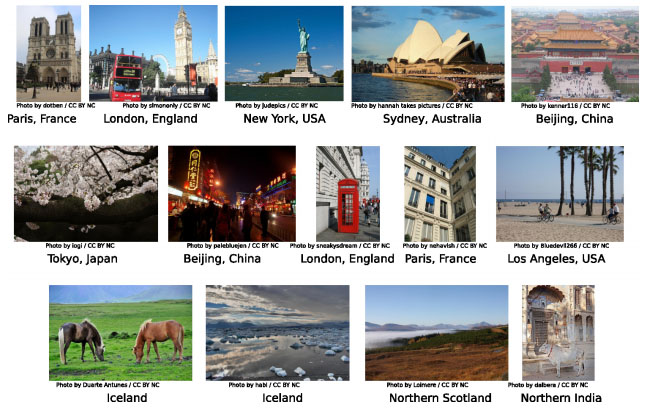

Finally, after building and verifying their system, the team gathered 2.3 million geotagged photos from Flickr and fed them one by one into PlaNet. The results were impressive: the system geotagged 3.6% of the photos with street-level accuracy, 10.1% with city-level accuracy, 28.4% to the right country, and 48.0% to the right continent.
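
Accuracy "levels" like these are typically scored by measuring the great-circle distance between the predicted and true locations and counting how many photos fall within a radius for each level. A minimal sketch follows, assuming the haversine formula on a spherical Earth and the 1 / 25 / 750 / 2,500 km radii commonly used for street / city / country / continent in geolocation benchmarks; those exact radii and the function names are assumptions, not figures from the article. Because a photo within 1 km is also within 25 km, the fractions are cumulative, consistent with the reported percentages growing from street to continent.

```python
import math

EARTH_RADIUS_KM = 6371.0

# Assumed radii (km) for each accuracy level; not taken from the article.
THRESHOLDS_KM = {"street": 1, "city": 25, "country": 750, "continent": 2500}

def haversine_km(p, q):
    """Great-circle distance between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))

def accuracy_by_level(predictions, ground_truth, thresholds=THRESHOLDS_KM):
    """Fraction of photos whose error is within each level's radius."""
    errors = [haversine_km(p, t) for p, t in zip(predictions, ground_truth)]
    return {level: sum(e <= km for e in errors) / len(errors)
            for level, km in thresholds.items()}

# Hypothetical example: exact hit in Paris, Paris guessed for a London photo,
# and an exact hit at (0, 0).
paris, london = (48.8566, 2.3522), (51.5074, -0.1278)
acc = accuracy_by_level([paris, paris, (0.0, 0.0)], [paris, london, (0.0, 0.0)])
```

In this made-up example the London photo (about 340 km off) counts toward the country and continent levels but not the street or city levels.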

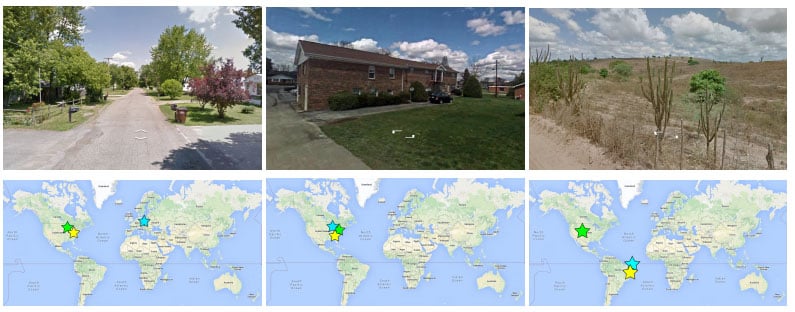

To see how the system stacks up against real humans, the team pitted it against people in GeoGuessr, an online game that challenges you to identify the location of a random Street View image.


In tests against 10 human players, PlaNet won 28 of the 50 rounds. Its guesses were off by a median distance of 1,131.7 km, while the humans' were off by 2,320.7 km.
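
Both numbers in that comparison come from per-round distance errors: a round is "won" by whichever guess lands closer to the true spot, and the median summarizes each side's typical error without letting a few wildly wrong guesses dominate. The sketch below assumes one human guess per round, haversine distances on a spherical Earth, and that ties count as a loss for the machine; the function name `compare` and all coordinates are hypothetical.

```python
import math
from statistics import median

EARTH_RADIUS_KM = 6371.0

def haversine_km(p, q):
    """Great-circle distance between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, p)
    lat2, lon2 = map(math.radians, q)
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))

def compare(truths, machine_guesses, human_guesses):
    """Return (machine median error km, human median error km, machine wins)."""
    machine_err = [haversine_km(g, t) for g, t in zip(machine_guesses, truths)]
    human_err = [haversine_km(g, t) for g, t in zip(human_guesses, truths)]
    # A round counts as a machine win only if its error is strictly smaller.
    machine_wins = sum(m < h for m, h in zip(machine_err, human_err))
    return median(machine_err), median(human_err), machine_wins

# Three made-up rounds, all with the true location at (0, 0).
truths = [(0.0, 0.0)] * 3
machine = [(0.0, 1.0), (0.0, 2.0), (0.0, 10.0)]
human = [(0.0, 2.0), (0.0, 1.0), (0.0, 20.0)]
m_med, h_med, wins = compare(truths, machine, human)
```

In this toy run the machine wins two of three rounds even though both sides have the same median error, a reminder that "rounds won" and "median distance" measure different things.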

And that’s just the beginning. The trained model takes up only 377MB, and by continuing to refine it with the ever-growing pool of photos shared online, we may soon find that accurate, GPS-free automated geotagging becomes a regular part of our photo-sharing world.

(via Google via MIT Technology Review)