Google Adds Cinematic Controls, Outpainting, and Inpainting to AI Video Model Veo 2

Google is rolling out a series of updates to its generative AI tools for creative work, including a significant upgrade to its video generation model, Veo 2.

The new features — available for preview through Google Cloud’s Vertex AI platform — are designed to give users more control over cinematic style and editing in both AI-generated and real-world footage.

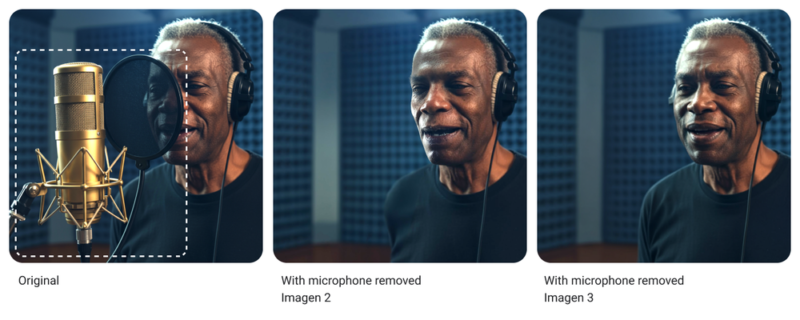

Google Veo 2, developed by Google DeepMind, is introducing inpainting and outpainting tools. Inpainting enables automatic removal of “unwanted background images, logos, or distractions from your videos,” according to Google, as reported by The Verge.

Outpainting expands the original video’s frame, filling in the new areas with AI-generated footage that matches the look and feel of the source material. Outpainting is similar to Adobe’s Generative Expand feature for images, while inpainting resembles Generative Fill.

The outpainting feature could be used to convert a video to a different aspect ratio, which is helpful for creators posting the same clip to multiple platforms.
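The aspect-ratio use case comes down to simple geometry: to reach a new ratio without cropping, the canvas grows along one axis and the model has to generate everything outside the original frame. The sketch below only does that arithmetic. It is not Veo's or Vertex AI's API; the function name `outpaint_padding` is hypothetical, and it assumes the original frame is kept centered on the expanded canvas.

```python
import math
from fractions import Fraction


def outpaint_padding(width: int, height: int, target_w: int, target_h: int) -> tuple[int, int, int, int]:
    """Hypothetical helper: pixels to add (left, right, top, bottom) so a
    width x height frame reaches target_w:target_h without cropping.

    Assumes the original frame stays centered on the new canvas; any odd
    leftover pixel goes to the right or bottom edge.
    """
    target = Fraction(target_w, target_h)
    current = Fraction(width, height)

    if current < target:
        # Frame is too narrow for the target ratio: widen it, keep the height.
        new_w, new_h = math.ceil(height * target), height
    else:
        # Frame is too wide (or already matches): extend it vertically, keep the width.
        new_w, new_h = width, math.ceil(width / target)

    extra_w, extra_h = new_w - width, new_h - height
    left, top = extra_w // 2, extra_h // 2
    return left, extra_w - left, top, extra_h - top


# A 1920x1080 (16:9) clip reframed as a square needs 420 px generated above and below.
print(outpaint_padding(1920, 1080, 1, 1))  # (0, 0, 420, 420)
```

Expanding rather than cropping is the point of outpainting here: every original pixel survives, and only the padded regions are left for the model to fill.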

The update also introduces cinematic presets. These let users apply stylistic techniques such as timelapse, drone-perspective shots, or simulated camera pans simply by including them in their text prompts. Google shared an example of a “pan right” instruction.

Veo 2 also debuts an interpolation feature that generates smooth transitions between two still images, filling in the intermediate frames to produce a continuous video segment.

The updates are positioned to compete with Adobe’s Firefly video model, which recently brought similar generative capabilities to Premiere Pro.

The Verge article carries an eyebrow-raising quote from Justin Thomas, digital experience lead at Kraft Heinz, who says the tools let his corporate team complete in eight hours tasks that “once took us eight weeks.”

Google's audio tools are getting updates too. Its music generation model, Lyria, is now in private preview, and its speech model, Chirp 3, gains a major upgrade with an “Instant Custom Voice” feature that can generate “realistic custom voices from 10 seconds of audio input.” Google is also previewing a transcription feature that distinguishes between individual speakers in multi-person conversations, producing cleaner, clearer transcripts.