Tesla Autopilot Car Drove Into a Giant Photo of a Road

Famous YouTube creator and former NASA engineer Mark Rober, who provides his 65 million subscribers with scientific entertainment videos, put Tesla’s vision-based safety features up against a LiDAR system. The results, though drawn from an arguably flawed test, highlight the limitations of vision-based autonomous vehicle systems.

Before getting into Rober’s video, what it shows, and what it doesn’t, it’s important to contextualize the different autonomous vehicle technologies discussed. First, SAE International defines six levels of vehicle autonomy, ranging from level zero (no automation whatsoever) to level five (complete driving automation in all conditions at all times, with no driver attention or interaction required). Each jump from one level to the next requires significant technological advancement, and Tesla’s commercially available cars are currently classified as level two, meaning that they offer partial vehicle automation. Tesla vehicles can steer, accelerate, and brake on their own, but an alert driver must always be in the driver’s seat.

All Tesla vehicles built for the North American market use multiple external cameras and vision processing to perform their various functions, including Autopilot (traffic-aware cruise control and automatic steering) and supervised self-driving (navigation, automatic lane changes, automatic parking, summoning, and traffic light and stop sign control).

All autonomous vehicles (none available to the public are level five yet, by the way) require some sensing system to see the world around them and processing to turn that data into safe action.

While some autonomous vehicle makers celebrate LiDAR (light detection and ranging) technology for its superior three-dimensional mapping performance in challenging situations, Tesla claims that vision-based systems using traditional optical cameras are better overall because they can more precisely identify the objects around the vehicle and can improve over time using artificial intelligence. Plus, camera-based systems are cheaper, which car makers always like.

In Mark Rober’s new video, he pits a Tesla against a LiDAR-equipped vehicle. As Forbes notes, Rober used an older version of Tesla’s Autopilot system in his testing. Autopilot is not a fully self-driving system, and neither is the newer software Tesla markets as “Full Self-Driving (Supervised).” Again, Tesla is at level two.

In any event, Rober attempted to see if a photo wall of an open road could fool Tesla’s vision-based system. Would the Tesla mistake the painted road for the real thing and drive straight through the wall, Wile E. Coyote style? Yes.

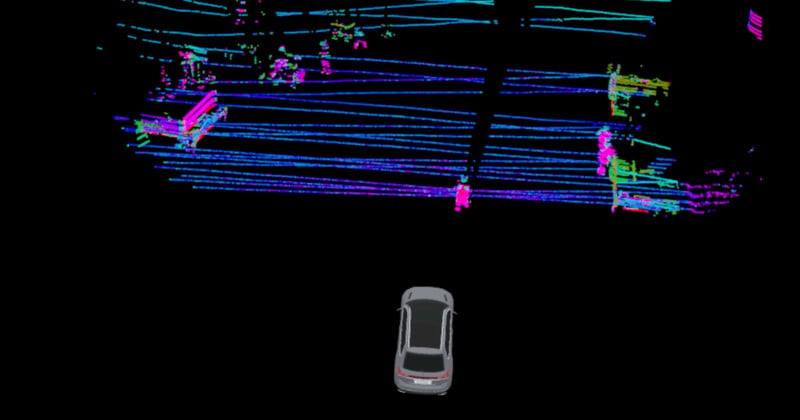

But before getting to that, Rober demonstrated how LiDAR works by secretly bringing a LiDAR camera onto Disney’s famous and notoriously secretive Space Mountain ride. The ride runs in near-total darkness, and riders can’t see what is happening around them. LiDAR gets around that because it maps its surroundings by emitting laser pulses and measuring how long the reflected light takes to return to the sensor. While this reveals nothing about what the objects the LiDAR camera “sees” actually are, it can produce a detailed 3D map in complete darkness, which is precisely why some autonomous car companies believe it is essential to include on their cars.
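To make the time-of-flight idea described above concrete, here is a minimal Python sketch of the arithmetic. The function names are hypothetical, and it assumes the sensor reports each pulse’s round-trip time and beam direction and that light travels at its vacuum speed; real LiDAR units handle timing, calibration, and multiple returns in their own firmware.

```python
import math

# Speed of light in a vacuum, in meters per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Convert a laser pulse's round-trip time into a one-way range in meters.

    The pulse travels out to the target and back, so the one-way range is
    half of (speed of light x round-trip time). Assumes vacuum light speed;
    in air, light is only about 0.03% slower.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Turn one range reading plus the beam's direction into an (x, y, z) point.

    Hypothetical axis convention: x points forward, y to the left, z up;
    azimuth is measured in the horizontal plane, elevation above it.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the range onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


if __name__ == "__main__":
    # A return arriving 200 nanoseconds after emission puts the surface about 30 m away.
    r = tof_distance_m(200e-9)
    print(f"{r:.2f} m")  # 29.98 m
    # Repeating this for many beam directions builds up the 3D point cloud.
    print(to_point(r, azimuth_deg=0.0, elevation_deg=0.0))
```

Because the range comes from timing the pulse rather than from interpreting what a surface looks like, a painted wall reflects the pulse at the wall’s distance, not at the distance of the road painted on it.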

Tesla has openly criticized LiDAR systems as “dumb” because they cannot identify objects. But LiDAR can detect an object in the road even if that object looks like more road, which is why an autonomous car equipped with Luminar’s LiDAR technology (https://www.luminartech.com/) was not fooled by the ACME-inspired photo wall while the Tesla was. LiDAR also handles situations that a camera-only system struggles with, such as extreme fog.

There is no way to know whether Tesla’s most recent system would have performed better. Still, critics of pure vision-based autonomous vehicle systems have routinely noted that such systems are vulnerable to visual spoofing and can perform badly in adverse conditions.


The test also calls into question whether a solely vision-based system could ever fully overcome the risk of visual spoofing. It’s not clear that spoofing is a real-world risk (who is putting up photo walls on roads?), but Rober’s testing raises broader questions about how to build a safe, fully autonomous vehicle and whether such a vehicle would need a combination of sensing technologies, including cameras, LiDAR, and even radar.

While the threat of running into a photo wall may be low, the threat posed by unpredictable, challenging, and complicated driving scenarios is not, and level four and five autonomous cars will need seriously robust and sophisticated sensing technology to handle everything the real world throws at them.

Image credits: Mark Rober