Sphere Studio’s 18K Big Sky Sensor Tech Has Been Revealed


A recent scientific article details the Big Sky Camera that powers the spectacular visuals at Sphere, including those of Oscar-nominated filmmaker Darren Aronofsky's new film, Postcard From Earth, which is playing exclusively at the Las Vegas Sphere.

As reported by YM Cinema, a paper about Big Sky's sensor, published by MDPI, offers a wealth of technical detail about the massive 316-megapixel HDR image sensor inside the state-of-the-art cinema camera.

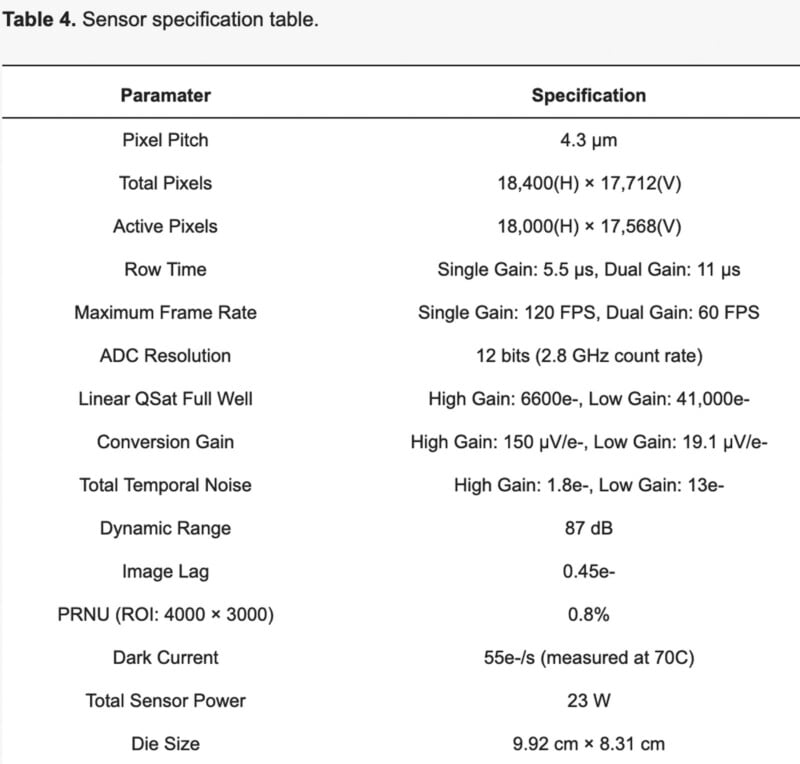

“We present a 2D-stitched, 316MP, 120FPS, high dynamic range CMOS image sensor with 92 CML output ports operating at a cumulative data rate of 515 Gbit/s. The total die size is 9.92 cm × 8.31 cm and the chip is fabricated in a 65 nm, four-metal BSI process with an overall power consumption of 23 W. A 4.3 µm dual-gain pixel has a high and low conversion gain full well of 6600e- and 41,000e-, respectively, with a total high gain temporal noise of 1.8e- achieving a composite dynamic range of 87 dB,” write the sensor's makers, Forza Silicon (AMETEK Inc.) of Pasadena, California, and Sphere Entertainment Co.
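The abstract's readout numbers can be cross-checked with some simple arithmetic. The sketch below uses only the pixel counts, frame rate, ADC bit depth, port count, and aggregate data rate reported in the paper; the function name is our own, and the calculation deliberately ignores any encoding or protocol overhead, so it is a rough sanity check rather than the paper's own accounting.

```python
# Back-of-envelope check of the readout figures quoted in the abstract.
# All constants come from the paper; raw_pixel_rate_gbps is a hypothetical
# helper that counts pixel payload only (no encoding/protocol overhead).

ACTIVE_COLS = 18_000     # active pixels per row
ACTIVE_ROWS = 17_568     # active rows
FPS_SINGLE_GAIN = 120    # full-resolution frame rate, single-gain mode
ADC_BITS = 12            # ADC precision
PORTS = 92               # CML output ports
AGGREGATE_GBPS = 515     # cumulative data rate quoted in the abstract


def raw_pixel_rate_gbps(cols: int, rows: int, fps: float, bits: int) -> float:
    """Pixel payload in Gbit/s: pixels per frame x frames/s x bits per pixel."""
    return cols * rows * fps * bits / 1e9


megapixels = ACTIVE_COLS * ACTIVE_ROWS / 1e6
payload = raw_pixel_rate_gbps(ACTIVE_COLS, ACTIVE_ROWS, FPS_SINGLE_GAIN, ADC_BITS)
per_port = AGGREGATE_GBPS / PORTS

print(f"megapixels:        {megapixels:.1f}")
print(f"raw pixel payload: {payload:.1f} Gbit/s")
print(f"quoted link rate:  {AGGREGATE_GBPS} Gbit/s")
print(f"per CML port:      {per_port:.2f} Gbit/s")
```

Run as-is, this prints about 316.2 megapixels, a raw payload of roughly 455.4 Gbit/s, and about 5.60 Gbit/s per output port. The paper's 515 Gbit/s figure sits above the raw payload; neither the article nor the abstract explains the difference, so link encoding, synchronization, or non-active pixels are only plausible explanations, not confirmed ones.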

Extremely high-resolution video is typically created by stitching together footage from a multi-camera array, much as photographers stitch multiple images into large panoramas. Stitching video is more complex, however: there are far more frames (almost always at least 24 per second), and the motion inherent in video creates artifacts such as ghosting, blur, and discontinuities caused by parallax.

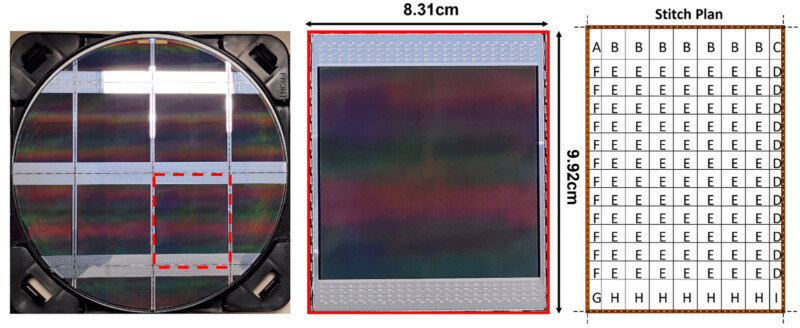

To overcome these issues, Forza Silicon and Sphere Entertainment moved the stitching to the sensor level, creating a huge 2D-stitched, 316-megapixel CMOS image sensor that captures roughly 18K x 18K video. In a stitched design, a single chip larger than one lithography exposure field (reticle) is built by repeating blocks of the design across the wafer. The technique is common in applications that demand extreme resolution, although it adds considerable complexity at the engineering level.

“As with any stitched design, it is important to co-design the sensor architecture along with the stitch plan to account for the required repetition of large areas of the design during stitching [6,7]. Because of the extreme physical size of this design, and thus the large amount of repetition, there was an unusually complex planning process to make sure the architecture and stitch plan satisfied all of the mechanical, optical, electrical, and process requirements,” the paper explains.

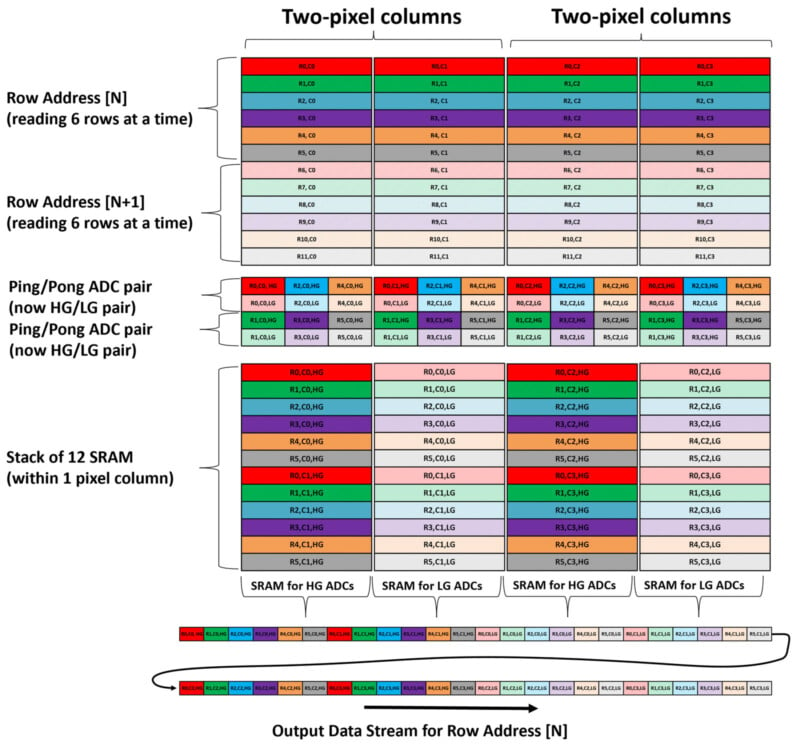

The paper also explains that the 316-megapixel rolling-shutter sensor offers different modes to suit filmmakers' needs: a high-frame-rate single-gain readout mode and an HDR mode that shoots at a reduced frame rate.

The single-gain mode can record full-resolution video at up to 120 frames per second, while the dual-gain mode, used for single-exposure HDR imagery, is capped at a still-quick 60 fps. Aronofsky’s latest film is presented at a 60p frame rate.

Of the dual-gain mode, the paper explains, “Whereas the single-gain mode uses the ADC array in a pipelined ping-pong-type operation, the dual-gain mode re-configures the ADC array such that the ‘ping’ ADCs sample the high-gain pixel readout and the ‘pong’ ADCs sample the low-gain pixel readout, and all ADCs convert at once. This effectively doubles the readout time in this mode and thus halves the frame rate.”
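Why sharing the ADC array halves the frame rate can be illustrated with a deliberately simplified timing model. This is our own sketch, not the paper's timing budget: it assumes each ADC bank needs one fixed conversion period per row and that nothing else costs time. The conversion period `T_CONV` is a hypothetical value chosen so the single-gain mode lands at the quoted 120 fps, and the helper names are illustrative.

```python
# Simplified two-bank ("ping"/"pong") ADC timing model, for illustration only.
# Assumptions: one fixed conversion period per bank per row, no other
# overhead. T_CONV is hypothetical, picked so single-gain mode gives 120 fps.

ROWS = 17_568
T_CONV = 2 / (120 * ROWS)   # hypothetical conversion period, seconds


def row_time(t_conv: float, dual_gain: bool) -> float:
    """Average time per row for a ping/pong ADC array."""
    if dual_gain:
        # Ping bank converts the high-gain sample and pong bank the low-gain
        # sample of the SAME row, simultaneously: one row per conversion period.
        return t_conv
    # Single gain: banks alternate rows, so one bank converts row n while the
    # other samples row n + 1, giving two rows per conversion period.
    return t_conv / 2


def frame_rate(rows: int, t_conv: float, dual_gain: bool) -> float:
    """Frames per second when every row is read once per frame."""
    return 1.0 / (rows * row_time(t_conv, dual_gain))


single = frame_rate(ROWS, T_CONV, dual_gain=False)
dual = frame_rate(ROWS, T_CONV, dual_gain=True)
print(f"single-gain: {single:.1f} fps, dual-gain: {dual:.1f} fps")
```

Under these assumptions the model reproduces the 120 fps and 60 fps figures. The absolute numbers depend entirely on the assumed conversion period (a real sensor may, for example, read several rows in parallel); the point is only the 2:1 ratio that the paper describes.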

On paper, this sounds similar to the dual output gain functionality of cameras like the Panasonic GH6 and Canon EOS C300 Mark III, and to the approach Arri uses in its Alexa family of cinema cameras.

The massive sensor's dynamic range is listed as 87 dB. Photographers usually think of dynamic range in stops, where each stop is a doubling of light. Taking one stop as roughly six decibels, the sensor's dynamic range works out to about 14.5 stops, which aligns with what YM Cinema reports.
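The conversion between decibels and stops, and a plausible origin of the 87 dB figure, can be sketched as follows. We assume the usual image-sensor convention of 20·log10 for signal ratios (so one stop is 20·log10(2), about 6.02 dB), and we assume, as one reading of the abstract rather than the paper's stated formula, that the composite dynamic range is the low-gain full well divided by the high-gain noise floor. Helper names are hypothetical.

```python
import math

# Figures from the paper's abstract.
HG_NOISE_E = 1.8          # high-gain temporal noise, electrons
LG_FULL_WELL_E = 41_000   # low-conversion-gain full well, electrons
QUOTED_DR_DB = 87.0       # composite dynamic range quoted in the paper

# One stop = a doubling of signal; in the 20*log10 convention that is ~6.02 dB.
DB_PER_STOP = 20 * math.log10(2)


def ratio_to_db(ratio: float) -> float:
    """Express a signal ratio in decibels (20*log10 convention)."""
    return 20 * math.log10(ratio)


def db_to_stops(db: float) -> float:
    """Convert decibels to stops (doublings of signal)."""
    return db / DB_PER_STOP


# Assumption: composite DR = largest low-gain signal / smallest high-gain noise.
composite_db = ratio_to_db(LG_FULL_WELL_E / HG_NOISE_E)

print(f"full well / noise: {composite_db:.2f} dB")
print(f"87 dB at 6 dB per stop:    {QUOTED_DR_DB / 6:.2f} stops")
print(f"87 dB at 6.02 dB per stop: {db_to_stops(QUOTED_DR_DB):.2f} stops")
```

The full-well-to-noise ratio comes out at about 87.15 dB, consistent with the quoted 87 dB. Using the exact 6.02 dB per stop gives about 14.45 stops instead of 14.5, a difference too small to matter in practice.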

The paper also lists key specifications of the image sensor, including a 4.3 µm pixel pitch, 18,000 x 17,568 active pixels, and 12-bit ADC precision. PetaPixel had already reported the sensor's imaging area, a remarkable 77.5 x 75.6 millimeters; for contrast, a full-frame image sensor measures 36 x 24 mm. The paper reveals that the full die, which holds the pixel array plus its surrounding circuitry, measures 99.2 x 83.1 mm.
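The relationship between these dimensions can be checked directly. The sketch below multiplies the active pixel counts by the 4.3 µm pitch and compares the result with a 36 x 24 mm full-frame sensor and with the die size; the derived active-array dimensions are our own calculation, not figures quoted in the paper.

```python
# Sensor geometry from the paper's specifications, compared with full frame.
# The active-array size is derived here (pixel count x pitch), not quoted.

PITCH_UM = 4.3
COLS, ROWS = 18_000, 17_568
DIE_MM = (99.2, 83.1)
FULL_FRAME_MM = (36.0, 24.0)

active_w = COLS * PITCH_UM / 1000   # width in mm
active_h = ROWS * PITCH_UM / 1000   # height in mm
active_area = active_w * active_h   # mm^2
ff_area = FULL_FRAME_MM[0] * FULL_FRAME_MM[1]
die_area = DIE_MM[0] * DIE_MM[1]

print(f"active array: {active_w:.1f} x {active_h:.1f} mm ({active_area:.0f} mm^2)")
print(f"vs full frame: {active_area / ff_area:.1f}x the area")
print(f"die: {die_area:.0f} mm^2, active fraction {active_area / die_area:.0%}")
```

This gives an active array of about 77.4 x 75.5 mm, close to the 77.5 x 75.6 mm figure (the small gap may come from pixels outside the active count, which is an assumption), or roughly 6.8 times the area of a full-frame sensor. The pixel array occupies about 71% of the die.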

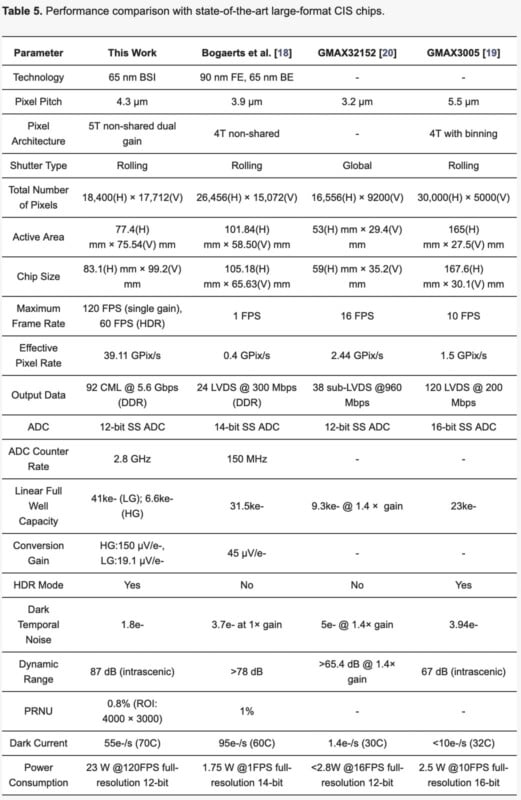

The paper also includes a chart comparing the Big Sky sensor's specifications with those of other state-of-the-art large-format chips, including the GMAX32152 and GMAX3005. The GMAX3005 has a larger pixel pitch (5.5 µm) but tops out at 10 frames per second. The GMAX32152, which uses a global shutter, can shoot at 16 fps but has a smaller pixel pitch.

Big Sky's sensor delivers the widest dynamic range and by far the fastest frame rate of the group, along with the second-largest recording area, just behind the 391-megapixel CMOS sensor developed by Bogaerts et al. for airborne mapping applications.

As Aronofsky explained in a recent segment on Late Night with Seth Meyers, there are significant challenges to working with a large-format camera like Big Sky. It is remarkably sophisticated hardware, and its custom-built software is equally cutting-edge.

The full paper, authored by Abhinav Agarwal, Jatin Nasrani, Sam Bagwell, Oleksandr Rytov, Varun Shah, Kai Ling Ong, Daniel Van Blerkom, Jonathan Bergey, Neil Kumar, Tim Lu, Deanan DaSilva, Michael Graee, and David Dibble, includes many more technical details, figures, and charts outlining precisely how the Big Sky’s groundbreaking image sensor operates in its various modes.

Update: The article has been updated to further emphasize that Forza and Sphere Entertainment co-developed the image sensor in the Big Sky Camera.