Report Says China Is Using AI Images to Spread Disinformation in the US

Microsoft analysts warned today that Chinese operatives are creating AI-generated images intended to spread disinformation among U.S. voters by targeting divisive political issues.

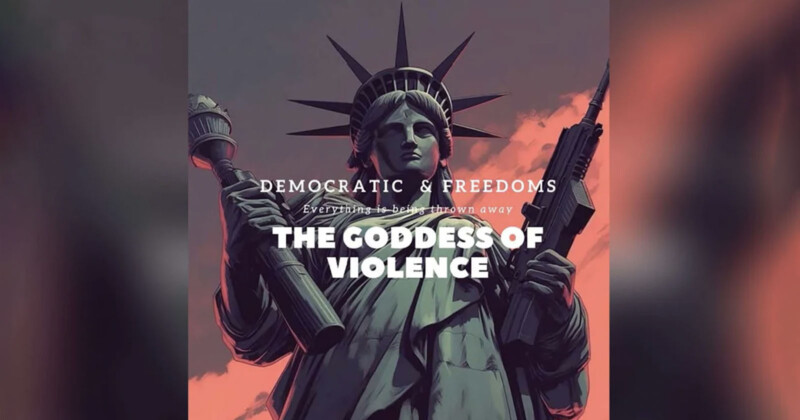

The AI images mimic what Americans might post online. In a report, Microsoft provided examples of the suspected AI images, including one showing the Statue of Liberty with the words “Everything is being thrown away” and an image referencing the Black Lives Matter movement.

The alleged propaganda is spread on U.S.-headquartered social media platforms such as Twitter (now X) and Facebook. Microsoft says it has seen real people repost the AI images from accounts "affiliated" with the Chinese Communist Party.

“We can expect China to continue to hone this technology over time and improve its accuracy, though it remains to be seen how and when it will deploy it at scale,” says Clint Watts, general manager of Microsoft’s Digital Threat Analysis Center.

The Microsoft report says that the AI images draw "higher levels of engagement from authentic social media users" than previous attempts.

However, Liu Pengyu, spokesperson for the Chinese Embassy in Washington, D.C., told CNN that the accusations are baseless.

“In recent years, some Western media and think tanks have accused China of using artificial intelligence to create fake social media accounts to spread so-called ‘pro-China’ information,” says Liu. “Such remarks are full of prejudice and malicious speculation against China, which China firmly opposes.”

Google Will Require Disclaimers for AI-generated Political Ads

Meanwhile, Google says it will require political ads generated with AI to be prominently disclosed. Bloomberg reports that starting in November, Google's advertisers will be required to disclose when election ads feature “synthetic content” that depicts “realistic-looking people or events.”

Google wants these ads to contain a “clear and conspicuous” label stating things like “This audio was computer generated” or “This image does not depict real events.”

Image credits: All images from the Microsoft Threat Intelligence report.