This Robot Camera Can Film ‘How-To’ Tutorial Videos

Researchers from the University of Toronto have created an intuitive robot camera designed to film “How-To” tutorial videos for YouTubers and video creators.

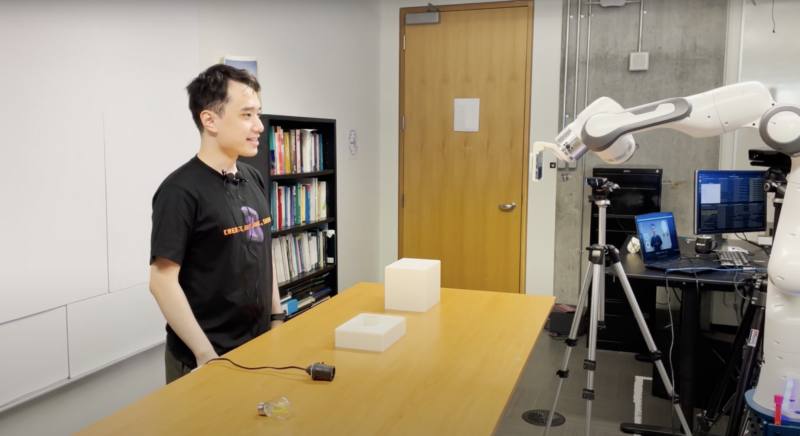

Dubbed “Stargazer,” the interactive camera follows implicit and explicit cues from the person in front of the lens, allowing creators who don’t have access to a film crew to make more dynamic videos than they could with a static camera.

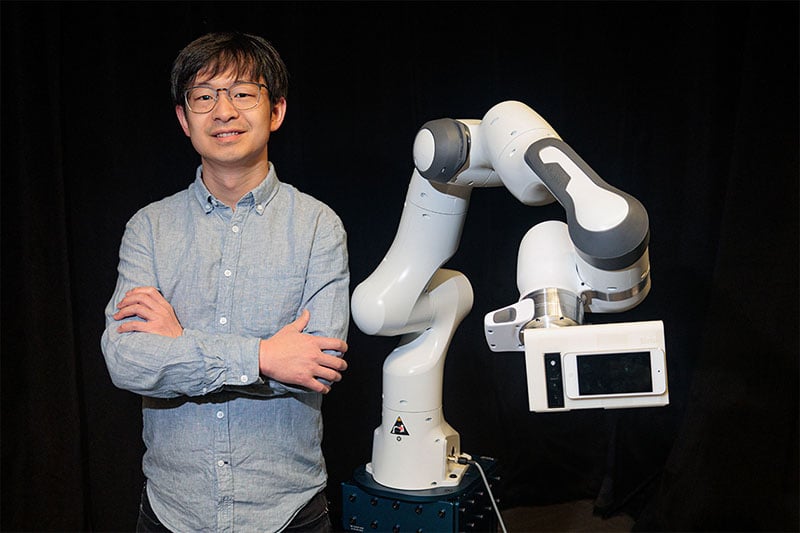

“The robot is there to help humans, but not to replace humans,” explains lead researcher Jiannan Li, a Ph.D. candidate in the University of Toronto’s Department of Computer Science. “The instructors are here to teach. The robot’s role is to help with filming — the heavy-lifting work.”

In a published paper, the researchers explain that Stargazer mounts a single camera on a robot arm with seven independent motors, which lets it track regions of interest.

The system’s camera behavior adjusts in response to subtle cues from the instructor, such as body movements, gestures, and speech, which are detected by the prototype’s sensors.

The instructor’s voice is recorded with a wireless microphone and sent to Microsoft Azure Speech-to-Text, a speech-recognition service. The transcribed text, along with a custom prompt, is then sent to GPT-3, a large language model, which labels the instructor’s intended camera behavior.
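As a rough illustration of the labeling step, here is a minimal Python sketch of the “transcript plus custom prompt, then label” pattern. It assumes speech-to-text has already produced a transcript, and it uses a hypothetical label set (`high_angle`, `close_up`, `follow_hand`, `none`) and a stand-in `fake_model` function in place of a real GPT-3 call. The prompt wording and labels are illustrative assumptions, not taken from the Stargazer paper.

```python
from typing import Callable

# Hypothetical intention labels; the paper's actual vocabulary is not given here.
LABELS = ("high_angle", "close_up", "follow_hand", "none")


def build_prompt(transcript: str) -> str:
    """Wrap an instructor's transcribed utterance in a classification prompt."""
    options = ", ".join(LABELS)
    return (
        "You label a tutorial instructor's camera intention.\n"
        f"Choose one of: {options}.\n"
        f'Utterance: "{transcript}"\n'
        "Label:"
    )


def parse_label(completion: str) -> str:
    """Map the model's free-text completion onto a known label, defaulting to 'none'."""
    words = completion.strip().split()
    if not words:
        return "none"
    candidate = words[0].strip(".,").lower()
    return candidate if candidate in LABELS else "none"


def label_intention(transcript: str, complete: Callable[[str], str]) -> str:
    """Send transcript + prompt to a language model (injected as `complete`) and return a label."""
    return parse_label(complete(build_prompt(transcript)))


# Hypothetical stand-in for a real GPT-3 call so the sketch runs offline.
def fake_model(prompt: str) -> str:
    return " high_angle" if "from the top" in prompt else " none"


print(label_intention("If you look at how I put A into B from the top", fake_model))
# prints "high_angle"
```

Injecting the model call as a function keeps the prompt-building and label-parsing logic testable without network access; in a real system, `complete` would wrap whichever language-model API is used, and the returned label would then drive the robot arm’s framing.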

According to the researchers, video makers don’t need to direct the robot with explicit commands, because it can interpret natural speech.

“For example, the instructor can have Stargazer adjust its view to look at each of the tools they will be using during a tutorial by pointing to each one, prompting the camera to pan around,” Krystle Hewitt explains in a press release.

“The instructor can also say to viewers, ‘If you look at how I put A into B from the top,’ Stargazer will respond by framing the action with a high angle to give the audience a better view.”

“The goal is to have the robot understand in real time what kind of shot the instructor wants,” adds Li.

“The important part of this goal is that we want these vocabularies [of cues] to be non-disruptive. It should feel like they fit into the tutorial.”

The researchers invited six instructors to test the robot camera on tutorials that included skateboard maintenance and interactive sculpture-making.

According to the researchers, all six instructors completed their tutorials within two takes without needing any additional controls, and each was satisfied with the quality of the video.

The team hopes to bring the technology to other camera platforms, such as drones and wheeled robots, so it can film in environments beyond a tabletop.

Future research could also investigate ways to detect a wider range of subtler cues from instructors by combining simultaneous signals from their gaze, posture, and speech.

Image credits: Photos courtesy of the University of Toronto.