Samsung Explains the Tech Behind its ‘Faked’ Moon Photos

Samsung has published a blog post responding to accusations that it fakes photos of the moon taken with its Galaxy smartphones. The post details the steps its artificial intelligence (AI) goes through to produce improved moon photos.

As The Verge points out, the blog post appears to be a lightly edited translation of one published in Korean last year. While it doesn’t reveal much new information about Samsung’s AI processing, it is the first time the company has provided this explanation in English.

In PetaPixel’s coverage earlier this week, a version of the AI model that Samsung shares in the new blog post was included as part of a possible explanation for the results alleged by Redditor ibreakphotos. As a recap, ibreakphotos intentionally blurred a photo of the moon with a Gaussian effect to remove any detail, displayed that photo on a computer monitor, and photographed it with his Galaxy smartphone. Despite the lack of detail in the source, the resulting photo showed elements that simply weren’t otherwise visible. That led many to assume Samsung was just overlaying existing images of the moon whenever its internal AI determined that a person was attempting to photograph the moon.
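To make the logic of that test concrete, here is a minimal Python sketch of why a Gaussian blur is a useful control. It is not ibreakphotos’ actual procedure and has nothing to do with Samsung’s code: the single test row of pixels, the `sigma` value, and the `detail_energy` measure are all assumptions chosen for illustration. The idea is simply that once fine texture has been blurred out of the source, any fine texture in the final photo had to come from somewhere else.

```python
import math

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian weights, truncated at roughly 3 sigma."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def gaussian_blur(row, sigma):
    """Blur one row of pixel values, clamping indices at the edges."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    last = len(row) - 1
    blurred = []
    for i in range(len(row)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)
            acc += weight * row[j]
        blurred.append(acc)
    return blurred

def detail_energy(row):
    """Mean absolute difference between neighboring pixels (a crude detail proxy)."""
    return sum(abs(a - b) for a, b in zip(row, row[1:])) / (len(row) - 1)

# Hypothetical test row: a smooth brightness ramp plus fine alternating texture.
row = [i / 255 + (0.2 if i % 2 else -0.2) for i in range(256)]
blurred = gaussian_blur(row, sigma=4.0)

print(f"detail before blur: {detail_energy(row):.4f}")
print(f"detail after blur:  {detail_energy(blurred):.4f}")
```

The blurred row keeps the overall brightness ramp but loses almost all of the pixel-to-pixel texture, which is the property the test relied on.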

Samsung denied that it was applying existing imagery over new photos.

“Samsung is committed to delivering best-in-class photo experiences in any condition. When a user takes a photo of the Moon, the AI-based scene optimization technology recognizes the Moon as the main object and takes multiple shots for multi-frame composition, after which AI enhances the details of the image quality and colors,” the company told PetaPixel.

“It does not apply any image overlaying to the photo. Users can deactivate the AI-based Scene Optimizer, which will disable automatic detail enhancements to the photo taken by the user.”

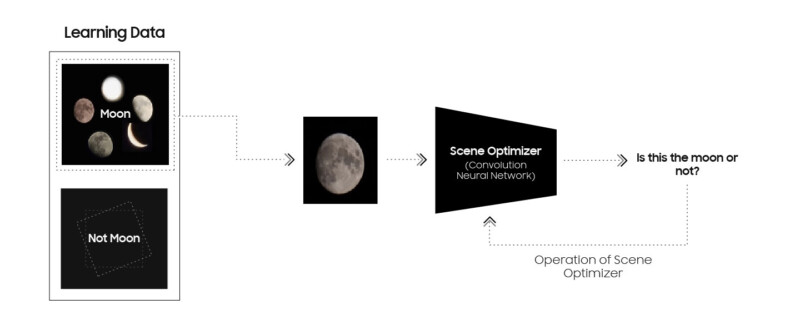

Samsung’s blog post explains the methods it uses and the steps it takes to produce a better-looking moon photo, all of which it says occur only when Scene Optimizer is on. These include multi-frame processing, noise reduction, and exposure compensation.

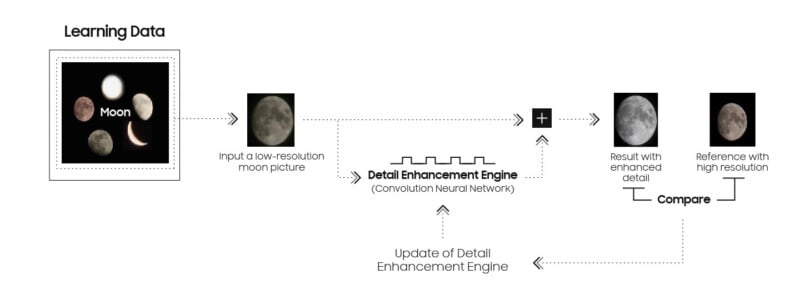
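Samsung does not publish its pipeline as code, so the following Python sketch is only a generic illustration of why multi-frame processing helps with noise. The simulated scene, the noise level, the frame count, and every function name here are assumptions, and the frames are treated as already perfectly aligned, which a real camera would have to work for. Averaging several noisy exposures of the same scene cancels much of the random sensor noise while leaving the real signal intact.

```python
import random

def capture_frame(scene, noise_sigma, rng):
    """Simulate one exposure: true pixel values plus random Gaussian sensor noise."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]

def merge_frames(frames):
    """Average aligned frames pixel by pixel."""
    count = len(frames)
    return [sum(values) / count for values in zip(*frames)]

def rms_error(image, scene):
    """Root-mean-square difference between an image and the true scene."""
    squared = [(a - b) ** 2 for a, b in zip(image, scene)]
    return (sum(squared) / len(squared)) ** 0.5

rng = random.Random(0)  # fixed seed so the run is repeatable
scene = [rng.uniform(0.0, 1.0) for _ in range(4096)]  # stand-in for the true image
frames = [capture_frame(scene, 0.1, rng) for _ in range(16)]

single_error = rms_error(frames[0], scene)
merged_error = rms_error(merge_frames(frames), scene)

print(f"single-frame RMS error: {single_error:.4f}")
print(f"16-frame merge RMS error: {merged_error:.4f}")
```

With independent noise, the error of the merged result shrinks roughly with the square root of the number of frames. Note that averaging like this can only recover detail the sensor actually captured. It cannot add detail that was never in the frames, which is why the separate AI detail enhancement step is the part drawing scrutiny.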

The company also specifically addresses its “AI detail enhancement engine,” which had not been explained clearly before this blog post.

“After Multi-frame Processing has taken place, Galaxy camera further harnesses Scene Optimizer’s deep-learning-based AI detail enhancement engine to effectively eliminate remaining noise and enhance the image details even further,” the company writes.

The ability of a Galaxy device to add detail that isn’t necessarily visible in the original capture is the crux of the controversy surrounding this technology. As The Verge notes, ibreakphotos claims that in a follow-up test the AI added moon-like texture to a plain gray square that had been placed on a blurry photo of the moon. What Samsung’s AI is doing would certainly explain why that happened.

This whole situation has become a discussion point about computational photography and the point at which consumers believe there is too much “thinking” or processing on the part of the phone. For years, many have been asking for the computational photography features that are common on smartphones to be integrated into standalone cameras. And while some companies like OM Digital and Canon have been dabbling in it, perhaps the blowback against Samsung here will serve as a cautionary tale.

At a certain point, people are going to start asking if the photo they took is actually a photo or something else. There is, obviously, a point where users think a company has gone too far.

Image credits: Samsung