Samsung Accused of Faking Zoomed Photos of the Moon with AI


Samsung has been accused of using artificial intelligence to doctor moon photos taken with its Galaxy S Ultra smartphones, drawing on pre-existing images to make the results look better than should be possible with such a small sensor.

Update 3/14: Samsung has released a statement denying the accusations that it is replacing a user’s capture with an existing photo.

“Samsung is committed to delivering best-in-class photo experiences in any condition. When a user takes a photo of the Moon, the AI-based scene optimization technology recognizes the Moon as the main object and takes multiple shots for multi-frame composition, after which AI enhances the details of the image quality and colors,” the company tells PetaPixel.

“It does not apply any image overlaying to the photo. Users can deactivate the AI-based Scene Optimizer, which will disable automatic detail enhancements to the photo taken by the user.”

The original story is below.

When Samsung first unveiled Space Zoom in the Galaxy S20 Ultra back in 2020, it touted the feature as an imaging marvel, though it was anything but. Little more than a digital crop on steroids, it melted pixels together into a gooey mess, rendering any image unusable. The company has since improved it to the point where you can get something decent in bright conditions, but the moon is a special case.

Not just because it’s most visible at night, but because snapping photos of the moon on the Galaxy S23 Ultra often leads to good results. Perhaps too good. That’s what Reddit user ibreakphotos alleged when conducting a simple test to gauge what the phone might be doing to capture it in detail.

The test was straightforward. They took a photo of the moon and intentionally blurred it with a Gaussian filter to strip out any detail. Then they displayed the blurred image on a computer screen and photographed it with the phone. Despite the lack of detail in the source, the resulting photo showed elements that simply weren’t visible on screen.
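To see why a Gaussian blur makes such a good control, it helps to look at what the filter does: each pixel is replaced by a weighted average of its neighbours, which wipes out fine, high-frequency texture such as small craters. The Python sketch below is a generic illustration, not the tool or settings ibreakphotos used (those aren’t known here). The function names, the 3-pixel sigma, the wrap-around edge handling and the checkerboard standing in for lunar texture are all assumptions chosen to keep the example short.

```python
import math


def gaussian_kernel(sigma: float, radius: int) -> list[float]:
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(row: list[float], kernel: list[float]) -> list[float]:
    """Convolve one row with the kernel, wrapping around at the edges (an assumption)."""
    radius = len(kernel) // 2
    n = len(row)
    return [
        sum(w * row[(i + k - radius) % n] for k, w in enumerate(kernel))
        for i in range(n)
    ]


def gaussian_blur(image: list[list[float]], sigma: float = 3.0) -> list[list[float]]:
    """Separable 2D Gaussian blur: blur every row, then every column."""
    kernel = gaussian_kernel(sigma, radius=max(1, int(3 * sigma)))
    rows = [blur_1d(r, kernel) for r in image]
    cols = [blur_1d(list(c), kernel) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def detail_energy(image: list[list[float]]) -> float:
    """Crude detail measure: sum of squared differences between horizontal neighbours."""
    return sum((r[i + 1] - r[i]) ** 2 for r in image for i in range(len(r) - 1))


# Hypothetical stand-in for fine lunar texture: a 32x32 black-and-white checkerboard.
size = 32
sharp = [[255.0 if (x + y) % 2 else 0.0 for x in range(size)] for y in range(size)]
blurred = gaussian_blur(sharp, sigma=3.0)

print(f"detail before: {detail_energy(sharp):.0f}")
print(f"detail after:  {detail_energy(blurred):.6f}")
```

The blurred copy keeps the same average brightness, but its detail measure collapses to almost nothing. That is the logic of the test: if crater texture shows up in the phone’s photo of such an image, it had to come from somewhere other than the pixels on the screen.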

The original:

The processed result:

Video of the process in action:

The controversy casts doubt on the legitimacy of Samsung’s moon shots, suggesting the company was misleading consumers about how Space Zoom actually captures the moon. Is artificial intelligence (AI) simply doing its thing in the background, or is Samsung slapping an image of the moon on top?

Character Flaws?

Space Zoom came under further scrutiny when another Reddit user, expectopoosio, posted a photo that seemed to show Korean or Chinese characters in place of windows on a faraway building. A screenshot of the 12-megapixel photo’s metadata shows it was captured on March 11, 2023, at a 230mm-equivalent focal length, ISO 500, and a 1/35-second shutter speed. It’s not clear whether the photographer used a tripod or a flat surface to steady the shot, but there’s enough detail to discern that the windows are overlaid by these characters.

On closer inspection, the strange effect peters out in the building next to it, closer to the edge. Another post in the same thread showed the same main building photographed in brighter daylight, with fewer windows covered by the characters.

As of this report, there was no metadata for these images, nor context as to whether certain modes or features were on at the time. One user attempted to use Bixby Vision to see if there was any way to translate the characters, only to come away with unintelligible “gibberish.”

Finding Clarity

Samsung has yet to respond to PetaPixel regarding the allegations and hasn’t clarified what its phones do to deliver these results. In the case of the moon, we do have some initial insight based on Samsung’s own explanation when it first unveiled the feature:

The moon recognition engine was created by learning various moon shapes from full moon to crescent moon based on images people actually see with their eyes on Earth.

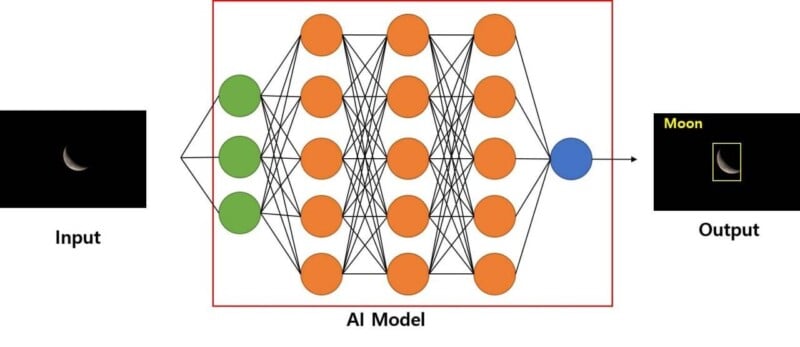

It uses an AI deep learning model to show the presence and absence of the moon in the image and the area (square box) as a result. AI models that have been trained can detect lunar areas even if other lunar images that have not been used for training are inserted.

Other publications exclusively covering Samsung and its products have weighed in, noting both the AI and image stabilization elements involved. Galaxy Ultra phones with Space Zoom best detect the moon when shooting at 25x because that’s when the AI kicks in to darken the sky around it and moderate the moon’s brightness so as not to blow out details. It also enables something called Zoom Lock, which combines optical image stabilization with digital image stabilization to keep things as steady as possible.

Samsung’s claim has long been that it captures multiple frames and smartly merges them together into one shot devoid of noise and blurry defects, not unlike the computational bracketing for shots in Night mode, for instance.
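The multi-frame idea is easy to sketch. Averaging N noisy exposures of a static scene shrinks random noise by roughly the square root of N, but the average can only converge on what the sensor actually recorded. The Python below is a simplified illustration using a plain per-pixel mean; it is not Samsung’s pipeline, which must also align frames and whose details aren’t public. The function names, the noise level and the one-dimensional “scene” are hypothetical.

```python
import random
import statistics


def capture_frames(scene: list[float], n_frames: int, noise_sigma: float, seed: int = 0) -> list[list[float]]:
    """Simulate n_frames exposures of a static scene, each with Gaussian sensor noise."""
    rng = random.Random(seed)
    return [[p + rng.gauss(0.0, noise_sigma) for p in scene] for _ in range(n_frames)]


def merge_frames(frames: list[list[float]]) -> list[float]:
    """Naive multi-frame merge: per-pixel mean across frames assumed to be aligned already."""
    return [statistics.fmean(pixel) for pixel in zip(*frames)]


def rms_error(a: list[float], b: list[float]) -> float:
    """Root-mean-square difference between two equal-length signals."""
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5


# Hypothetical 1D scene: fine detail alternating between 0 and 200 ...
sharp = [200.0 if i % 2 else 0.0 for i in range(1000)]
# ... which a strong blur would flatten to roughly 100 everywhere.
blurred = [100.0] * len(sharp)

# The camera only ever sees the blurred version, plus noise.
frames = capture_frames(blurred, n_frames=16, noise_sigma=10.0)
merged = merge_frames(frames)

print(f"single-frame noise: {rms_error(frames[0], blurred):.2f}")
print(f"16-frame noise:     {rms_error(merged, blurred):.2f}")
print(f"distance to sharp:  {rms_error(merged, sharp):.2f}")
```

Stacking 16 frames cuts the noise to roughly a quarter of a single frame’s, yet the merged result stays about as far from the sharp scene as the blurred input was. Merging removes noise; it does not restore alternating detail that none of the frames contained.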

Samsung’s “Detail Enhancement” engine is harder to pin down, because it’s the part that strips away noise and brings out the detail in the image. Samsung has previously stated that it doesn’t apply texture effects or overlay images on top, yet it’s difficult to understand how the phone’s AI can enhance details in a photo that otherwise had none.

The Moon as a Subject

Samsung isn’t alone in shooting for the moon this way. Xiaomi and Vivo have both included “Supermoon” modes in their flagship phones going back at least two or three years. Rather than building it into the main camera’s zoom, both brands offer it as a separate mode that works at any focal length but performs best at 10x zoom or higher.

PetaPixel has reached out to both companies to ask how they capture moon photos and will update the story when they comment.

In fairness, the moon is an easy target. It is static, has little depth, and is essentially lit by the same source at all times. That raises the question of whether any of these companies is simply slapping something on to produce the results everyone sees. This type of process currently doesn’t work with any other subject, including architectural or natural landmarks, where recognition would ostensibly let AI help produce a sharper, more detailed photo under varying conditions.

True or False

The word “fake” is a serious allegation by any photography standard, but the more damning part of the story may be how brands market capturing the moon with a phone. Phone image sensors and their limited optics make it impossible to capture a detailed shot of the moon without an AI assist, yet it’s not hard to read the advertising as suggesting the phone does exactly that on its own.

The AI presence in photographing the moon — handheld on a phone — isn’t a mystery. It’s how much of the photo is truly real and how much is interpolated that raises the most questions.