‘Photoguard’ Stops Your Pictures Being Manipulated by AI

A new program may prevent artificial intelligence (AI) tools from manipulating an individual’s photos and using them to create deepfakes.

A team of researchers led by Aleksander Madry, a computer science professor at the Massachusetts Institute of Technology (MIT), has developed a program called “Photoguard” that prevents AI from convincingly manipulating an individual’s photos.

In a paper published last month, the research team showed how Photoguard can “immunize” photos against AI edits.

The program uses adversarial perturbations, carefully computed changes to a photo’s pixels, to add noise that is invisible to the human eye. This essentially renders AI image generators incapable of producing realistic edits or deepfakes from the immunized photo.
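To give a rough sense of how such invisible noise can be computed, here is a minimal Python sketch of projected gradient descent (PGD), a standard way of building adversarial perturbations. It is loosely modeled on the idea of pushing an image’s internal representation toward a chosen target, and it is not Photoguard’s actual code: a tiny linear map stands in for a real image encoder, and the names `encode` and `immunize`, the 8/255 per-pixel budget, and the step size are all illustrative assumptions.

```python
# Toy sketch of "immunizing" an image with an L-infinity-bounded PGD perturbation.
# Assumption: a tiny linear map stands in for a real image encoder; the names
# encode() and immunize() are hypothetical, not Photoguard's API.
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def encode(w: Matrix, x: Vector) -> Vector:
    """Toy linear 'encoder': z = W x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def sign(v: float) -> float:
    """Return +1.0, -1.0, or 0.0 depending on the sign of v."""
    return float((v > 0) - (v < 0))


def immunize(x: Vector, w: Matrix, z_target: Vector,
             eps: float = 8 / 255, step: float = 1 / 255,
             iters: int = 50) -> Vector:
    """Return x + delta, with every |delta_i| <= eps, whose encoding is
    pushed toward z_target (pixel values assumed to lie in [0, 1])."""
    delta = [0.0] * len(x)
    for _ in range(iters):
        x_adv = [xi + di for xi, di in zip(x, delta)]
        residual = [zi - ti for zi, ti in zip(encode(w, x_adv), z_target)]
        # Gradient of ||W x_adv - z_target||^2 with respect to delta: 2 W^T residual.
        grad = [2 * sum(w[r][c] * residual[r] for r in range(len(w)))
                for c in range(len(x))]
        for i in range(len(x)):
            # Signed-gradient descent step, projected back into [-eps, eps] ...
            d = max(-eps, min(eps, delta[i] - step * sign(grad[i])))
            # ... then clipped so the perturbed pixel stays within [0, 1].
            delta[i] = max(0.0, min(1.0, x[i] + d)) - x[i]
    return [xi + di for xi, di in zip(x, delta)]


# Usage: a 4-pixel "image" and a 2-dimensional latent space.
x = [0.5, 0.5, 0.5, 0.5]
w = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, -1.0]]
x_imm = immunize(x, w, z_target=[0.0, 0.5])
print([round(v, 4) for v in x_imm])  # → [0.4686, 0.4686, 0.5314, 0.4686]
```

Each pixel moves by at most 8/255, a change too small to notice, yet the toy encoder’s output moves measurably toward the target. Real systems apply the same kind of bounded, gradient-guided search to a deep network with millions of pixels.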

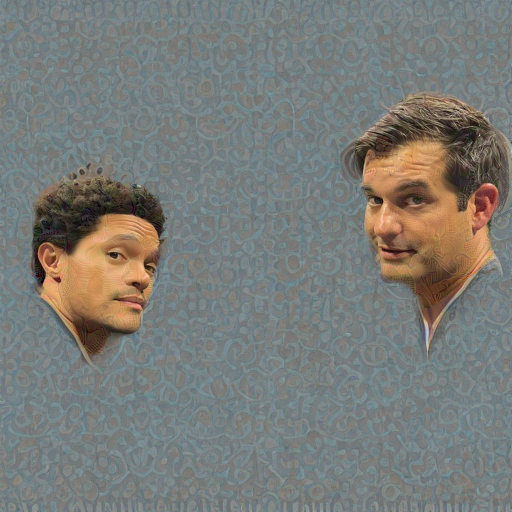

In the paper, the team of researchers uses the Photoguard software on an Instagram photo of comedians Trevor Noah and Michael Kosta attending a tennis match.

Without Photoguard, an AI image generator can easily transform the comedians’ photograph into an entirely different image.

However, when the researchers apply Photoguard, the invisible noise it adds to the photo of Noah and Kosta prevents an AI image generator from creating a convincing new picture from it.

In effect, this makes the original photo immune to malicious AI edits, while the added noise remains imperceptible to the viewer.

Once Photoguard has immunized the photo of Noah and Kosta, the AI can no longer edit it convincingly, and the result looks far less realistic than an edit of the unprotected photo.

Last year, Madry also tweeted another example of a photo with Noah that had been successfully immunized against AI with Photoguard.

In an interview with Gizmodo in November, MIT researcher Hadi Salman said that Photoguard takes only a few seconds to introduce noise into a photo.

Salman, a PhD student, says that higher-resolution images work even better because they contain more pixels that can be minutely perturbed.
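Salman’s point about resolution matches a common intuition from adversarial-examples research, offered here as an illustration rather than as the paper’s own analysis: even if each pixel may only change by a tiny amount, the combined effect on a model can grow with the number of pixels. The Python sketch below shows this for a hypothetical linear model with made-up, equal per-pixel weights; real image models are not linear, so it only illustrates the trend.

```python
# Toy illustration: with a fixed per-pixel budget eps, how far can a linear
# score w . x move? The worst case is delta_i = eps * sign(w_i), giving
# eps * sum(|w_i|), which grows as pixels (terms) are added.
from typing import List


def sign(v: float) -> float:
    """Return +1.0, -1.0, or 0.0 depending on the sign of v."""
    return float((v > 0) - (v < 0))


def max_linear_shift(weights: List[float], eps: float) -> float:
    """Largest change in sum(w_i * x_i) achievable with every |delta_i| <= eps."""
    delta = [eps * sign(wi) for wi in weights]
    return sum(wi * di for wi, di in zip(weights, delta))


eps = 8 / 255  # same tiny per-pixel budget at both resolutions
small = max_linear_shift([0.01] * 64, eps)    # hypothetical 8x8 "image"
large = max_linear_shift([0.01] * 4096, eps)  # hypothetical 64x64 "image"
print(f"{small:.4f} {large:.4f}")  # → 0.0201 1.2850
```

With the per-pixel change held constant, 64 times as many pixels yields a 64 times larger possible shift in this toy model.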

He hopes social media companies could adopt the Photoguard technology to ensure that images uploaded by their users are immunized against AI models.

This could help protect photos uploaded to social media in the future. However, millions of photos have already been used to train AI models without the consent of photographers or artists.

More information on the project is available on Madry’s MIT lab blog, with the published paper available on Cornell’s arXiv server under open-access terms.

The code for Photoguard is also publicly available.

Image credits: All photos sourced via “Raising the Cost of Malicious AI-Powered Image Editing” by Hadi Salman, Alaa Khaddaj, Guillaume Leclerc, Andrew Ilyas, and Aleksander Madry, and via Twitter/@alexs_madry