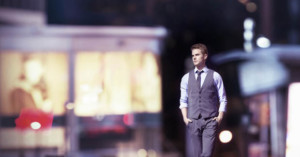

Portraits Shot Using the Brenizer Method, a 400mm Lens, and iPhones for Lighting

Photographer Benjamin Von Wong shot the portrait above a couple of days ago using a Nikon D4, a $9,000 Nikon 400mm f/2.8G lens, and a few iPhones for lighting. He achieved the extremely shallow depth of field with the Brenizer Method: 36 separate exposures, each shot wide open on the telephoto lens, stitched together into a single wide-angle frame.
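For a rough sense of what the stitching buys, the sketch below estimates the single-shot lens that would give a similar framing and depth of field. The function name `brenizer_equivalent` is hypothetical. The 6x6 grid, the 30% overlap between neighboring frames, and the nominal 36x24mm full-frame sensor are all assumptions for illustration; the article gives only the total of 36 exposures, not how they were arranged. It also uses a flat "virtual sensor" approximation that ignores panorama projection effects.

```python
import math


def brenizer_equivalent(focal_mm, f_number, cols, rows, overlap=0.3,
                        sensor_w_mm=36.0, sensor_h_mm=24.0):
    """Estimate the equivalent focal length and f-number of a stitched panorama.

    Assumes a regular cols x rows grid in which each frame overlaps its
    neighbor by the fraction `overlap` (both are illustrative assumptions).
    """
    # Each extra column or row adds only its non-overlapping share of a frame.
    virtual_w = sensor_w_mm * (1 + (cols - 1) * (1 - overlap))
    virtual_h = sensor_h_mm * (1 + (rows - 1) * (1 - overlap))

    # Ratio of the real sensor diagonal to the "virtual sensor" diagonal.
    scale = math.hypot(sensor_w_mm, sensor_h_mm) / math.hypot(virtual_w, virtual_h)

    # Focal length and f-number scale together, like an inverse crop factor.
    return focal_mm * scale, f_number * scale


# Hypothetical layout: 36 frames as a 6x6 grid with 30% overlap.
eq_focal, eq_f_number = brenizer_equivalent(400, 2.8, cols=6, rows=6)
print(f"~{eq_focal:.0f}mm at ~f/{eq_f_number:.2f}")
```

Under those assumed numbers, the result works out to roughly an 89mm lens at about f/0.6, an aperture that no conventional portrait lens offers. A different grid or overlap would shift the figures, so treat them as a ballpark rather than a description of the actual shoot.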