Scientists Invent World’s Fastest Camera That Shoots 156.3 Trillion Frames Per Second

A high-end mirrorless camera can shoot over 100 frames per second (FPS), but that pales in comparison to a camera system built by researchers in Canada that can shoot up to 156.3 trillion FPS.
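For a sense of scale, the quick Python calculation below converts both frame rates into the time between frames and the distance light travels in that time. The two frame rates are the figures quoted above. The helper names are our own illustrative choices.

```python
# Back-of-the-envelope numbers for the frame rates quoted in the article.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by SI definition


def frame_interval_s(fps: float) -> float:
    """Time between consecutive frames, in seconds."""
    return 1.0 / fps


def light_travel_per_frame_m(fps: float) -> float:
    """Distance light covers in vacuum during one frame interval, in metres."""
    return SPEED_OF_LIGHT_M_PER_S * frame_interval_s(fps)


mirrorless_fps = 100   # "over 100 FPS" for a high-end mirrorless camera
scarf_fps = 156.3e12   # 156.3 trillion FPS quoted for SCARF

print(f"Mirrorless frame interval: {frame_interval_s(mirrorless_fps) * 1e3:.1f} ms")
print(f"SCARF frame interval: {frame_interval_s(scarf_fps) * 1e15:.2f} fs")
print(f"Light travels {light_travel_per_frame_m(scarf_fps) * 1e6:.2f} µm per SCARF frame")
print(f"Speed-up factor: {scarf_fps / mirrorless_fps:.3e}")
```

At 156.3 trillion FPS, consecutive frames are roughly 6.4 femtoseconds apart, and in that time light travels just under 2 micrometres.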

The camera is called SCARF, which stands for swept-coded aperture real-time femtophotography. It was made for scientists studying micro-events that unfold too quickly for existing sensors to capture. For example, SCARF has recorded ultrafast events such as absorption in a semiconductor and the demagnetization of a metal alloy.

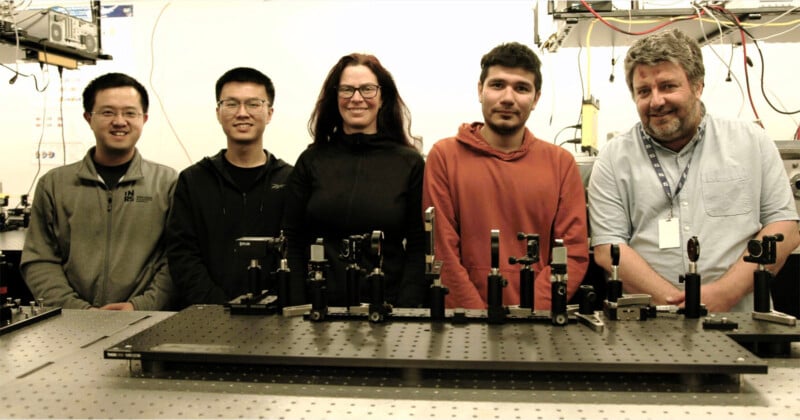

SCARF differs from previous ultrafast camera systems, which took individual frames one at a time and then compiled them into a movie to recreate the event. Instead, the team at the Énergie Matériaux Télécommunications Research Centre of the Institut national de la recherche scientifique (INRS) in Quebec, Canada, used passive femtosecond imaging, building on T-CUP (trillion-frame-per-second compressed ultrafast photography), a system that can capture trillions of frames per second.

This research was spearheaded by Professor Jinyang Liang, a pioneer in ultrafast imaging, whose 2018 breakthrough laid the groundwork for this latest project.

“Many systems based on compressed ultrafast photography have to cope with degraded data quality and have to trade sequence depth for field of view,” explains Miguel Marquez, postdoctoral fellow and co-first author of the study.

“These limitations are attributable to the operating principle, which requires simultaneously shearing the scene and the coded aperture.”

Professor Liang adds that “phenomena such as femtosecond laser ablation, shock-wave interaction with living cells, and optical chaos” can be studied with SCARF but not with previous ultrafast camera systems.

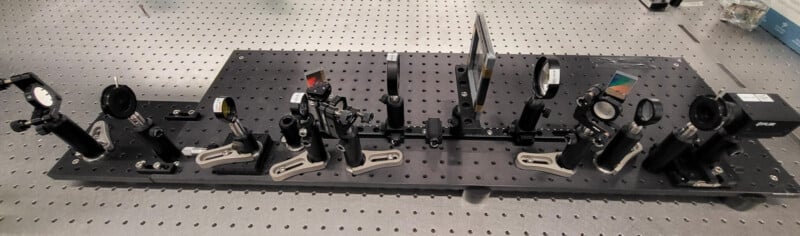

SCARF sends a “chirped” ultrashort laser pulse, whose wavelength changes over the course of the pulse, through the subject the camera is observing. The camera uses a computational imaging modality to capture spatial information by letting light reach the sensor at slightly different times. A computer algorithm then decodes these time-staggered inputs and reconstructs the full sequence. According to the team, this allows individual pixels on a charge-coupled device (CCD) camera to receive full-sequence encoding at rates of up to 156.3 THz, matching the camera's 156.3 trillion FPS frame rate.
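To make the idea of encoding time-staggered light onto a single sensor a bit more concrete, here is a toy Python sketch of the encoding half only. It uses a generic coded-aperture model that is our own simplifying assumption, not the published SCARF optics: a hypothetical random binary mask is shifted ("swept") one column per frame, multiplied with each frame, and the results are summed into one exposure. It ignores the chirp, dispersion and real sweep geometry, and the names `make_mask`, `encode` and `pixel_codes` are hypothetical.

```python
# Toy coded-aperture forward model (hypothetical simplification, not SCARF's
# actual optical design): T time-staggered frames are each multiplied by a
# binary mask that is swept one column per frame, then summed onto one
# 2-D sensor exposure.
import random
from typing import List, Tuple

Image = List[List[float]]
Mask = List[List[int]]


def make_mask(rows: int, cols: int, seed: int = 0) -> Mask:
    """Random binary (0/1) mask; callers make it wider than the sensor so it can be swept."""
    rng = random.Random(seed)
    return [[rng.randint(0, 1) for _ in range(cols)] for _ in range(rows)]


def encode(frames: List[Image], mask: Mask) -> Image:
    """Sum the mask-coded frames into a single sensor image.

    Frame t is multiplied by the mask window that starts at column t,
    i.e. the mask has moved t columns by the time frame t arrives.
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    sensor = [[0.0] * cols for _ in range(rows)]
    for t, frame in enumerate(frames):
        for r in range(rows):
            for c in range(cols):
                sensor[r][c] += mask[r][c + t] * frame[r][c]
    return sensor


def pixel_codes(mask: Mask, rows: int, cols: int, num_frames: int) -> List[List[Tuple[int, ...]]]:
    """Sequence of mask values each sensor pixel sees across the frames."""
    return [
        [tuple(mask[r][c + t] for t in range(num_frames)) for c in range(cols)]
        for r in range(rows)
    ]


T, ROWS, COLS = 4, 3, 5
mask = make_mask(ROWS, COLS + T - 1)  # extra T - 1 columns leave room for the sweep

# A single bright spot that moves one column to the right in each frame.
frames = [
    [[1.0 if (r == 1 and c == t) else 0.0 for c in range(COLS)] for r in range(ROWS)]
    for t in range(T)
]

snapshot = encode(frames, mask)
codes = pixel_codes(mask, ROWS, COLS, T)
for row in snapshot:
    print(row)
print("Code sequence seen by pixel (1, 0):", codes[1][0])
```

Recovering the individual frames from one snapshot is an underdetermined inverse problem (there are more unknowns than measured pixels), which is why compressed ultrafast imaging relies on reconstruction algorithms that exploit prior assumptions about the scene. That decoding step is not shown here.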

Remarkably, the team says it built SCARF “using off-the-shelf and passive optical components.” The research is published in Nature.

Image credits: INRS