What Does an AI Really Know About Your Photo?

A photographer and computer scientist wanted to see how much artificial intelligence (AI) can tell him about what’s in a photo with surprising results.

Adrian Graham built a “simple” app called ChatIMG; a multimodal AI that can answer questions about the contents of an image.

He wanted to know if AI Large Language Models (LLMs) can write useful captions for photos. After his experiment, Graham tells PetaPixel that he’s confident the technology is capable of doing this.

“The open-source multimodal model I used (BLIP-2) is very good at describing an image,” he tells PetaPixel.

“There’s a photo of my three-year-old daughter in a ball pit. The caption it wrote is: ‘A child laying in a ball pit filled with blue and pink balls’.”

“In contrast, when I search for ‘ball pit’ in Apple Photos, this photo doesn’t come up. The only photo that does appear is one where Apple’s OCR [Optical Character Recognition] recognized the text ‘ball pit’ on a sign.”

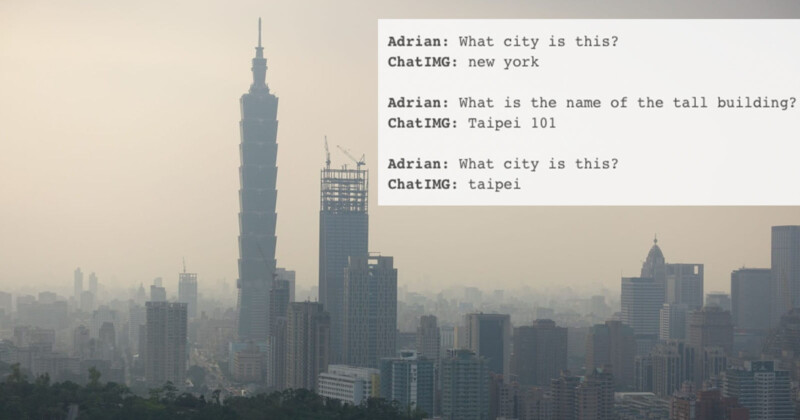

Graham began realizing that his AI model knew more about what’s in a photo than it was letting on.

“For instance, there is an image of a woman standing in front of the Golden Gate Bridge which it captioned as: ‘A woman in glasses standing near the ocean’,” he says.

“I discovered by asking it a couple of specific questions (e.g. What bridge is in the background?), it appeared to know a lot more than was in the original caption,” he explains.

“In this case, it identified both San Francisco and the Golden Gate Bridge, and provided a detailed description of what the woman was wearing.”

“At first I was excited that it seemed to know so much more than what was in the simple caption. However, I quickly discovered that it got these follow-up questions wrong as often as it got them right,” he continues.

“Even worse, it didn’t realize when it was on thin ice. In one test, I gave it a photo of David Bowie from Wikipedia and asked it: ‘Who is this?’ Its response: ‘Bob Dylan’. When I asked how certain it was, it responded with ‘100%’.”

AI is Both Smart and Dumb

Graham was left perplexed as to how the AI can be very accurate but also be totally wrong about something despite having total certainty.

“In the end, I came to think of this model like an alien with a powerful telescope looking down at Earth,” he says.

“It can see almost everything and describe it in good detail, but doesn’t fully understand the cultural context that gives many images their meaning.”

“I wouldn’t count on it to recognize a specific person or be able to explain the irony shown in a piece of street art,” he says.

“However, it does a remarkably good job of describing almost any image literally, which is an impressive accomplishment (and very useful for searching and organization).”

Graham says he was impressed with how good BLIP-2 was at describing a range of different images.

“I’m used to computer vision systems that are trained to recognize specific objects,” he says.

“They tend to work well for the objects they are trained on and don’t know what to make of anything else. This LLM feels different. I could give it any image and the vast majority of the time, it would respond with a reasonable description.”

Multimodal AIs appear to be just around the corner with OpenAI vaunting GPT-4 and Meta working on an open-source system called ImageBind.

Graham originally wrote an article about this subject for his Substack which you can read here.

Image credits: All photos by Adrian Graham unless otherwise stated.