Meta Unveils Open-Source Multimodal Generative AI System

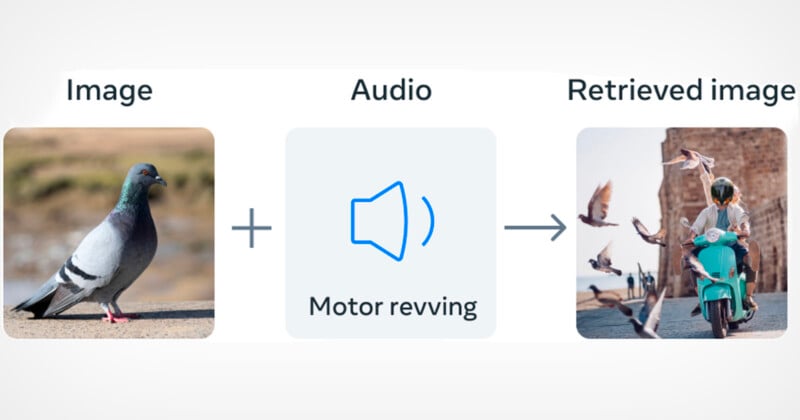

Meta has announced a new generative AI model called “ImageBind” that links together six different modalities: images, text, audio, depth, thermal, and IMU.

The intriguing new artificial intelligence (AI) model builds on the way AI image generators such as Midjourney, DALL-E, and Stable Diffusion work. Those systems link text and images together so the AI can learn the patterns in visual data.

Facebook owner Meta says that ImageBind is the first AI model to combine six types of data into a single “embedding space.” This means it can, for example, create an image from an audio clip, such as the sounds of a rainforest or a bustling market.
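To make the “single embedding space” idea more concrete, here is a minimal Python sketch. It is not Meta’s code. It assumes each modality has already been turned into a vector by some encoder, and the three-dimensional vectors, file names, and the `nearest_image` helper are all invented for illustration (real embeddings have far more dimensions and come from trained networks). Because the audio and the images live in the same space, an audio clip can be matched to its closest image by cosine similarity. Note that this shows cross-modal retrieval, which is a simpler task than generating a brand-new image.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy image embeddings. In a real system each vector would come
# from a trained image encoder; these numbers are made up for illustration.
IMAGE_EMBEDDINGS = {
    "rainforest.jpg": [0.9, 0.1, 0.0],
    "market.jpg": [0.1, 0.9, 0.2],
    "beach.jpg": [0.0, 0.2, 0.9],
}


def nearest_image(query_embedding, gallery):
    """Return the name of the image whose embedding is most similar to the query."""
    return max(gallery, key=lambda name: cosine_similarity(query_embedding, gallery[name]))


# A hypothetical embedding of a rainforest audio clip, placed in the SAME space
# as the images, so it can be compared with them directly.
rainforest_audio = [0.8, 0.2, 0.1]
print(nearest_image(rainforest_audio, IMAGE_EMBEDDINGS))  # → rainforest.jpg
```

Generating a picture from sound, rather than looking one up, would additionally need an image generator that accepts such an embedding as its input.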

The system is intended to mimic the way humans perceive the world, which is, of course, a multisensory experience.


“Today, we’re introducing an approach that brings machines one step closer to humans’ ability to learn simultaneously, holistically, and directly from many different forms of information — without the need for explicit supervision (the process of organizing and labeling raw data),” Meta writes in a press release.

The one modality you may not recognize is IMU, which stands for inertial measurement unit. IMUs are found in phones and smartwatches, where they perform a range of tasks, including switching a phone’s screen from landscape to portrait when the device is physically rotated.
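As a rough illustration of that screen-rotation task, here is a simplified Python sketch that uses only the accelerometer part of an IMU (IMUs typically also include a gyroscope). It is a hypothetical example, not how any particular phone or operating system implements rotation. It assumes x runs across the short edge of the screen, y along the long edge, and z out of the screen. A phone at rest mostly measures gravity, so whichever of x or y carries more of it is pointing “down.” The `margin` parameter is an invented hysteresis threshold that keeps the screen from flickering when the phone is held near 45 degrees.

```python
from typing import Tuple


def screen_orientation(accel: Tuple[float, float, float],
                       current: str = "portrait",
                       margin: float = 2.0) -> str:
    """Choose "portrait" or "landscape" from an accelerometer reading in m/s^2.

    Hypothetical sketch: assumes x = short edge, y = long edge, z = out of screen.
    """
    x, y, _z = accel
    # Gravity mostly along the long edge: the phone is upright.
    if abs(y) > abs(x) + margin:
        return "portrait"
    # Gravity mostly along the short edge: the phone is on its side.
    if abs(x) > abs(y) + margin:
        return "landscape"
    # Ambiguous (e.g. lying flat, or tilted near 45 degrees): keep the current mode.
    return current


print(screen_orientation((0.3, 9.7, 0.5)))  # → portrait
print(screen_orientation((9.6, 0.4, 0.8)))  # → landscape
print(screen_orientation((0.1, 0.2, 9.8), current="landscape"))  # → landscape
```

Keeping the previous orientation in the ambiguous zone is the design choice that makes the behavior feel stable; the actual thresholds used on real devices are not specified here.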


Right now, the model is merely a research project with no practical applications, but the technology is certainly fascinating, and its open-source release stands out against competitors such as OpenAI and Google, which shroud their models in secrecy.

As generative AI becomes an ever bigger issue, with some warning of potential catastrophes, the training data behind AI models is facing increasing scrutiny; the EU, for instance, is threatening to force AI companies to reveal their data.

As noted by The Verge, companies that oppose open-sourcing, such as OpenAI (which operates ChatGPT and DALL-E), argue that rivals could copy their work, which could be dangerous if malicious actors took advantage of the AI models.

However, open-source proponents argue that letting third parties scrutinize an AI system could actually improve a company’s work, which could in turn prove commercially beneficial.