Can Artificial Intelligence Perform Sound Mixing Better Than a Human?

In the latest episode of “Film Science,” Syrp Lab shows off the prowess of artificial intelligence (AI) when it comes to sound mixing. Alongside the human vs. AI component, Syrp Lab’s new video discusses ways video editors can achieve better audio with sound mixing, whether using AI-powered tools or not.

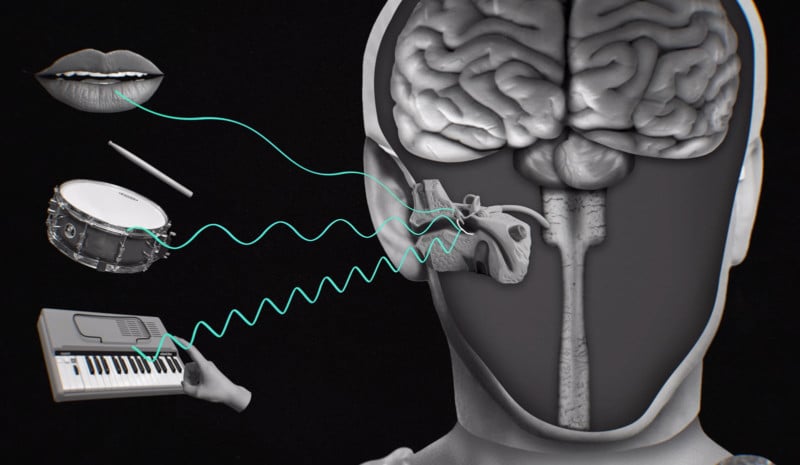

Before seeing what AI sound mixing technology can achieve, it’s important to discuss the fundamentals of sound. At a broad level, a sound is a vibration that travels as energy, or an acoustic wave, through a transmission medium, like air. The human ear and brain work together to transform the sound waves that enter the ear into electrical impulses that can be interpreted as intelligible sounds.

Sound is described by its amplitude and frequency. Amplitude is the height of the sound wave, or the strength of a sound; a sound with greater amplitude is louder. Frequency, which listeners perceive as pitch, is the number of times a sound pressure wave repeats per second. The more often it repeats, the higher its frequency and pitch. Amplitude is measured in decibels (dB) and frequency in hertz (Hz).
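To make these two quantities concrete, here is a minimal Python sketch that generates a pure tone and reports its peak level in decibels relative to full scale (dBFS). The function names, the 48 kHz sample rate, and the 440 Hz test tone are assumptions chosen for illustration; none of them come from Syrp Lab's video.

```python
import math

def sine_wave(frequency_hz, amplitude, duration_s=1.0, sample_rate=48000):
    """Return samples of a pure tone; amplitude 1.0 is digital full scale."""
    n = int(duration_s * sample_rate)
    return [amplitude * math.sin(2 * math.pi * frequency_hz * i / sample_rate)
            for i in range(n)]

def peak_dbfs(samples):
    """Peak level in dB relative to full scale (0 dBFS = amplitude 1.0)."""
    peak = max(abs(s) for s in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")

# Two tones with the same frequency (pitch) but different amplitudes.
quiet = sine_wave(440, 0.25)
loud = sine_wave(440, 0.5)

# Doubling the amplitude raises the level by 20 * log10(2), roughly 6 dB.
difference_db = peak_dbfs(loud) - peak_dbfs(quiet)
print(f"Level difference: {difference_db:.2f} dB")
```

Because the decibel scale is logarithmic, doubling a wave's amplitude adds about 6 dB no matter how loud the sound was to begin with.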

Sound mixing is the process of changing parts of an audio recording to improve its overall quality. This might include changing the amplitude of specific sounds to expand or reduce the audio's dynamic range. It may also involve boosting or cutting particular frequencies to change the tonal balance of different audio sources. Much like editing the basic parameters of a photo, such as exposure and color balance, sound mixing can be motivated by technical reasons, such as making a person easier to hear and understand, and by artistic ones, such as conveying different emotions by altering a soundscape.

Syrp Lab enlisted the help of Alex Knickerbocker, a professional recordist, sound engineer, and audio mixer, to help explain how to use sound mixing to adjust amplitude and frequency to produce better-sounding audio.

“With mixing specifically, it’s all about taking really well-done source materials and balancing them to accentuate their cinematic feel and appeasement,” Knickerbocker says.

While mixing sound for YouTube differs from Knickerbocker’s work for major motion picture studios, the same basics apply.

“You want to get good, clean dialogue recorded. Once you have dialogue in, it’s all about making it as listenable as possible,” he adds.

This includes removing clicks, pops, and other distracting noises. It also means using equalization (EQ) to reshape the sound by adjusting the amplitude of particular frequencies, which makes voices easier to hear and understand and more pleasant to listen to.
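As a rough illustration of what an EQ does, the Python sketch below applies a one-pole low-pass filter, about the simplest possible way to turn down high frequencies while leaving low ones nearly untouched. It is a crude stand-in, not the parametric EQ found in editing software, and the function names, the 1 kHz cutoff, and the test tones are all assumptions made for the example.

```python
import math

def one_pole_low_pass(samples, cutoff_hz, sample_rate=48000):
    """Attenuate content above cutoff_hz with a simple one-pole filter."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)  # move the output a fraction toward the input
        out.append(y)
    return out

def tone(frequency_hz, duration_s=0.5, sample_rate=48000):
    """Full-scale sine wave used as a test signal."""
    n = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * frequency_hz * i / sample_rate)
            for i in range(n)]

def rms(samples):
    """Root-mean-square level, a common measure of average loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

low, high = tone(100), tone(8000)

# Fraction of each tone's level that survives a 1 kHz low-pass filter.
low_kept = rms(one_pole_low_pass(low, 1000)) / rms(low)
high_kept = rms(one_pole_low_pass(high, 1000)) / rms(high)
print(f"100 Hz kept: {low_kept:.2f}, 8 kHz kept: {high_kept:.2f}")
```

The 100 Hz tone passes through almost unchanged while the 8 kHz tone is strongly attenuated. A real EQ works on the same principle but lets the mixer boost or cut several frequency bands independently.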

Many content creators edit videos that combine dialogue with a background music track. In that scenario, good sound mixing isn’t just a matter of adjusting the volume (amplitude) of the music until the dialogue is easy to hear. It also involves shaping the frequency content of each audio track so that the tracks complement each other rather than compete for the viewer’s ear.

This ties into the three basic steps for mixing typical audio, as Syrp Lab outlines. The first step is applying equalization to the mic track, ensuring that there aren’t any harsh frequencies that are unpleasant to listen to. Next is compression, which evens out the overall amplitude of the audio so that no sounds are especially quiet or loud. The final step is applying equalization to background sounds to eliminate competing frequencies.
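The compression step can be sketched in a few lines of Python. This is a deliberately simplified, sample-by-sample compressor: real compressors track a smoothed signal envelope with attack and release times, which this example omits. The function name and the default threshold, ratio, and makeup gain values are assumptions for illustration, not settings from the video.

```python
import math

def compress(samples, threshold_db=-12.0, ratio=4.0, makeup_db=0.0):
    """Reduce levels above threshold_db by the given ratio (hard knee)."""
    makeup = 10 ** (makeup_db / 20)
    out = []
    for s in samples:
        level = abs(s)
        if level == 0:
            out.append(0.0)
            continue
        level_db = 20 * math.log10(level)
        if level_db > threshold_db:
            # Above the threshold, every `ratio` dB of input yields 1 dB of output.
            target_db = threshold_db + (level_db - threshold_db) / ratio
            gain = 10 ** ((target_db - level_db) / 20)
        else:
            gain = 1.0  # quieter samples pass through unchanged
        out.append(s * gain * makeup)
    return out

# A loud peak (0 dBFS) and a quiet sample (-20 dBFS).
loud_out, quiet_out = compress([1.0, 0.1])
print(f"loud: {loud_out:.3f}, quiet: {quiet_out:.3f}")
```

With a threshold of -12 dB and a 4:1 ratio, the full-scale peak comes out at -9 dB (about 0.355) while the quiet sample is untouched, so the gap between them narrows. Adding makeup gain afterward raises the whole signal, which is how compression ends up making quiet passages easier to hear.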


With AI sound mixing tools, however, such as the AI dialogue leveler and AI voice isolation released in DaVinci Resolve 18.1, manual mixing skills may no longer be necessary. Syrp Lab tests this idea by putting three hand-mixed audio samples up against DaVinci Resolve’s and Adobe Audition’s AI sound mixing tools.

As it turns out, according to the judges (Syrp Lab employees), DaVinci Resolve’s AI tools perform exceptionally well when isolating dialogue in noisy environments. However, there’s still a subjective element to sound quality that AI can’t handle — at least not yet.

Knickerbocker says that AI’s best use case for audio mixing now is cleaning up audio. This is especially true when you have poorly recorded audio that makes it difficult to hear someone speaking.

“AI tools can help people who really don’t know anything about audio restoration get something that’s passable out of something that was utter garbage before,” says Knickerbocker.

Image credits: Syrp Lab