New LG Mouse Can Scan Photos with the Flick of a Wrist

In the future, after you print photos onto paper using your camera, you’ll be able to scan …

Facial recognition technology has become ubiquitous in recent years, being found in everything from the latest compact camera to websites like Facebook. The same may soon be said about location recognition.

What if in the future, the human eye itself could be turned into a camera by simply reading and recording the data that it sends to the brain? As crazy as it sounds, researchers have already accomplished this at a very basic level.

You might soon be able to control Nikon DSLRs using only your emotions. A patent published recently shows that …

A company called Lytro has just launched with $50 million in funding and, unlike Color, it has technology that is pretty mind-blowing. It's designing a camera that may be the next giant leap in the evolution of photography: a consumer camera that shoots photos that can be refocused at any time. Instead of capturing a single plane of light like traditional cameras do, Lytro's light-field camera will use a special sensor to capture the color, intensity, and vector direction of the rays of light (data that's lost with traditional cameras).

[...] the camera captures all the information it possibly can about the field of light in front of it. You then get a digital photo that is adjustable in an almost infinite number of ways. You can focus anywhere in the picture, change the light levels — and presuming you’re using a device with a 3-D ready screen — even create a picture you can tilt and shift in three dimensions. [#]

In Lytro's interactive sample photographs, you can choose exactly where the focus point is as you're viewing them! The company plans to unveil its camera sometime this year, with the goal of having the camera's price be somewhere between $1 and $10,000...
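A light-field camera effectively records many slightly offset views through different parts of the lens, which is what makes after-the-fact refocusing possible. The sketch below illustrates the classic shift-and-add idea on hypothetical 1-D data: each sub-aperture view is shifted in proportion to its aperture offset and the views are averaged, so objects at the chosen depth line up and stay sharp while everything else blurs. The names `refocus_1d`, `views` and `slope` are illustrative, and this is not a description of Lytro's own software.

```python
from typing import Dict, List


def refocus_1d(views: Dict[int, List[float]], slope: int) -> List[float]:
    """Shift-and-add refocusing over hypothetical 1-D sub-aperture views.

    `views` maps an aperture offset u to the row of pixels seen from that offset.
    `slope` is an integer disparity: points whose image moves by `slope` pixels
    per unit of u end up aligned (in focus); other points are spread out (blurred).
    """
    width = len(next(iter(views.values())))
    out = []
    for x in range(width):
        total = 0.0
        for u, row in views.items():
            xs = x + u * slope  # where this output pixel lands in view u
            if 0 <= xs < width:  # samples outside the view count as black
                total += row[xs]
        out.append(total / len(views))  # average across all views
    return out


# A single bright point that shifts one pixel per aperture step.
views = {u: [1.0 if x == 5 + u else 0.0 for x in range(11)] for u in (-1, 0, 1)}
sharp = refocus_1d(views, slope=1)    # focused on the point
blurry = refocus_1d(views, slope=0)   # focused elsewhere
```

With `slope=1` the point collapses into a single bright pixel; with `slope=0` its energy is smeared across three neighboring pixels, which is the refocusing effect in miniature.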

If you think the 5-megapixel sensor found on the iPhone 4 is good, wait till you see the camera found on the next iPhone: it's reportedly going to be an 8-megapixel sensor made by Sony. The Street wrote back in 2010 that the next version of the iPhone, due in 2011, would pack an 8-megapixel Sony sensor rather than the 5-megapixel OmniVision one found in the current phone, and Sony's CEO Howard Stringer seems to have confirmed that today in an interview with the Wall Street Journal.

The blogosphere is abuzz today over a rumor that Canon and Apple may be planning to collaborate on an …

If you thought Google Earth was cool, check out the work being done by Swedish corp …

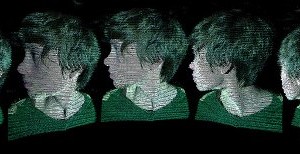

Kyle McDonald is a programmer building open-source utilities for real-time 3D scanning using structured light, a technique that requires only a projector and a cheap camera.
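Structured light works by projecting known patterns onto a scene and observing how the surface distorts them. One common scheme, assumed here since the post doesn't say which patterns McDonald's utilities use, is three-step phase shifting: three sinusoidal fringe patterns offset by 120 degrees are projected in turn, and each camera pixel's three brightness readings pin down the fringe phase, which identifies the projector column that pixel sees. The helper names below are hypothetical, and turning phase into depth (phase unwrapping plus projector-camera calibration) is left out.

```python
import math


def fringe_intensities(phase, ambient=0.5, amplitude=0.4):
    """Simulate the brightness one camera pixel records under three sinusoidal
    patterns shifted by -120, 0 and +120 degrees (illustrative model)."""
    shifts = (-2 * math.pi / 3, 0.0, 2 * math.pi / 3)
    return tuple(ambient + amplitude * math.cos(phase + s) for s in shifts)


def wrapped_phase(i1, i2, i3):
    """Recover the fringe phase, wrapped to [-pi, pi], from three samples.

    i1 - i3 is proportional to sin(phase) and 2*i2 - i1 - i3 to cos(phase),
    so the unknown ambient light and surface reflectance cancel out.
    """
    return math.atan2(math.sqrt(3) * (i1 - i3), 2 * i2 - i1 - i3)


# A pixel whose true phase is 1.0 rad, seen on a dim, low-contrast surface.
readings = fringe_intensities(1.0, ambient=0.2, amplitude=0.1)
recovered = wrapped_phase(*readings)
```

Because the phase comes from a ratio of brightness differences, the same pixel gives the same answer whether the surface is bright or dark, which is part of why this approach works with an inexpensive camera.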

Adobe is working on a new feature for Photoshop called "Content-Aware Fill", and has posted a mind-boggling demonstration of it on YouTube.

Students at the University of Tromso in Norway have created an interactive display wall using 28 separate projectors, which together form a 7168×3072-pixel (roughly 22-megapixel) display. Interacting with the display simply involves placing your hands in front of it. Touching the display itself is not necessary, and multitouch is supported. What better way to demonstrate the capabilities of such a system than zooming through a gigapixel photograph?
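The quoted resolution is consistent with a grid of 7 columns by 4 rows of 1024×768 projectors (7 × 4 = 28). That layout is an assumption rather than something the post states, and the quick check below ignores any overlap or edge blending between neighboring projector images.

```python
def wall_resolution(cols, rows, proj_w, proj_h):
    """Total pixel dimensions of a tiled projector wall, assuming the tiles
    butt up against each other with no overlap (hypothetical layout)."""
    width = cols * proj_w
    height = rows * proj_h
    return width, height, width * height


# Assumed layout: 7 x 4 projectors, each 1024 x 768 pixels.
width, height, pixels = wall_resolution(7, 4, 1024, 768)
megapixels = pixels / 1_000_000
```

This gives 7168 × 3072 = 22,020,096 pixels, or about 22 megapixels, matching the figure above.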

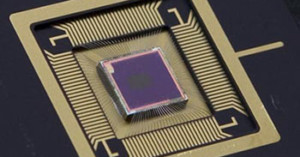

InVisage, a California-based startup, has announced a new image sensor technology that it claims is up to four times more sensitive than traditional sensor technologies.

Its product, QuantumFilm, is a layer of semiconductor material, added on top of the traditional silicon, that uses quantum dots to gather light.

In the future, we might be able to roam around a 3D virtual representation of our world, where everything we see was automatically generated from photographs taken at the real locations.

Vision researchers at the University of Washington and Cornell University have been working on turning photographs of things in the real world into three-dimensional representations. This research could eventually turn snapshots into virtual buildings, neighborhoods, and possibly cities.
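Reconstruction systems of this kind generally rely on structure from motion: matching features across many photos, estimating where each camera was, and then triangulating each matched feature into a 3D point. The sketch below covers only that last step, using the simple midpoint method and assuming the camera centers and viewing-ray directions are already known (in practice they are estimated from the photos). It is a generic illustration with hypothetical names, not the researchers' pipeline.

```python
from typing import Tuple

Vec = Tuple[float, float, float]


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin: Vec, direction: Vec, t: float) -> Vec:
    """Point at parameter t on the ray origin + t * direction."""
    return tuple(o + t * d for o, d in zip(origin, direction))


def triangulate_midpoint(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> Vec:
    """Estimate a 3D point seen from two cameras.

    c1, c2 are camera centers; d1, d2 are ray directions toward the feature.
    Noisy rays rarely intersect exactly, so we find the closest point on each
    ray and return the midpoint between them.
    """
    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; depth is undetermined")
    s = (b * e - c * d) / denom  # parameter of closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of closest point on ray 2
    p, q = _along(c1, d1, s), _along(c2, d2, t)
    return tuple((pi + qi) / 2 for pi, qi in zip(p, q))


# Two cameras 4 units apart, both looking at the point (1, 2, 5).
point = triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 2.0, 5.0),
                             (4.0, 0.0, 0.0), (-3.0, 2.0, 5.0))
```

Repeating this for thousands of matched features across many photos produces the point clouds from which virtual buildings and neighborhoods can be built.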