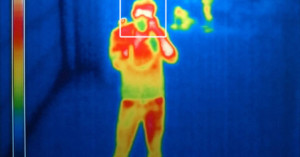

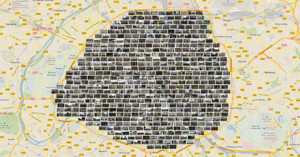

Scientists Building Security Cameras That Can “See” Crimes Before They Happen

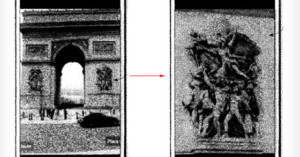

Remember those weird floating "precog" psychics in the movie Minority Report? They could foresee crimes before they happened, allowing law enforcement to stop them from ever taking place. While that kind of sci-fi foreknowledge will almost certainly never exist, scientists are working on an eerily similar system that uses cameras and artificial intelligence, one they hope will be able to "see" crimes before they occur.